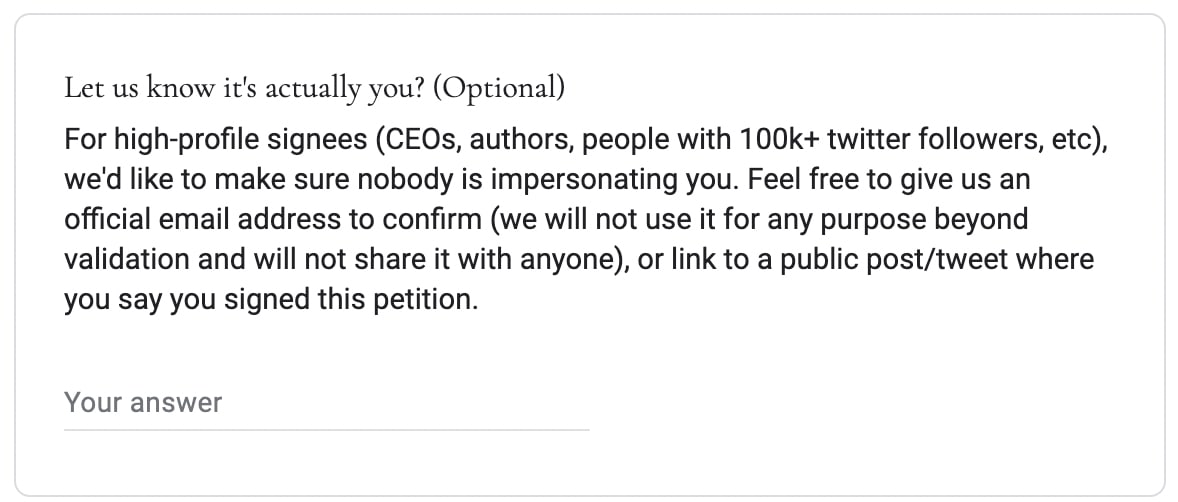

I think this letter is not robust enough against people submitting false names. Back when Jacob and I put together DontDoxScottAlexander.com we included this section, and I would recommend doing something pretty similar:

I think someone checking some of these emails would slow down high-profile signatories by 6-48 hours, but sustain trust that the names are all real.

I'm willing to help out if those running it would like, feel free to PM me.

Oh no. Apparently also Yann LeCun didn't really sign this.

Indeed. Among the alleged signatories:

Xi Jinping, Poeple's Republic of China, Chairman of the CCP, Order of the Golden Eagle, Order of Saint Andrew, Grand Cordon of the Order of Leopold

Which I heavily doubt.

I concur, the typo in "Poeple" does call into question whether he has truly signed this letter.

I believe the high-profile names at the top are individually verified, at least, and it looks like there's someone behind the form deleting fake entries as they're noticed. (E.g. Yann LeCun was on the list briefly, but has since been deleted.)

When we did Scott's petition, names were not automatically added to the list, but each name was read by me-or-Jacob, and if we were uncertain about one we didn't add it without checking in with others or thinking it over. This meant that added names were staggered throughout the day because we only checked every hour or two, but overall prevented a number of fake names from getting on there.

(I write this to contrast it with automatically adding names then removing them as you notice issues.)
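To make the contrast concrete, here is a minimal sketch of a "hold, then publish" signature list. The class and method names are hypothetical, not anything the DontDoxScottAlexander.com site or FLI actually ran; the only point is that a submitted name stays invisible until a human approves it.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SignatureList:
    """Hypothetical petition backend: names wait in `pending` until reviewed."""
    pending: List[str] = field(default_factory=list)
    published: List[str] = field(default_factory=list)

    def submit(self, name: str) -> None:
        # Submission never touches the public list.
        self.pending.append(name)

    def review(self, name: str, approve: bool) -> None:
        # A human reviewer decides; rejected names are never shown publicly.
        self.pending.remove(name)
        if approve:
            self.published.append(name)


signatures = SignatureList()
signatures.submit("A. Real Researcher")
signatures.submit("Obviously Fake Head of State")
signatures.review("A. Real Researcher", approve=True)
signatures.review("Obviously Fake Head of State", approve=False)
```

The cost is the delay between `submit` and `review`; the benefit is that a fake name never appears on the public list at all, rather than appearing and then being removed.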

LeCun heard he was incorrectly added to the list, so the reputational damage still mostly occurred.

Yeah this seems a bit of a self-defeating exercise.

Who made the decision to go ahead with this method of collecting signatories?

They didn't even have a verification hold on submitted names...

It seems like there are a lot of negative comments about this letter. Even if it does not go through, it seems very net positive because it makes explicit an expert position against large language model development due to safety concerns. This has several major effects: it enables scientists, lobbyists, politicians and journalists to refer to this petition to validate their potential work on the risks of AI, it provides a concrete action step towards limiting AGI development, and it incentivizes others to think in the same vein about concrete solutions.

I've tried to formulate a few responses to the criticisms raised:

- "6 months isn't enough to develop the safety techniques they detail": Besides it being at least 6 months, the proposals seem relatively reasonable within something as farsighted as this letter. Shoot for the moon and you might hit the sky, but this time the sky is actually happening and work on many of their proposals is already underway. See e.g. EU AI Act, funding for AI research, concrete auditing work and safety evaluation on models. Several organizations are also working on certification and the scientific work towards watermarking is sort

AI labs and independent experts should use this pause to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts. These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt.

That's nice, but I don't currently believe there are any audits or protocols that can prove future AIs safe "beyond a reasonable doubt".

In parallel, AI developers must work with policymakers to dramatically accelerate development of robust AI governance systems. These should at a minimum include: new and capable regulatory authorities dedicated to AI; oversight and tracking of highly capable AI systems and large pools of computational capability; provenance and watermarking systems to help distinguish real from synthetic and to track model leaks; a robust auditing and certification ecosystem; liability for AI-caused harm; robust public funding for technical AI safety research; and well-resourced institutions for coping with the dramatic economic and political disruptions (especially to democracy) that AI will cause.

Would the authors also like the moon on a stic...

I don't agree with the recommendation, so I don't think I should sign my name to it.

To describe a concrete bad thing that may happen: suppose the letter is successful and then there is a pause. Suppose a bunch of AI companies agree to some protocols that they say "ensure that systems adhering to them are safe beyond a reasonable doubt". If I (or another signatory) were then to say "But I don't think that any such protocols exist", I think they'd be within their rights to say "Then why on Earth did you sign this letter saying that we could find them within 6 months?" and then not trust me again to mean the things I say publicly.

The letter says to pause for at least 6 months, not exactly 6 months.

So anyone who doesn't believe that protocols exist to ensure the safety of more capable AI systems shouldn't avoid signing the letter for that reason, because the letter can be interpreted as supporting an indefinite pause in that case.

Oh, I didn't read that correctly. Good point.

I am concerned about some other parts of it, that seem to imbue a feeling of "trust in government" that I don't share, and I am concerned that if this letter is successful then governments will get involved in a pretty indiscriminate and corrupt way and then everything will get worse; but my concern is somewhat vague and hard to pin down.

I think it'd be good for me to sleep on it, and see if it seems so bad to sign on to the next time I see it.

Currently, OpenAI has a clear lead over its competitors.[1] This is arguably the safest arrangement as far as race dynamics go, because it gives OpenAI some breathing room in case they ever need to slow down later on for safety reasons, and also because their competitors don't necessarily have a strong reason to think they can easily sprint to catch up.

So far as I can tell, this petition would just be asking OpenAI to burn six months of that lead and let other players catch up. That might create a very dangerous race dynamic, where now you have multiple players neck-and-neck, each with a credible claim to have a chance to get into the lead.

(And I'll add: while OpenAI has certainly made decisions I disagree with, at least they actively acknowledge existential safety concerns and have a safety plan and research agenda. I'd much rather they be in the lead than Meta, Baidu, the Chinese government, etc., all of whom to my knowledge have almost no active safety research and in some cases are actively dismissive of the need for such.)

[1] One might have considered Google/DeepMind to be OpenAI's peer, but after the release of Bard, which is substantially behind GPT-3.5 capabilities, neve

DeepMind might be more cautious about what it releases, and/or developing systems whose power is less legible than GPT. I have no real evidence here, just vague intuitions.

I agree that those are possibilities.

On the other hand, why did news reports[1] suggest that Google was caught flat-footed by ChatGPT and re-oriented to rush Bard to market?

My sense is that Google/DeepMind's lethargy in the area of language models is due to a combination of a few factors:

- They've diversified their bets to include things like protein folding, fusion plasma control, etc. which are more application-driven and not on an AGI path.

- They've focused more on fundamental research and less on productizing and scaling.

- Their language model experts might have a somewhat high annual attrition rate.

- I just looked up the authors on Google Brain's Attention is All You Need, and all but one have left Google after 5.25 years, many for startups, and one for OpenAI. That works out to an annual attrition of 33%.

- For DeepMind's Chinchilla paper, 6 of 22 researchers have been lost in 1 year: 4 to OpenAI and 2 to startups. That's 27% annual attrition.

- By contrast, 16 or 17 of the 30 authors on the GPT-3 paper seem to still be at OpenAI, 2.75 years later, which works out to 20% annual attrition. Notably, of those who have left, not a one has left for Google or DeepMind, though interestingly,
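For anyone who wants to check the attrition arithmetic above, here is a small sketch. It assumes the percentages are compound annual rates, i.e. the constant yearly rate r for which total × (1 − r)^years equals the number still at the lab. That is my reading of how the figures were derived rather than something the comment states, and the count of 8 authors on Attention Is All You Need is likewise my assumption.

```python
def annual_attrition(remaining: int, total: int, years: float) -> float:
    """Constant yearly attrition rate r with total * (1 - r) ** years == remaining."""
    if total <= 0 or years <= 0 or not 0 < remaining <= total:
        raise ValueError("need 0 < remaining <= total and years > 0")
    return 1.0 - (remaining / total) ** (1.0 / years)


# (remaining, total, years), taken from the figures quoted above.
# The total of 8 authors for "Attention Is All You Need" is an assumption;
# the comment only says "all but one" have left.
cases = {
    "Attention Is All You Need": (1, 8, 5.25),
    "Chinchilla": (22 - 6, 22, 1.0),
    "GPT-3 (16 remaining)": (16, 30, 2.75),
    "GPT-3 (17 remaining)": (17, 30, 2.75),
}

for label, (remaining, total, years) in cases.items():
    print(f"{label}: {annual_attrition(remaining, total, years):.1%}")
```

Under those assumptions the results come out at roughly 33%, 27%, and 19 to 20% respectively, which matches the numbers in the list.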

If a major fraction of all resources at the top 5–10 labs were reallocated to "us[ing] this pause to jointly develop and implement a set of shared safety protocols", that seems like it would be a good thing to me.

However, the letter offers no guidance as to what fraction of resources to dedicate to this joint safety work. Thus, we can expect that DeepMind and others might each devote a couple teams to that effort, but probably not substantially halt progress at their capabilities frontier.

The only player who is effectively being asked to halt progress at its capabilities frontier is OpenAI, and that seems dangerous to me for the reasons I stated above.

The letter feels rushed and leaves me with a bunch of questions.

1. "recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control."

Where is the evidence of this "out-of-control race"? Where is the argument that future systems could be dangerous?

2. "Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders."

These are very different concerns, and listing them together waters down the problem the letter is trying to address. Most of them are deployment questions more than development questions.

3. I like the idea of a six-month collaboration between actors. I also like the policy asks they include.

4. The main impact of this letter would obviously be getting the main actors to halt development (OpenAI, An...

GPT-4 was rushed, and so was the OpenAI plugin store. Things are moving far too fast for comfort. I think we can forgive this response for being rushed. It's good to have some significant opposition working on the brakes to the runaway existential catastrophe train that we've all been put on.

(cross-posting my take from twitter)

(1) I think very dangerous AGI will come eventually, and that we’re extremely not ready for it, and that we’re making slow but steady progress right now on getting ready, and so I’d much rather it come later than sooner.

(2) It’s hard to be super-confident, but I think the “critical path” towards AGI mostly looks like “research” right now, and mostly looks like “scaling up known techniques” starting in the future but not yet.

(3) I think the big direct effect of a moratorium on “scaling up” is substitution: more “research” than otherwise—which is the opposite of what I want. (E.g. “Oh, we're only allowed X compute / data / params? Cool—let's figure out how to get more capabilities out of X compute / data / params!!”)

(4) I'm sympathetic to the idea that some of the indirect effects of the FLI thing might align with my goals, like “practice for later” or “sending a message” or “reducing AI investments” etc. I’m also sympathetic to the fact that a lot of reasonable people in my field disagree with me on (2). But those aren’t outweighing (3) for me. So for my part, I’m not signing, but I’m also not judging those who do.

(5) While I’m here, I want to mor...

I doubt training LLMs can lead to AGI. Fundamental research on the alternative architectures seems to be more dangerous.

I just want to point out that if successful this moratorium would be the fire alarm that otherwise doesn't exist for AGI.

The benefit would be the "industry leaders agree ..." headline, not the actual pause.

This letter is probably significantly net negative due to its impact on capabilities researchers who don't like it. I don't understand why the authors thought it was a good idea. Perhaps they don't realize that there's no possible enforcement of it that could prevent a GPT-4-level model that runs on individual GPUs from being trained? To the people who can do that, it's really obvious, and I don't think they're going to stop; but I could imagine them rushing harder if they think The Law is coming after them.

It's especially egregious because of accepting names without personal verification. That will probably amplify the negative response.

It may not be possible to prevent GPT-4-sized models, but it probably is possible to prevent GPT-5-sized models, if the large companies sign on and don't want it to be public knowledge that they did it. Right?

"Don't do anything for 6 months" is a ridiculous exaggeration. The proposal is to stop training for 6 months. You can do research on smaller models without training the large one.

I agree it is debatable whether the "beyond a reasonable doubt" standard is appropriate, but it seems entirely sane to pause for 6 months and use that time to, for example, discuss which standard is appropriate.

Other arguments you made seem to say "we shouldn't cooperate if the other side defects", and I agree, that's game theory 101, but that's not an argument against cooperating? If you are saying anything more, please elaborate.

This letter seems underhanded and deliberately vague in the worst case, or best case confused.

The one concrete, non-regulatory call for action is, as far as I can tell, "Stop training GPT-5." (I don't know anyone at all who is training a system with more compute than GPT-4, other than OpenAI.) Why stop training GPT-5? It literally doesn't say. Instead, it has a long suggestive string of rhetorical questions about bad things an AI could cause, without actually accusing GPT-5 of any of them.

Which of them would GPT-5 break? Is it "Should we let machines...

...Suppose you are one of the first rats introduced onto a pristine island. It is full of yummy plants and you live an idyllic life lounging about, eating, and composing great works of art (you’re one of those rats from The Rats of NIMH).

You live a long life, mate, and have a dozen children. All of them have a dozen children, and so on. In a couple generations, the island has ten thousand rats and has reached its carrying capacity. Now there’s not enough food and space to go around, and a certain percent of each new generation dies in order to keep the popula

Not sure where you're going with this. It seems to me that political methods (such as petitions, public pressure, threat of legislation) can be used to restrain the actions of large/mainstream companies, and that training models one or two OOM larger than GPT-4 will be quite expensive and may well be done mostly or exclusively within large companies of the sort that can be restrained in this sort of way.

Noting that I share my extended thoughts here: https://www.lesswrong.com/posts/FcaE6Q9EcdqyByxzp/on-the-fli-open-letter

Reported on the BBC today. It also appeared as the first news headline on BBC Radio 4 at 6pm today.

ETA: Also the second item on Radio 4 news at 10pm (second to King Charles' visit to Germany), and the first headline on the midnight news (the royal visit was second).

But but think of the cute widdle baby shoggoths! https://www.reddit.com/r/StableDiffusion/comments/1254aso/sam_altman_with_baby_gpt4/

It seems unlikely that AI labs are going to comply with this petition. Supposing that this is the case, does this petition help, hurt, or have no impact on AI safety, compared to the counterfactual where it doesn't exist?

All possibilities seem plausible to me. Maybe it's ignored so it just doesn't matter. Maybe it burns political capital or establishes a norm of "everyone ignores those silly AI safety people and nothing bad happens". Maybe it raises awareness and does important things for building the AI safety coalition.

Modeling social reality is always hard, but has there been much analysis of what messaging one ought to use here, separate from the question of what policies one ought to want?

Given the near certainty that Russia, China, and perhaps some other despotic regimes will ignore this:

1. Does it help at all?

2. Could it actually make the world less safe (if one of these countries gains a significant military AI lead as a result)?

Why do you think China will ignore it? This is "it's going too fast, we need some time", and China also needs some time for all the same reasons. For example, China is censoring Google with the Great Firewall, so if Google is to be replaced by ChatGPT, they need time to prepare to censor ChatGPT. The Great Firewall wasn't built in a day. See "Father of China's Great Firewall raises concerns about ChatGPT-like services" from SCMP.

China also seems to be quite far behind the west in terms of LLM

This doesn't match my impression. For example, THUDM (Tsinghua University Data Mining lab) is one of the most impressive groups in the world in terms of actually doing large LLM training runs.

Demis Hassabis didn't sign the letter, but has previously said that DeepMind potentially has a responsibility to hit pause at some point:

'Avengers assembled' for AI Safety: Pause AI development to prove things mathematically

Hannah Fry (17:07):

You said you've got this sort of 20-year prediction and then simultaneously where society is in terms of understanding and grappling with these ideas. Do you think that DeepMind has a responsibility to hit pause at any point?

Demis Hassabis (17:24):

...Potentially. I always imagine that as we got closer to the sort of gray

Since nobody outside of OpenAI knows how GPT-4 works, nobody has any idea whether any specific system will be "more powerful than GPT-4". This request is therefore kind of nonsensical. Unless, of course, the letter is specifically targeted at OpenAI and nobody else.

It leaves a bad taste in my mouth that most of the signatories have simply been outcompeted, and would likely not have signed this if they had not been.

More importantly, I find it very unlikely that an international, comprehensive moratorium could be implemented. We had that debate a long time ago already.

What I can envision is OpenAI, and LLMs, being blocked, ultimately in favour of other companies and other AIs. And I increasingly do not think that is a good thing.

Compared with their competitors, I think OpenAI is doing a better job on the safety front, ...

The LessWrong comments here are generally (quite) (brutal), and I think I disagree, which I'll try to outline very briefly below. But I think it may be generally more fruitful here to ask some questions I had to break down the possible subpoints of disagreement as to the goodness of this letter.

I expected some negative reaction because I know that Elon is generally looked down upon by the EAs that I know, with some solid backing to those claims when it comes to AI given that he cofounded OpenAI, but with the (immediate) (press) (attention) it's getti...

Signatories include Yoshua Bengio, Stuart Russell, Elon Musk, Steve Wozniak, Yuval Noah Harari, Andrew Yang, Connor Leahy (Conjecture), and Emad Mostaque (Stability).

Edit: covered in NYT, BBC, WaPo, NBC, ABC, CNN, CBS, Time, etc. See also Eliezer's piece in Time.

Edit 2: see FLI's FAQ.

Edit 3: see FLI's report Policymaking in the Pause.

Edit 4: see AAAI's open letter.