EDIT: Read a summary of this post on Twitter

Working in the field of genetics is a bizarre experience. No one seems to be interested in the most interesting applications of their research.

We’ve spent the better part of the last two decades unravelling exactly how the human genome works and which specific letter changes in our DNA affect things like diabetes risk or college graduation rates. Our knowledge has advanced to the point where, if we had a safe and reliable means of modifying genes in embryos, we could literally create superbabies. Children that would live multiple decades longer than their non-engineered peers, have the raw intellectual horsepower to do Nobel prize worthy scientific research, and very rarely suffer from depression or other mental health disorders.

The scientific establishment, however, seems to not have gotten the memo. If you suggest we engineer the genes of future generations to make their lives better, they will often make some frightened noises, mention “ethical issues” without ever clarifying what they mean, or abruptly change the subject. It’s as if humanity invented electricity and decided the only interesting thing to do with it was make washing machines.

I didn’t understand just how dysfunctional things were until I attended a conference on polygenic embryo screening in late 2023. I remember sitting through three days of talks at a hotel in Boston, watching prominent tenured professors in the field of genetics take turns misrepresenting their own data and denouncing attempts to make children healthier through genetic screening. It is difficult to convey the actual level of insanity if you haven’t seen it yourself.

As a direct consequence, there is low-hanging fruit absolutely everywhere. You can literally do novel groundbreaking research on germline engineering as an internet weirdo with an obsession and sufficient time on your hands. The scientific establishment is too busy with their washing machines to think about light bulbs or computers.

This blog post is the culmination of a few months of research by myself and my cofounder into the lightbulbs and computers of genetics: how to do large scale, heritable editing of the human genome to improve everything from diabetes risk to intelligence. I will summarize the current state of our knowledge and lay out a technical roadmap examining how the remaining barriers might be overcome.

We’ll begin with the topic of the insane conference in Boston; embryo selection.

How to make (slightly) superbabies

Two years ago, a stealth mode startup called Heliospect began quietly offering parents the ability to have genetically optimized children.

The proposition was fairly simple; if you and your spouse went through IVF and produced a bunch of embryos, Heliospect could perform a kind of genetic fortune-telling.

They could show you each embryo’s risk of diabetes. They could tell you how likely each one was to drop out of high school. They could even tell you how smart each of them was likely to be.

After reading each embryo's genome and ranking them according to the importance of each of these traits, the best would be implanted in the mother. If all went well, 9 months later a baby would pop out that has a slight genetic advantage relative to its counterfactual siblings.

The service wasn’t perfect; Heliospect’s tests could give you a rough idea of each embryo’s genetic predispositions, but nothing more.

Still, this was enough to increase your future child’s IQ by around 3-7 points or increase their quality adjusted life expectancy by about 1-4 years. And though Heliospect wasn’t the first company to offer embryo selection to reduce disease risk, they were the first to offer selection specifically for enhancement.

The curious among you might wonder why the expected gain from this service is “3-7 IQ points”. Why not more? And why the range?

There are a few variables impacting the expected benefit, but the biggest is the number of embryos available to choose from.

Each embryo has a different genome, and thus different genetic predispositions. Sometimes during the process of sperm and egg formation, one of the embryos will get lucky and a lot of the genes that increase IQ will end up in the same embryo.

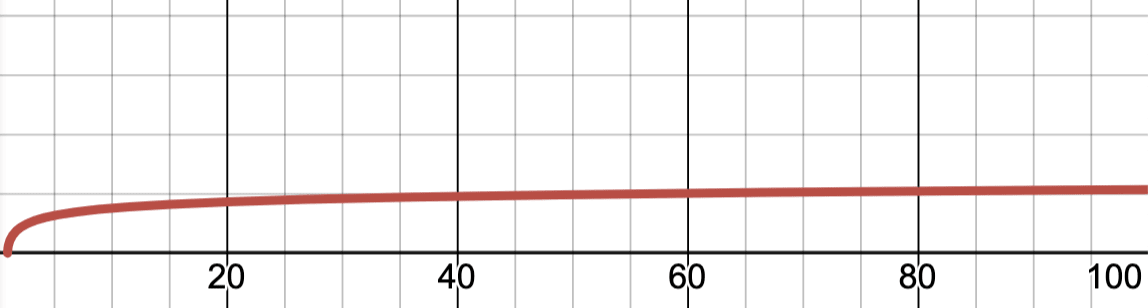

The more embryos, the better the best one will be in expectation. There is a “scaling law” describing how good you can expect the best embryo to be based on how many embryos you’ve produced.

With two embryos, the best one would have an expected IQ about 2 points above parental average. WIth 10 the best would be about 6 points better.

But the gains plateau quickly after that. 100 embryos would give a gain of 10.5 points, and 200 just 11.5.

If you graph IQ gain as a function of the number of embryos available, it pretty quickly becomes clear that we simply aren’t going to make any superbabies by increasing the number of embryos we choose from.

The line goes nearly flat after ~40 or so. If we really want to unlock the potential of the human genome, we need a better technique.

How to do better than embryo selection

When we select embryos, it’s a bit like flipping coins and hoping most of them land on heads. Even if you do this a few dozen times, your best run won’t have that many more heads than tails.

If we could somehow directly intervene to make some coins land on heads, we could get far, far better results.

The situation with genes is highly analogous; if we could swap out a bunch of the variants that increase cancer risk for ones that decrease cancer risk, we could do much better than embryo selection.

Gene editing is the perfect tool to make this happen. It lets us make highly specific changes at known locations in the genome where simple changes like swapping one base pair for another is known to have some positive effect.

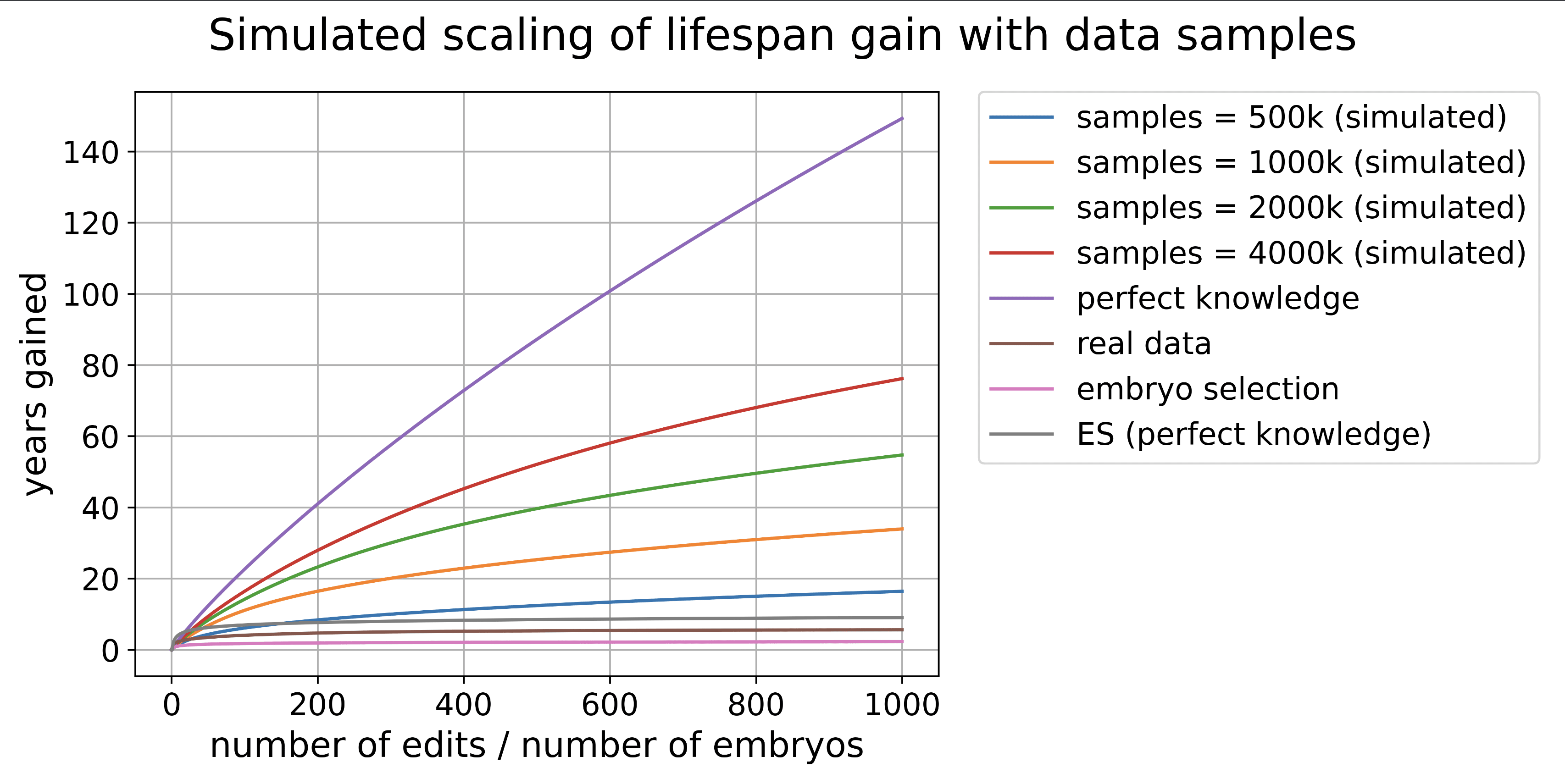

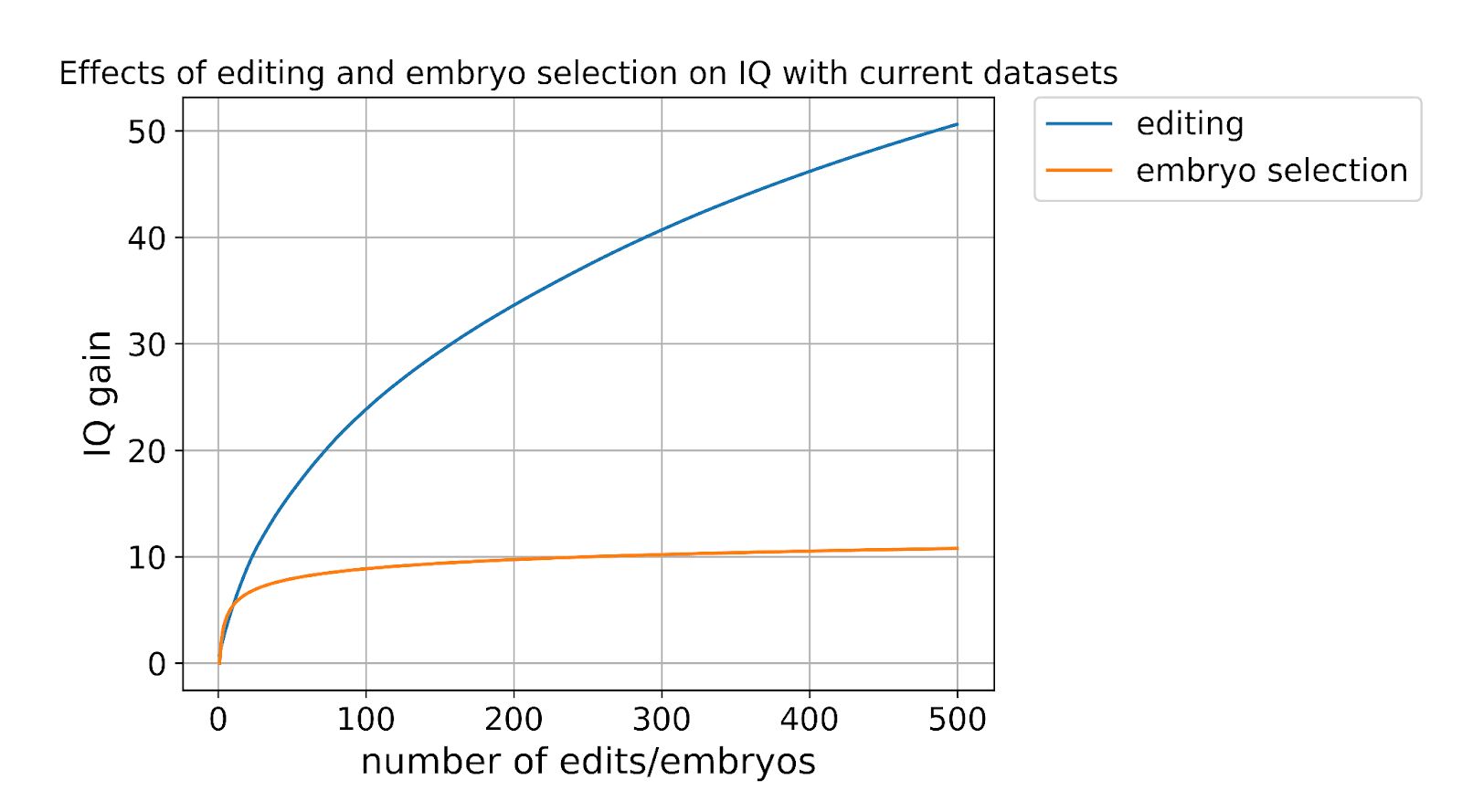

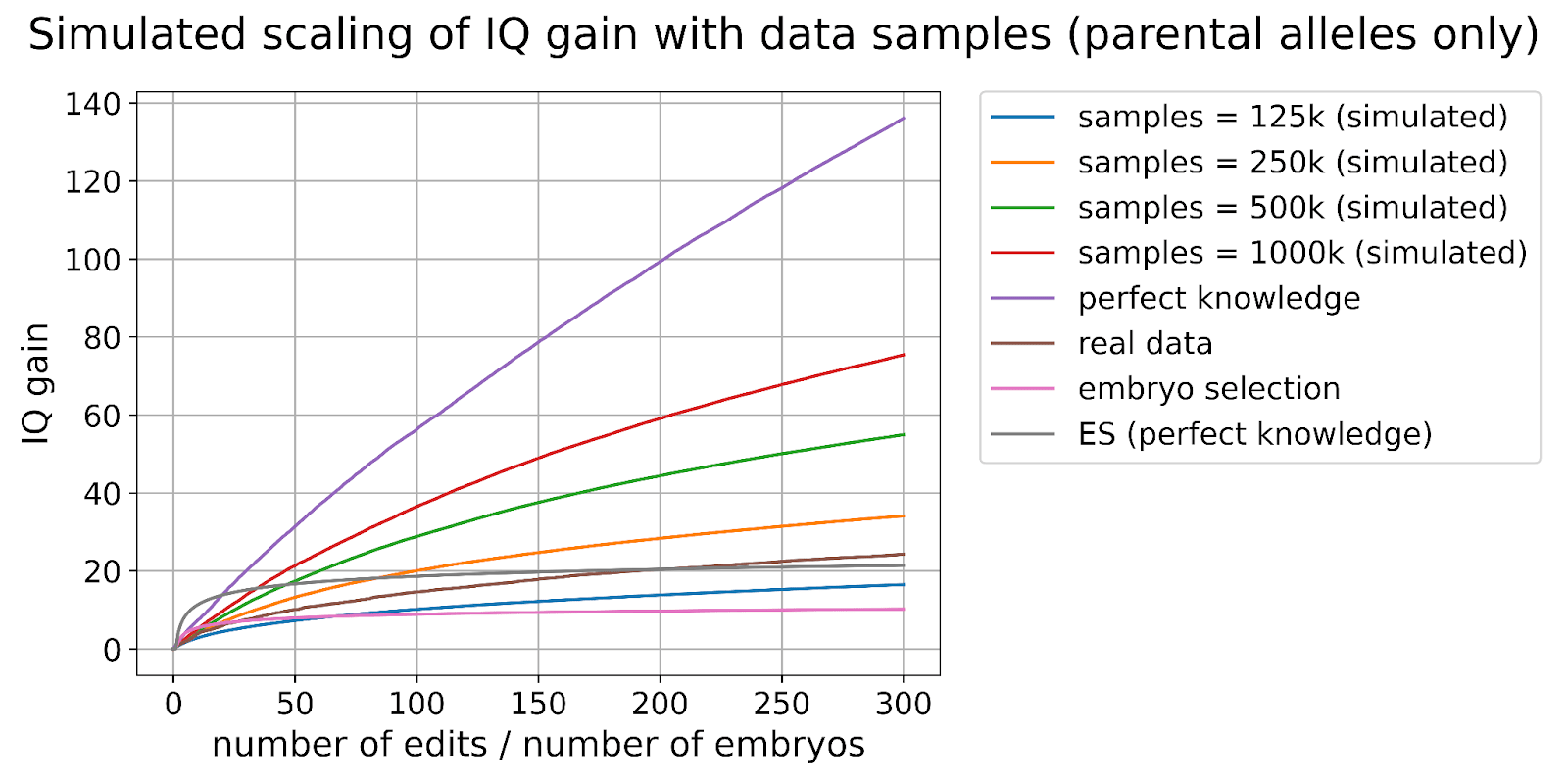

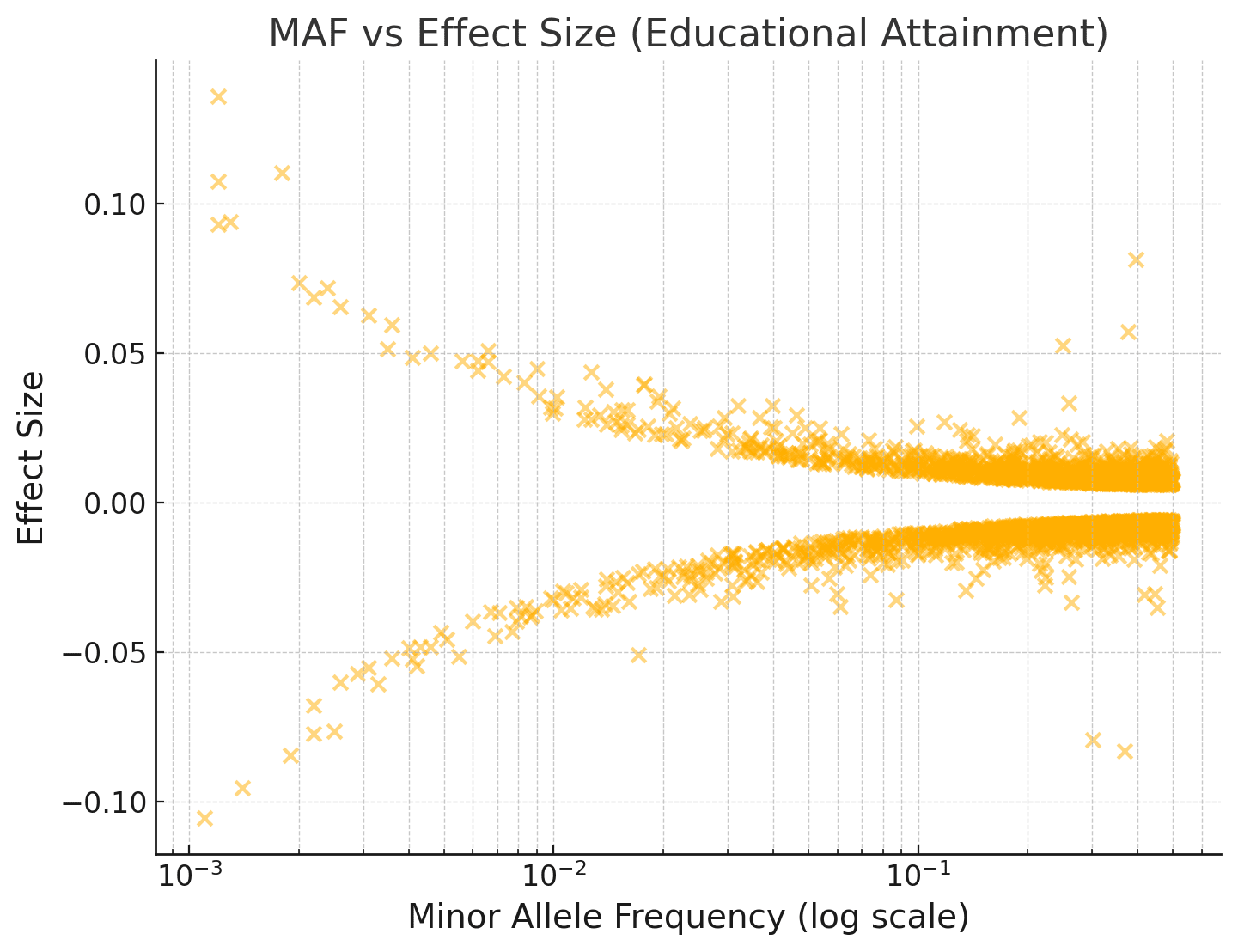

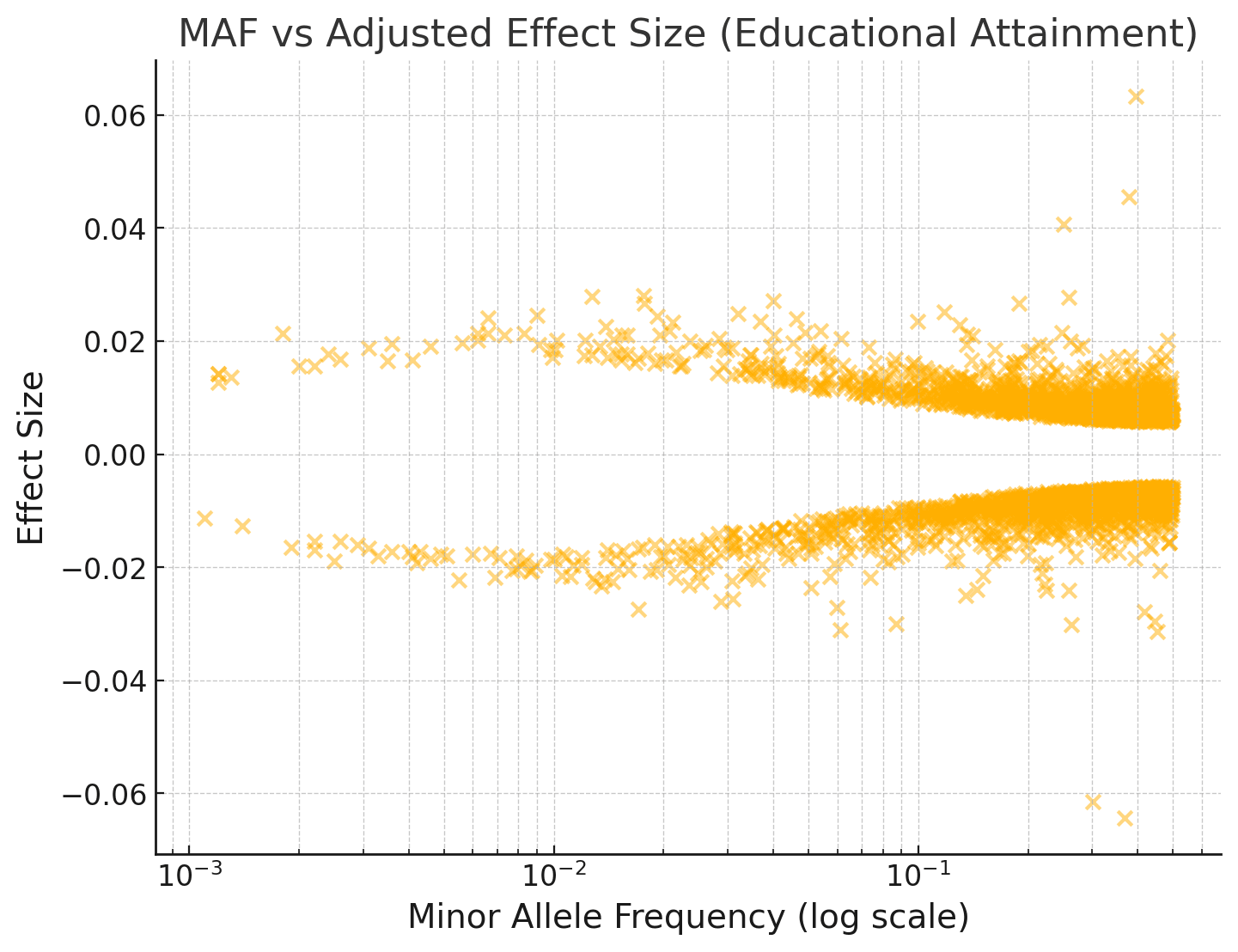

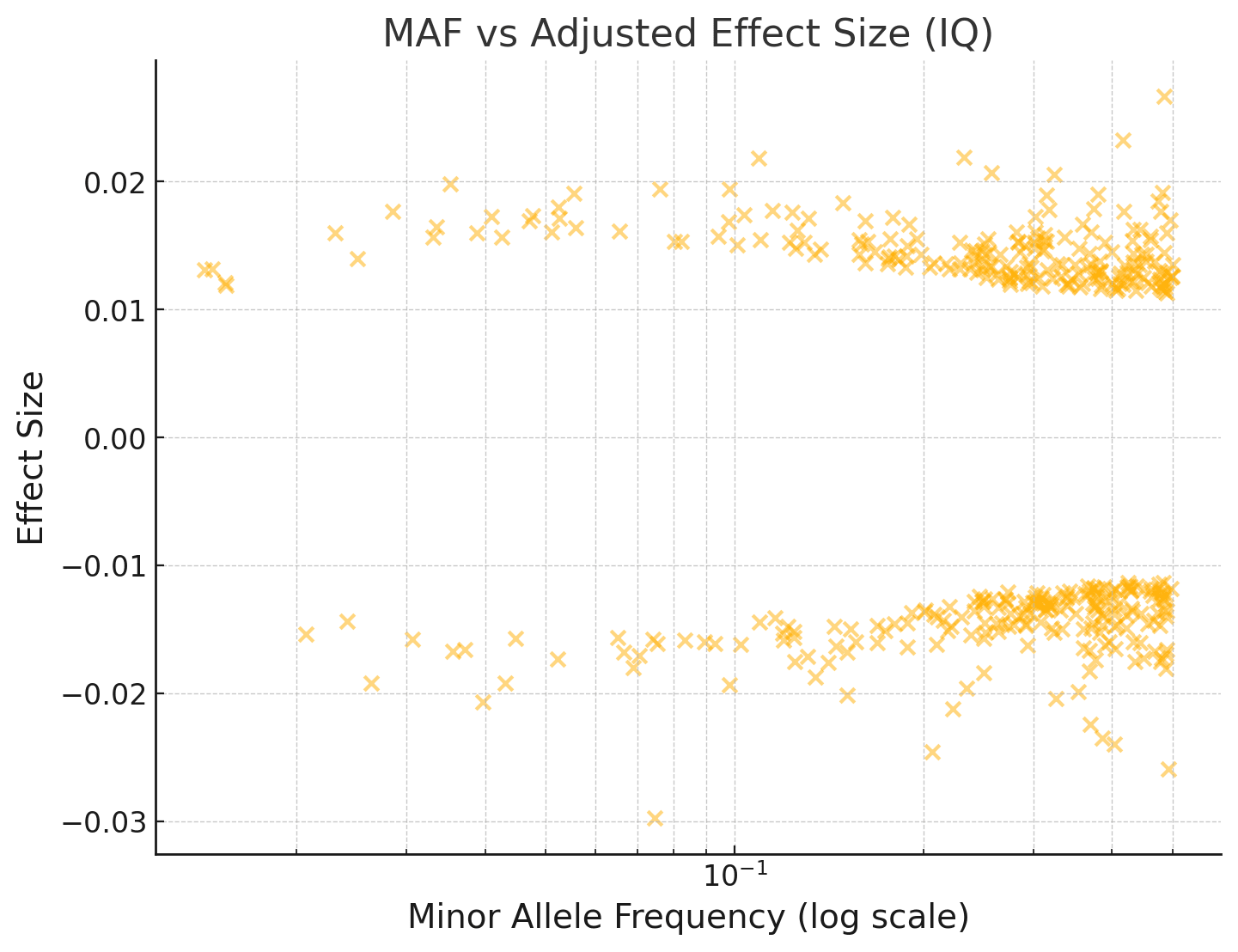

Let’s look again at the IQ gain graph from embryo selection and compare it with what could be achieved by editing using currently available data.

See the appendix for a full description of how this graph was generated and the assumptions we make.

If we had 500 embryos, the best one would have an IQ about 12 points above that of the parents. If we could make 500 gene edits, an embryo would have an IQ about 50 points higher than that of the parents.

Gene editing scales much, much better than embryo selection.

Some of you might be looking at the data above and wondering “well what baseline are we talking about? Are we talking about a 60 IQ point gain for someone with a starting IQ of 70?”

The answer is the expected gain is almost unaffected by the starting IQ. The human gene pool has so much untapped genetic potential that even the genome of a very, very smart person still has thousands of IQ decreasing variants that could potentially be altered.

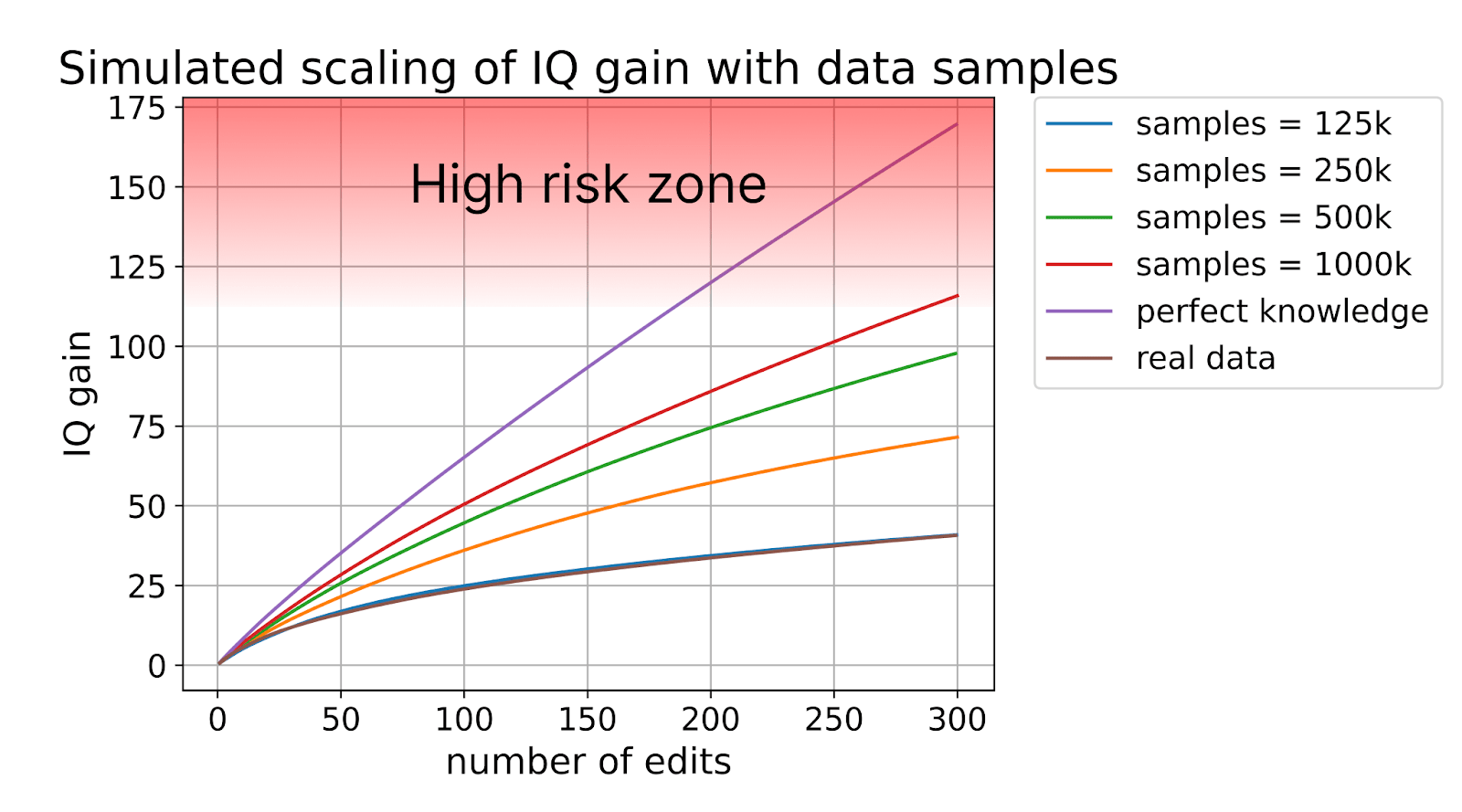

What’s even crazier is this is just the lower bound on what we could achieve. We haven’t even used all the data we could for fine-mapping, and if any of the dozen or so biobanks out there decides to make an effort to collect more IQ phenotypes the expected gain would more than double.

Like machine learning, gene editing has scaling laws. With more data, you can get a larger improvement out of the same number of edits. And with a sufficiently large amount of data, the benefit of gene editing is unbelievably powerful.

Already with just 300 edits and a million genomes with matching IQ scores, we could make someone with a higher predisposition towards genius than anyone that has ever lived.

This won’t guarantee such an individual would be a genius; there are in fact many people with exceptionally high IQs who don’t end up making nobel prize worthy discoveries.

But it will significantly increase the chances; Nobel prize winners (especially those in math and sciences) tend to have IQs significantly above the population average.

It will make sense to be cautious about pushing beyond the limit of naturally occurring genomes since data about the side-effects of editing at such extremes is quite limited. We know from the last few millennia of selective breeding in agriculture and husbandry that it’s possible to push tens of standard deviations beyond any naturally occurring genome (more on this later), but animal breeders have the advantage of many generations of validation over which to refine their selection techniques. For the first generation of enhanced humans, we’ll want to be at least somewhat conservative, meaning we probably don’t want to push much outside the bounds of natural human variation.

Maximum human life expectancy

Perhaps even more than intelligence, health is a near universal human good. An obvious question when discussing the potential of gene editing is how large of an impact we could have on disease risk or longevity if we edited to improve them.

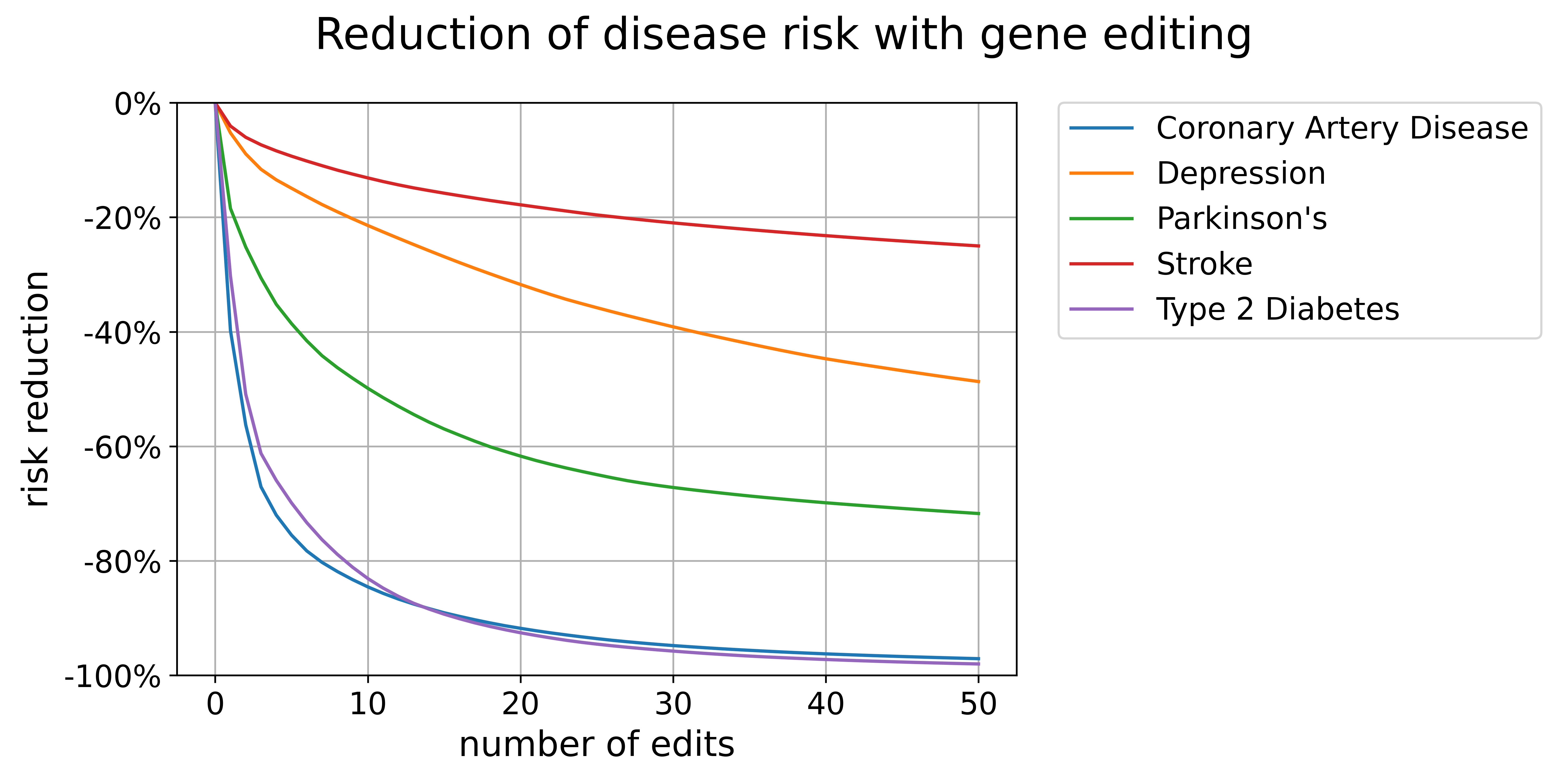

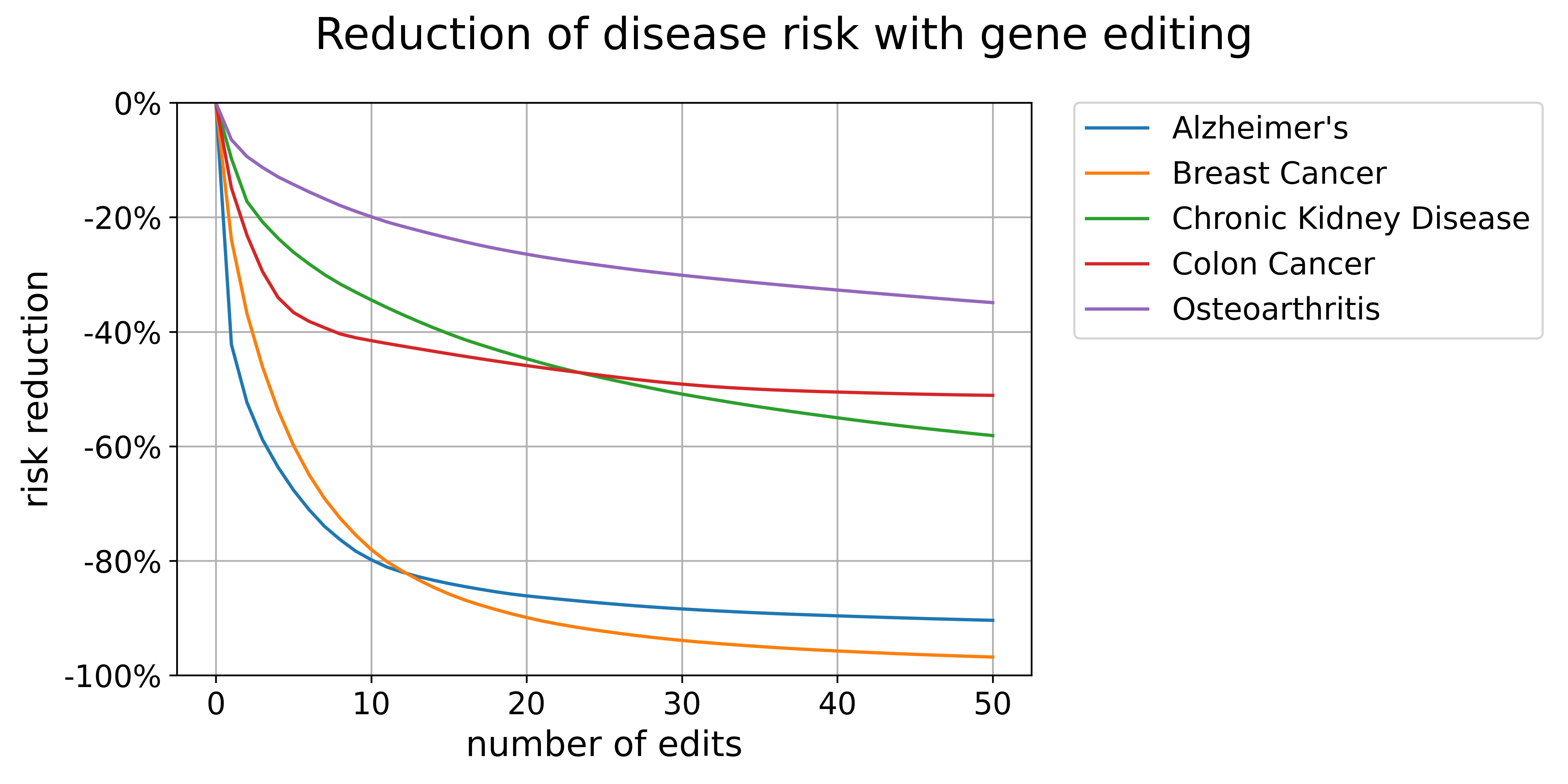

The size of reduction we could get from editing varies substantially by disease. Some conditions, like coronary artery disease and diabetes, can be nearly eliminated with just a handful of edits.

Others, like stroke and depression take far more edits and can’t be targeted quite as effectively.

You might wonder why there’s such a large difference between conditions. Perhaps this is a function of how heritable these diseases are. But that turns out to be only part of the story.

The other part is the effect size of common variants. Some diseases have several variants that are both common among the population and have huge effect sizes.

And the effect we can have on them with editing is incredible. Diabetes, inflammatory bowel disease, psoriasis, Alzheimer’s, and multiple sclerosis can be virtually eliminated with less than a dozen changes to the genome.

Interestingly, a large proportion of conditions with this property of being highly editable are autoimmune diseases. Anyone who knows a bit about human evolution over the last ten thousand years should not be too surprised by this; there has been incredibly strong selection pressure on the human immune system during that time. Millenia of plagues have made genetic regions encoding portions of the human immune system the single most genetically diverse and highly selected regions in the human genome.

As a result the genome is enriched for “wartime variants”; those that might save your life if the bubonic plague reemerges, but will mess you up in “peacetime” by giving you a horrible autoimmune condition.

This is, not coincidentally, one reason to not go completely crazy selecting against risk of autoimmune diseases: we don't want to make ourselves that much more vulnerable to once-per-century plagues. We know for a fact that some of the variants that increase their risk were protective against ancient plagues like the black death (see the appendix for a fuller discussion of this).

With most trait-affecting genetic variants, we can make any trade-offs explicit; if some of the genetic variants that reduce the risk of hypertension increase the risk of gallstones, you can explicitly quantify the tradeoff.

Not so with immune variants that protect against once-per-century plagues. I dig more into how to deal with this tradeoff in the appendix but the TL;DR is that you don’t want to “minimize” risk of autoimmune conditions. You just want to reduce their risk to a reasonable level while maintaining as much genetic diversity as possible.

Is everything a tradeoff?

A skeptical reader might finish the above section and conclude that any gene editing, no matter how benign, will carry serious tradeoffs.

I do not believe this to be the case. Though there is of course some risk of unintended side-effects (and we have particular reason to be cautious about this for autoimmune conditions), this is not a fully general counterargument to genetic engineering.

To start with, one can simply look at humans and ask “is genetic privilege a real thing?”

And the answer to anyone with eyes is obviously “yes”. Some people are born with the potential to be brilliant. Some people are very attractive. Some people can live well into their 90s while smoking cigarettes and eating junk food. Some people can sleep 4 hours a night for decades with no ill effects.

And this isn’t just some environmentally induced superpower either. If a parent has one of these advantages, their children are significantly more likely than a stranger to share it. So it is obvious that we could improve many things just by giving people genes closer to those of the most genetically privileged.

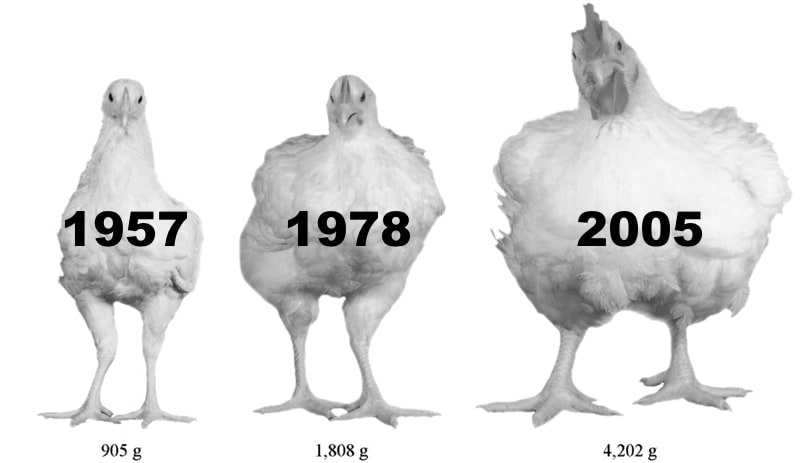

But there is evidence from animal breeding that we can go substantially farther than the upper end of the human range when it comes to genetic engineering.

Take chickens. While literally no one would enjoy living the life of a modern broiler chicken, it is undeniable that we have been extremely successful in modifying them for human needs.

We’ve increased the weight of chickens by about 40 standard deviations relative to their wild ancestors, the red junglefowl. That’s the equivalent of making a human being that is 14 feet tall; an absurd amount of change. And these changes in chickens are mostly NOT the result of new mutations, but rather the result of getting all the big chicken genes into a single chicken.

Some of you might point out that modern chickens are not especially healthy. And that’s true! But it’s the result of a conscious choice on the part of breeders who only care about health to the extent that it matters for productivity. The health/productivity tradeoff preferences are much, much different for humans.

So unless the genetic architecture of human traits is fundamentally different from those of cows, chickens, and all other domesticated animals (and we have strong evidence this is not the case), we should in fact be able to substantially impact human traits in desirable ways and to (eventually) push human health and abilities to far beyond their naturally occurring levels.

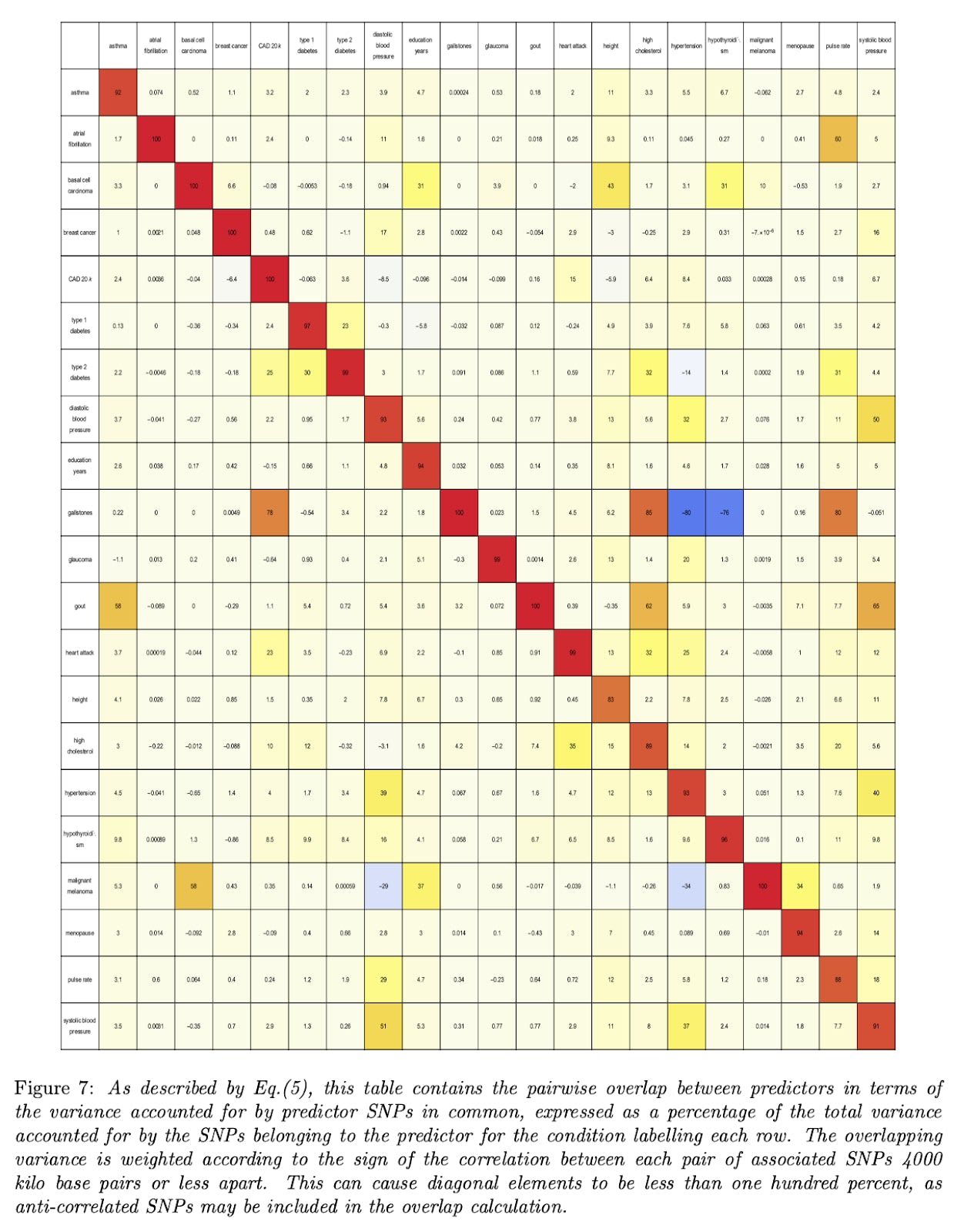

But we can do even better than these vague arguments. Suppose you’re worried that if we edit genes to decrease the risk of one disease, we might inadvertently increase the risk of another. To see how big of an issue this might be, let’s look at a correlation matrix of the genetic variants involved in determining complex disease risks like diabetes and breast cancer:

With a few notable exceptions, there is not very much correlation between different diseases. Most disease have a genetic correlation of between 0 and 5%.

And the correlations that DO exist are mostly positive. That's why most of the boxes are yellowish instead of bluish. Editing embryos to reduce the risk of one disease usually results in a tiny reduction of others.

To the extent it doesn’t, you can always edit variants to target BOTH diseases. Even if they are negatively correlated, you can still have a positive effect on both.

This kind of pre-emptive editing targeting multiple diseases is where I think this field is ultimately headed. Those of you in the longevity field have long understood that even if we cure one or two of the deadliest conditions like heart disease or cancers, it would only add a couple of years to human life expectancy. Too many other bodily systems are breaking down at around the same time.

But what if we could go after 5 diseases at once? Or ten? What if we stopped thinking about diseases as distinct categories and instead asked ourselves how to directly create a long, healthy life expectancy?

In that case we could completely rethink how we analyze the genetics of health. We could directly measure life expectancy and edit variants that increase it the most.

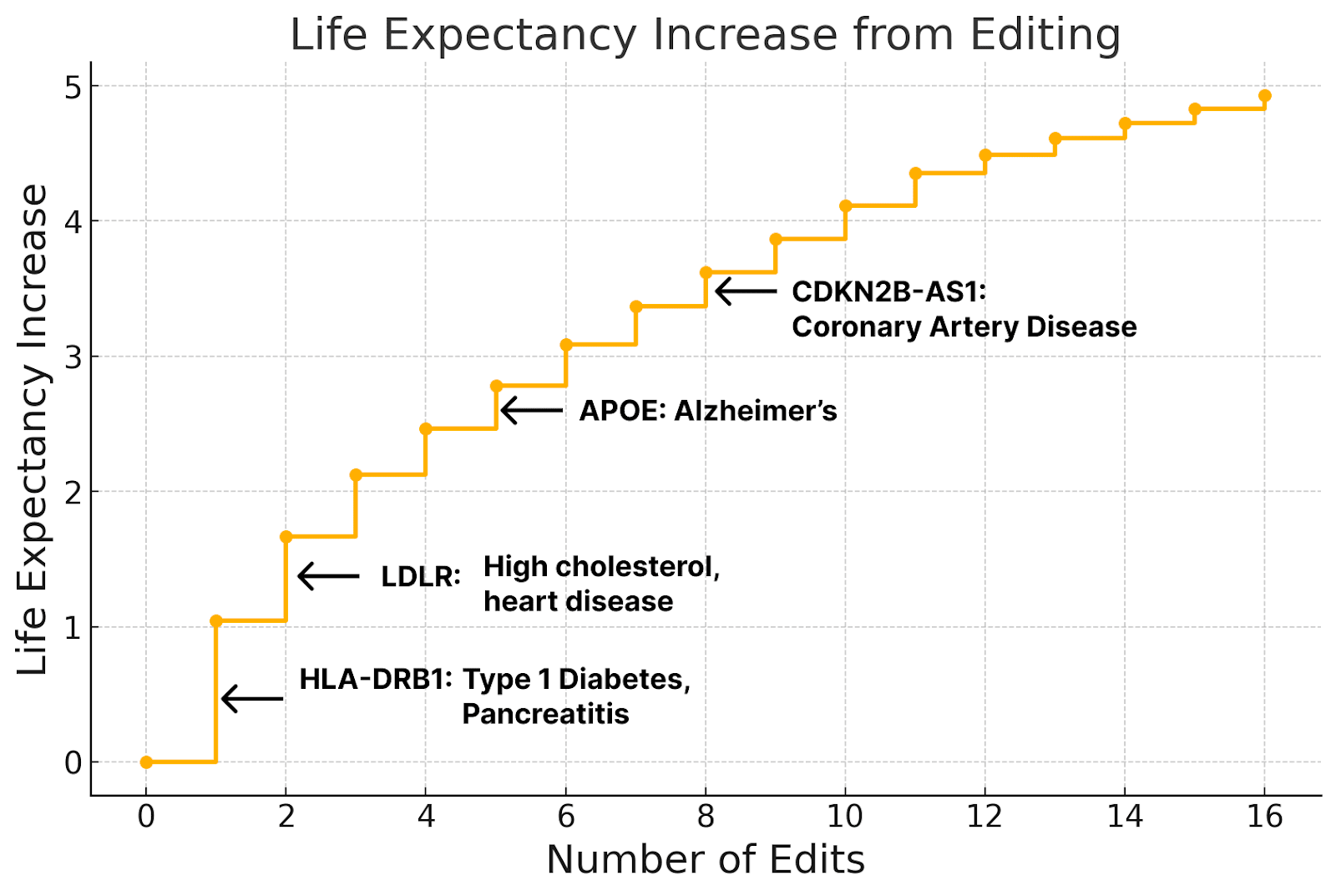

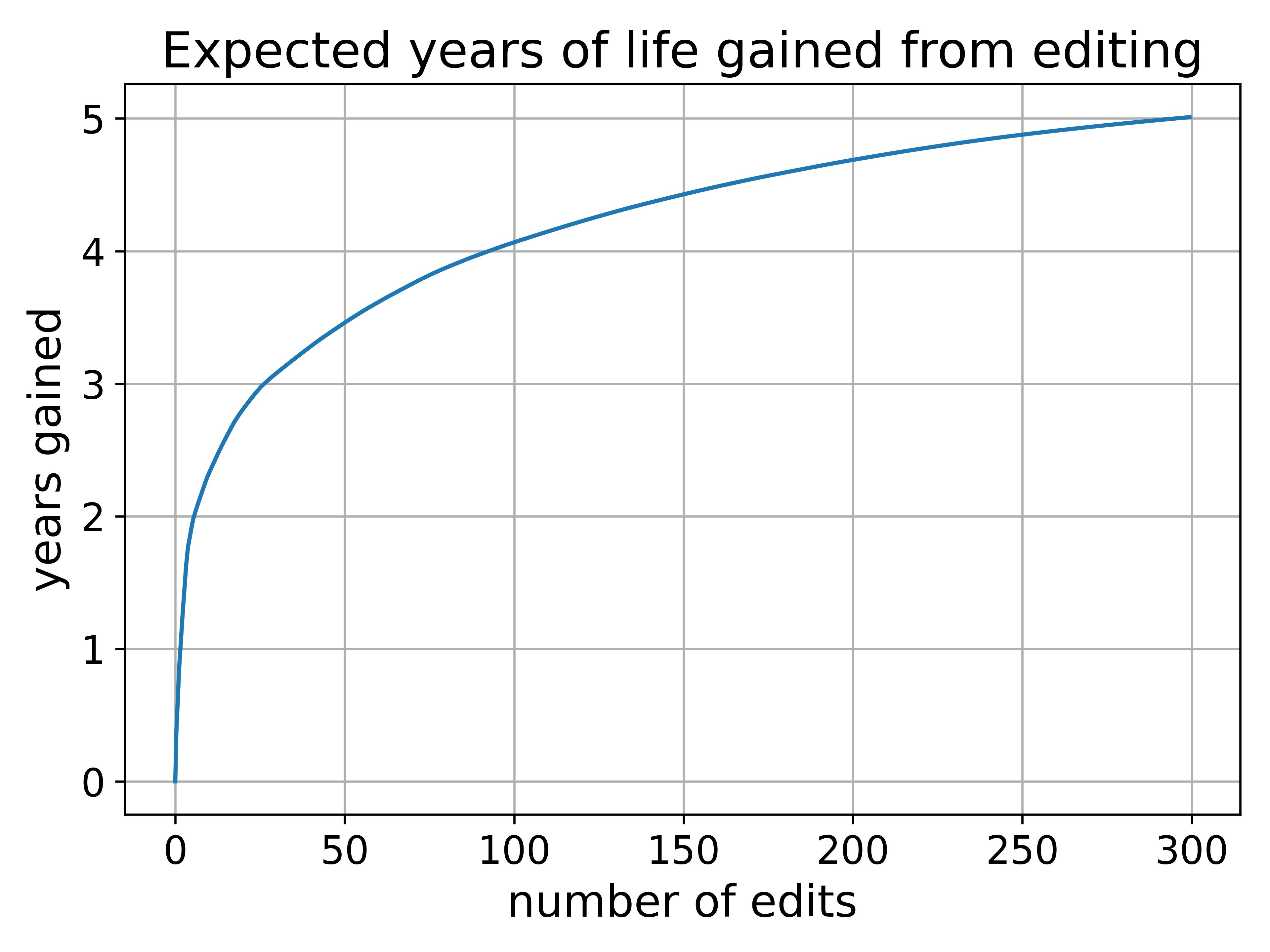

How large of an impact could we have with editing? My cofounder pulled data from the largest genome-wide association study of life expectancy we could find and graphed the results.

4-5 years. That’s how much of an impact we could have on life expectancy with editing. This impact is already on part with the combined effects of eliminating both heart disease and cancer, but it's not exactly an earth-shattering change.

Unlike disease risk or IQ, life expectancy gains are currently much more limited by our lack of data. We just don't have enough genomes from dead people to understand very well which genes are involved in life expectancy.

But if we WERE to get more, the effects of edting could be tremendous.

Life expectancy gain from editing as a function of the amount of data used in the training set. If you want to figure out life expectancy after editing, just add ~77 years to whatever is shown on the graph.

At the upper limit, a life expectancy increase of 75 years is perhaps not too implausible.

I think in practice we would probably not push much beyond 50 additional years of life expectancy simply because that would already take us to the ragged edge of how long naturally occurring humans stick around. But in a few generations we could probably start pushing lifespans in the high hundreds or low 200s.

Some might worry that by targeting life expectancy directly rather than diseases, the result might be people who stay very sick for a very long time. I think that’s extremely unlikely to be the case.

For one thing, even very expensive modern medicine can’t keep very sick people alive for more than a few extra years in most cases. But for another, we can actually zoom in to the graph shown above and LOOK at exactly which variants are being targeted.

When we do, we find that many of the variants that have the largest impact on life expectancy are in fact risk variants for various diseases. In other words, editing for life expectancy directly targets many of the diseases that bring life to a premature end.

This chart also shows why treating aging is so hard; the genetics of breakdown aren’t just related to one organ system or one source of damage like epigenetic aging. They’re ALL OVER the place; in dozens of different organ systems.

Results like these are one of the reasons why I think virtually all current anti-aging treatments are destined to fail. The one exception is tissue replacement, of the kind being pursued by Jean Hebert and a few others.

Gene editing is another exception, though one with more limited power than replacement. We really can edit genes that affect dozens if not hundreds of bodily systems.

So to summarize; we have the data. We have at least a reasonably good probabilistic idea of which genes do what. And we know we can keep side-effects of editing relatively minimal.

So how do we actually do the editing?

How to make an edited embryo

The easiest way to make a gene-edited embryo is very simple; you fertilize an egg with a sperm, then you squirt some editors (specifically CRISPR) onto the embryo. These editors get inside the embryo and edit the genes.

This method has actually been used in human embryos before! In 2018 Chinese scientist He Jiankui created the first ever gene edited embryos by using this technique. All three of the children born from these embryos are healthy 6 years later (despite widespread outrage and condemnation at the time).

Today we could probably do somewhat more interesting editing with this technique by going after multiple genes at once; Alzheimer’s risk, for example, can be almost eliminated with maybe 10-20 edits.

But there are issues. Editors mess up every now and then, and ideally one would hope to VERIFY that there were no unintentional edits made during the editing process.

This CAN be done! If all the cells in the embryo have the same genome, you can take a little sample of the embryo and sequence the genome to figure out whether any unintentional edits were made. And we already do this kind of embryo sampling as a routine part of fertility treatments.

But cells are only guaranteed to have the same genome if all the edits are made before the first cell division. If an edit is made afterwards, then some cells will have it and some cells won’t.

You can mostly guarantee no editing after the first cell division by injecting anti-CRISPR proteins into the embryo before the first cell division. This disables any remaining editors, ensuring all the embryo’s tissues have the same genome and allowing you to check whether you’ve made the edits you want.

The other option is you can just shrug and say “Well if our testing shows that this process produces few enough off-targets, it probably doesn’t matter if some of the cells get edited and some don’t. As long as we don’t mess up anything important it will be fine”. After all, there are already substantial genetic differences between different cells in the same person, so the current evidence suggests it’s not that big of a deal.

But either way, there are fundamental limits to the number of edits you can make this way. You can only cram so many editors inside the cell at once without killing the cell. The cellular repair processes crucial for editing can only work so fast (though there are ways to upregulate some of them). And after a few cell divisions the embryo’s size increases, making delivery of editors to the inner tissues very difficult.

So while you can make perhaps up to 5 edits in that 1 day window (possibly more if my company succeeds with our research), that isn’t nearly enough to have a significant effect on highly polygenic traits like depression risk or intelligence or life expectancy.

Fortunately, there is another way; make the edits in a stem cell, then turn that stem cell into an embryo. And there has been a significant breakthrough made in this area recently.

Embryos from stem cells

On December 22nd 2023, an interesting paper was published in the pages of Cell. Its authors claimed to have discovered what had long been considered a holy grail in stem cell biology: a method of creating naive embryonic stem cells.

I first learned of the paper two weeks later when the paper’s principal investigator, Sergiy Velychko, left a comment about the work on my LessWrong blog post.

It’s not often that I have a physical response to a research paper, but this was one of the few exceptions. Goosebumps; they did what?? Is this actually real?

Velychko and his collaborators had discovered that by modifying a single amino acid in one of the proteins used to create stem cells, they could create a more powerful type of stem cell capable of giving rise to an entirely new organism. And unlike previous techniques, Velychko’s wasn’t just limited to mice and rats; it seemed to work in all mammals including humans.

If Velychko’s technique works as well in primates as the early data suggests, it could enable gene editing on a previously impossible scale. We could make dozens or even hundreds of edits in stem cells, then turn those stem cells into embryos. Once we can do this, germline gene editing will go from being a niche tool useful for treating a handful of diseases, to perhaps the most important technology ever developed.

Iterated CRISPR

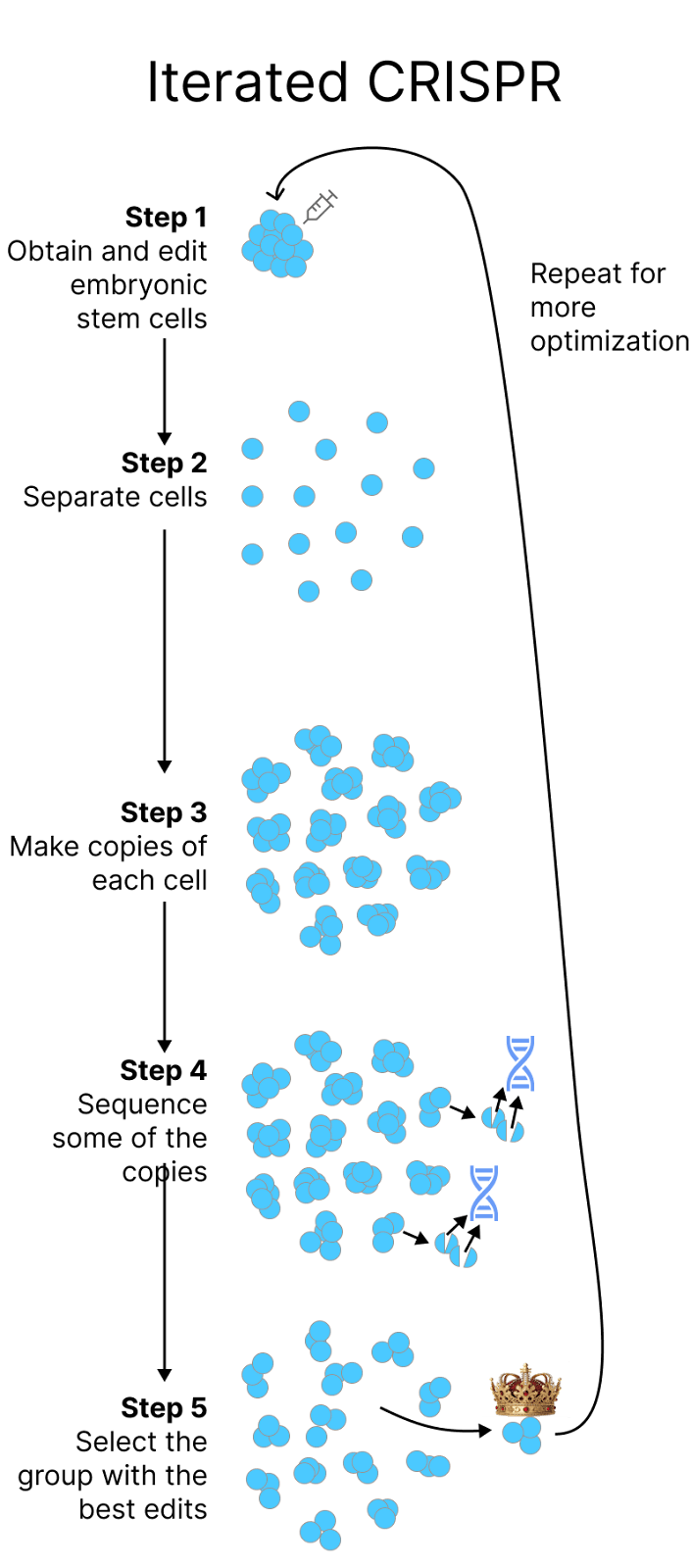

To explain why, we need to return to the method used by He Jiankui to make his embryos. The main limitation with Jiankui’s technique is the limited time window during which edits can be made, and the inability to go back and fix mistakes or “weed out” cells that are damaged by the editors.

With Jiankui’s technique, all edits have to be made in the first 24 hours, or at most the first few days. Mistakes can’t be corrected, and any serious ones do irreparable damage to the embryo.

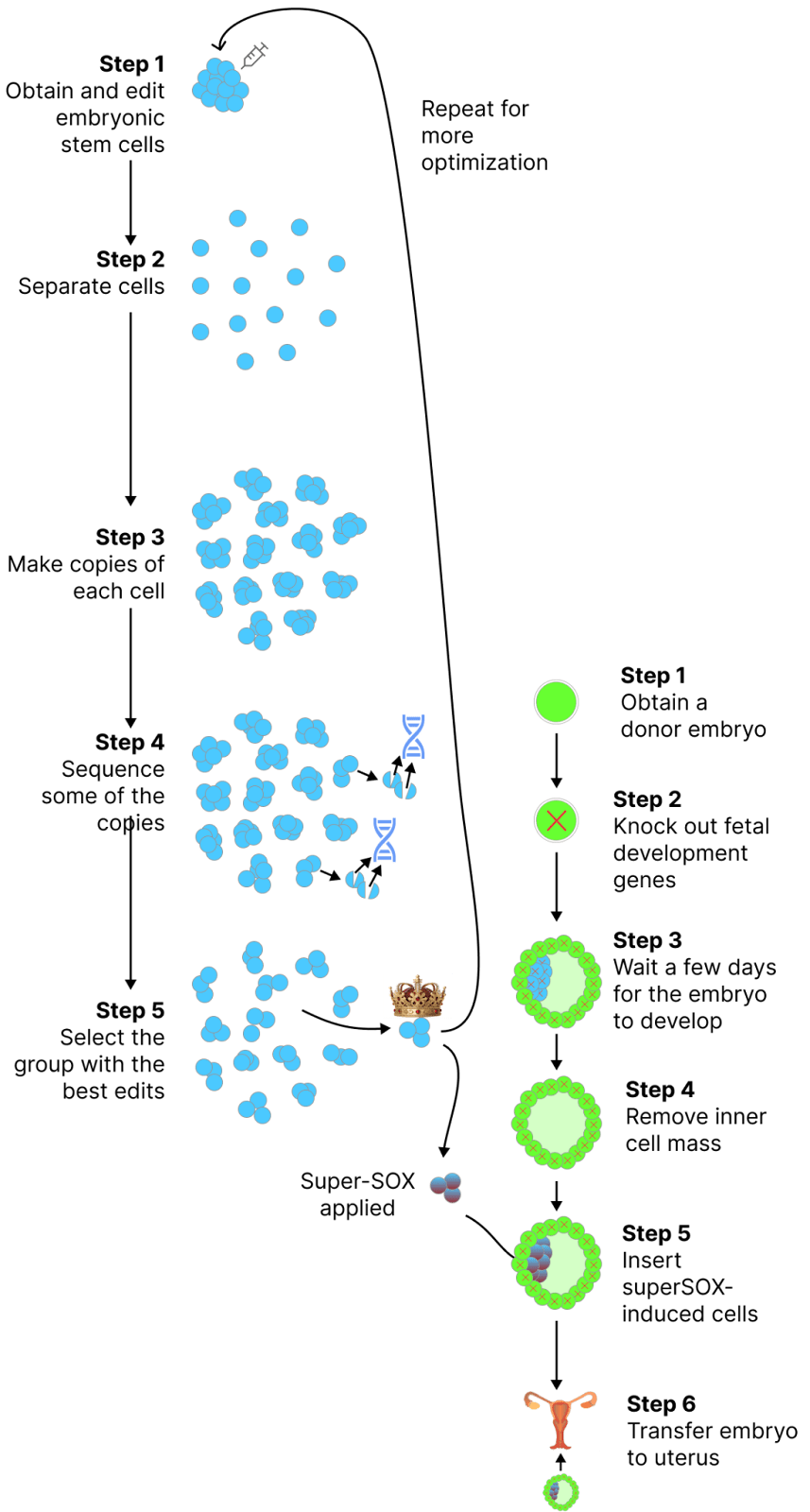

If we could somehow grow the cells separately, we could take our time making edits and VERIFY them all before implanting an embryo. We could edit,make copies of the cells, verify the edits, then make more edits, all in a loop. A protocol might look something like the following:

However, there’s one issue with the above protocol; the moment you remove stem cells from an embryo and begin to grow them, they lose their developmental potential. The stem cells become “primed”, meaning they can no longer form a complete organism.

So even if you are able to edit a bunch of genes in your stem cells to decrease heart attack risk, increase intelligence and decrease depression risk, it doesn’t matter. Your super cells are just that; they can’t make a baby.

And until late 2023, this is where the field was stuck.

Then along came Sergiy Velychko.

Sergiy Velychko and the story of Super-SOX

The story of his discovery, what it is, how it works, and how it was made, is one of the most interesting I’ve ever stumbled across.

In the early 2020s, Sergiy was a post doc at the Max Planck Institute in Germany where he was working on research related to stem cells.

Stem cells are normally created by “turning on” four proteins inside a skin cell. These proteins are called “Yamanaka factors” after the Japanese scientist who discovered them. When they are turned on, the gene expression of the skin cell is radically altered, changing it into a stem cell.

Sergiy had been experimenting with modifications to one particular Yamanaka factor named Oct4. He was trying to increase the efficiency with which he could convert skin cells into stem cells. Normally it is abysmally bad; less than 1%.

Unfortunately, very few of his experiments had yielded anything interesting. Most of his modifications to Oct4 just made it worse. Many broke it completely.

After a few years of running into one dead end after another, Sergiy gave up and moved on to another project converting skin cells into blood cells.

He ran a series of experiments involving a protein called Sox17 E57K, a mutant of Sox17 known for its surprising ability to make stem cells (normal Sox17 can’t do this). Sergiy wanted to see if he could combine the mutant Sox17 with one of his old broken Oct4 mutants to directly make blood cells from skin.

To prove that the combination worked, Sergiy needed to set up a control group. Specifically he needed to show that the combination did NOT produce stem cells. Without this control group there would be no way to prove that he was making skin cells DIRECTLY into blood cells instead of making them into stem cells which became blood cells afterwards.

This should have been easy. His previous work had shown the Oct4 mutant wasn’t capable of making stem cells, even when combined with all the other normal reprogramming factors.

But something very surprising happened; the control group failed. The broken Oct4, which he had previously shown to be incapable of making stem cells, was doing just that.

What is going on?

Most scientists would have chalked up this outcome to contamination or bad experimental setup, or perhaps some weird quirk of nature that wasn’t particularly interesting. Indeed many of Sergiy’s colleagues who he informed of the result found it uninteresting.

So what if you could make stem cells with a weird combination of proteins? It was still less efficient than normal Yamanaka factors and there didn’t seem to be any very compelling reasons to believe it was worth looking into.

But Sergiy felt differently. He had spent enough time studying the structure and functionality of Yamanaka factors to realize this result indicated something much deeper and stranger was going on; somehow the mutant Sox17 was “rescuing” the Oct4 mutant.

But how?

Determined to understand this better, Sergiy began a series of experiments. Piece by piece he began swapping parts of the mutant Sox17 protein into Sox2, the normal reprogramming factor, trying to better understand what exactly made the mutant Sox17 so special.

Sox17 differs in many places from Sox2, so it was reasonable to assume that whatever made it special involved multiple changes.

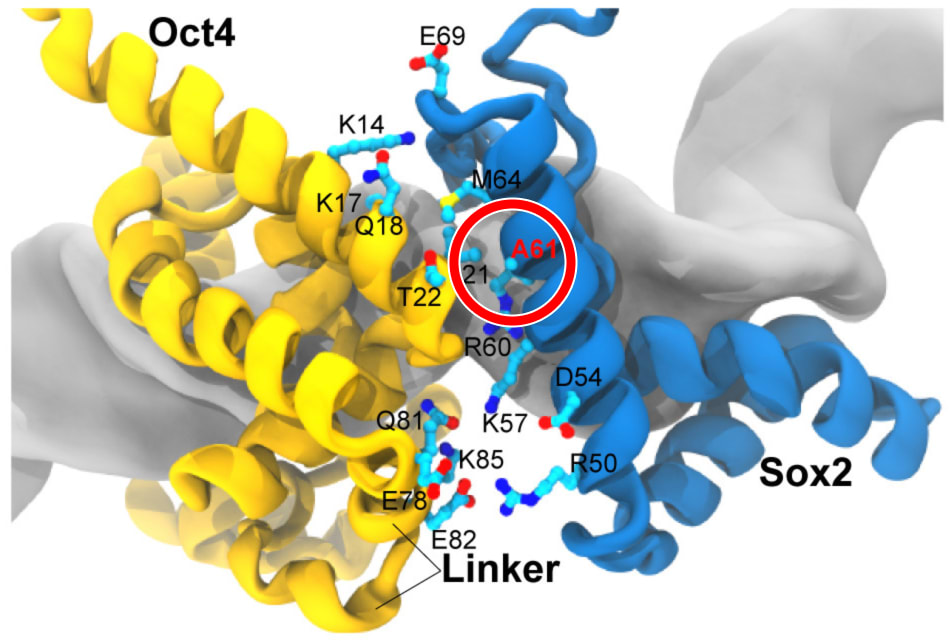

But that was not what Sergiy found. Instead, he found that he could replicate the behavior by changing a single amino acid.

Just one.

By swapping out an Alanine for a Valine at the 61st position in Sox2, it too could rescue the Oct4 mutants in the same way that the Sox17 mutant could.

What was going on? Sergiy pulled up some modeling software to try to better understand how such a simple change was making such a large difference.

When he saw the 3d structure, it all clicked. The amino acid swap occurred at the exact spot where Sox2 and the Oct4 mutants stuck to each other. It must be changing something about the bond formed between the two.

Further experiments confirmed this to be the case; the Alanine to Valine swap was increasing the strength of the bond between Sox and Oct by a thousand fold.

To those familiar with Yamanaka factors, it might seem exceptionally strange that modifying the bond BETWEEN Yamanaka factors could enable reprogramming.

Yamanaka factors are normally thought to work their magic by sticking to DNA and forming a sort of landing pad for a larger protein complex involved in gene expression.

But somehow the bond BETWEEN these Yamanaka factors was turning out to be just as important. (I dig more into why this is the case in the appendix)

After understanding the mechanism, Sergiy had a new idea. If one amino acid swap could enable decent reprogramming efficiency with a broken Oct4, what would it enable if it was combined with a working Oct4? Sergiy quickly ran the experiment, which produced another breakthrough. When combined with normal Oct4, super-SOX was making stem cells with at least ten times the efficiency of standard techniques.

After years of failed attempts, he had finally found his super reprogramming factor.

Super-SOX

Sergiy began testing other changes such as substituting Sox17’s C terminus domain (the part that sticks onto other proteins) into Sox2. By the time he was done with all of his improvements, his modified stem cell recipe was able to create stem cells at a rate 50 times that of normal reprogramming. The best starting cells were creating stem cells with 200x the efficiency. Sergiy dubbed the best version “super-SOX”.

By itself this would have been a huge discovery and a boon to stem cell scientists all over the world. But there was something even more interesting happening.

When Sergiy began looking at the gene expression of stem cells created with super-SOX, he noticed something incredible; these cells did not look like normal stem cells used in experiments for decades. Instead they looked like stem cells taken directly from a very early embryo.

These kinds of stem cells had been created in mice long ago, but they had never before been created for any other species except rats. Yet one after another, the signs (most notably activation of the Oct4 distal enhancer) indicated that Sergiy’s super-SOX was doing just that. It was making naive embryonic stem cells.

Mice from stem cells

To test whether what he thought he was seeing was real, Sergiy began experimenting on mice, trying to test the developmental potential of these new stem cells.

The logic of the experiment was simple; if he could grow a whole new mouse from super-SOX-derived stem cells, it would mean those cells had the same developmental potential as ones harvested directly from an early embryo.

This had been done before, but the efficiency was very low. Embryos made from stem cells often failed to develop after transfer, and those that did often died shortly after birth.

The experiment worked. And not only did it work, but the results were incredible. Super-SOX led to an 800% increase in the adult survival rate of stem cell derived mice relative to normal Sox2.

The red bar shows the adult survival rate of mice made from super-SOX derived iPSCs, when compared with the standard Yamanaka cocktail.

One particular cell line stood out; embryos derived from a line with an integrated SKM transgene were resulting in live births 90% of the time. That is ABOVE the live birth rate from normal conception, meaning super-SOX was able to beat nature.

Not everything is perfect; the experiments showed some loss of imprinting during super-SOX culturing. But there are ways to address this issue, and Sergiy believes there are still further optimizations to be made.

Why does super-SOX matter?

Remember how we previously had no way to turn the edited stem cells into an embryo? If super-SOX works as well in humans as the early data seems to indicate, that will no longer be the case. We’ll plausibly be able to take edited stem cells, apply super-SOX, stick them in a donor embryo, and MAKE a new person from them.

Readers might wonder why we need a donor embryo. If super-SOX cells can form an entirely new organism, what’s the point of the donor?

The answer is that although super-SOX cells form the fetus, they can’t form the placenta or the yolk sack (the fluid filled sack that the fetus floats in). Making cells that can form those tissues would require a further step beyond what Sergiy has done; a technique to create TOTIPOTENT stem cells.

So until someone figures out a way to do that, we’ll still need a donor embryo to form those tissues.

Let’s put it all together; if super-SOX works as well in humans as it does in mice, this is how you would make superbabies:

“Knock out fetal development genes” is one option to prevent the donor embryo’s cells from contributing to the fetus. There are other plausible methods to achieve this goal, such as overexpressing certain genes to solidify cell fate of the donor embryo’s cells before introducing super-SOX-derived cells.

How do we do this in humans?

The early data from the super-SOX in humans looks promising. Many of the key markers for naive pluripotency are activated by super-SOX, including the Oct4 distal enhancer, the most reliable cross-species indicator of naive developmental potential (note this discovery was made after publications so you actually can’t find it in the paper). Sergiy also showed that super-SOX induced HUMAN stem cells could contribute to the inner cell mass of mouse embryos, which is the first time this has ever been demonstrated.

But we don’t have enough evidence yet to start testing this in humans.

Before we can do so, we need to test super-SOX in primates. We need to show that you can make a monkey from super-SOX derived cells, and that those monkeys are healthy and survive to adulthood no less often than those that are conceived naturally.

If that can be demonstrated, ESPECIALLY if it can be demonstrated in conjunction with an editing protocol, we will have the evidence that we need to begin human trials.

Frustratingly, Sergiy has not yet been able to find anyone to fund these experiments. The paper on super-SOX came out a year ago, but to date I’ve only been able to raise about $100k for his research.

Unfortunately monkeys (specifically marmosets) are not cheap. To demonstrate germline transmission (the first step towards demonstrating safety in humans), Sergiy needs $4 million.

If any rich people out there think making superhumans is a worthwhile cause, this is a shovel ready project that is literally just waiting for funding; the primatology lab is ready to go, Sergiy is ready to go, they just need the money.

What if super-SOX doesn’t work?

So what if super-SOX doesn’t work? What if the primate trials conclude and we find that despite super-SOX showing promising early data in human stem cells and very promising mouse data, it is insufficient to make healthy primates? Is the superbabies project dead?

No. There are multiple alternatives to making an embryo from edited stem cells. Any of them would be sufficient to make the superbabies plan work (though some are more practical than others).

Eggs from Stem Cells

The first and most obvious alternative is creating eggs from stem cells. There are a half dozen startups, such as Conception and Ovelle, working on solving this problem right now, and if any of them succeeded we would have a working method to turn edited stem cells into a super egg.

These superbabies wouldn’t be quite as superlative as super-SOX derived embryos since only half of their DNA would be genetically engineered. But that would still be sufficient for multiple standard deviations of gain across traits such as intelligence, life expectancy, and others.

Another option is to make edited sperm.

Fluorescence-guided sperm selection

Edited sperm are potentially easier to make because you don’t need to recapitulate the whole sperm maturation process. The testicles can do it for you.

To explain I need to give a little bit of background on how sperm is formed naturally.

The inside of the testicles contain a bunch of little tubes called “seminiferous tubules”. These are home to a very important bunch of cells called spermatogonial stem cells. As you might be able to guess from the name, spermatogonial stem cells are in charge of making sperm. They sit inside the tubes and every now and then (though a process I won’t get into) they divide and one of the two resulting cells turns into sperm.

There’a complicated process taking place inside these tubules that allows for maturation of the sperm. You NEED this maturation process (or at least most of it) for the sperm to be capable of making a baby.

But we can’t recreate it in the lab yet.

So here’s an idea; how about instead of editing stem cells and try to turn them into sperm, we edit spermatogonial stem cells and stick them back in the testicles? The testicles could do the maturation for us.

You would need some way to distinguish the edited from the unedited sperm though, since the edited and the unedited sperm would get mixed together. You can solve this by adding in a protein to the edited stem cells that makes the sperm formed from them glow green. You can then use standard off-the-shelf flow cytometry to pick out the edited from the unedited sperm.

You also probably don’t want the baby to glow green, so it’s best to put the green glowing protein under the control of a tissue specific promoter. That way only the baby’s sperm would glow green rather than its whole body.

From a technical perspective, we’re probably not that far away from getting this working. We’ve successfully extracted and cultured human spermatogonial stem cells back in 2020. And we’ve managed to put them back in the testicles in non-human primates (this made functional sperm too!) So this is probably possible to do in humans.

The monkey experiments used alkylating chemotherapy to get rid of most of the existing stem cells before reinjecting the spermatogonial stem cells. Most people are not going to want to undergo chemotherapy to have superbabies, so there probably needs to be additional research done here to improve the transplantation success rates.

Still, most of the pieces are already in place for this to be tested.

Embryo cloning

Lastly, there is somatic cell nuclear transfer, or SCNT. SCNT is how dolly the sheep was cloned, though in this context we’d be using it to clone an embryo rather than an adult organism. SCNT is not currently safe enough for use in humans (many of the animals born using the technique have birth defects), but should advancements be made in this area it may become viable for human use.

What if none of that works?

If none of the above works, and the only technology my company can get working is the multiplex editing, we can always create super cows in the meantime. We have pretty good genetic predictors for a lot of traits in cows, and you can already use SCNT to make an edited cell into an embryo. The success rates aren’t as high as they are with natural conception, but no one really cares too much about that; if you can make one super bull, it can create many, many offspring with desirable traits such as improved disease resistance, better milk production, or a better metabolism that more efficiently converts feed into beef.

Unlike past GMO technologies, this one could work without inserting any non-cow genes into the cow genome; we could literally just put a bunch of the “extra beefy cow” genes into the same cow. You’d get a similar result from using traditional breeding or embryo selection; the editing would just massively speed up the process.

We could also likely use this tech to make farmed animals suffer less. A big reason factory farming sucks so much is because we’ve bred animals to get better at producing milk and meat but we’ve left all their instincts and wild drives intact. This creates a huge mismatch between what the animals want (and what they feel) and their current environment.

We could probably reduce suffering a decent bit just by decreasing these natural drives and directly reducing pain experienced by animals as the result of these practices.

Many animal advocates hate this idea because they believe we just shouldn’t use animals for making meat (and frankly they have a good point). But in the interim period where we’re still making meat from animals, this could make the scale of the moral disaster less bad, even if it still sucks.

What about legal issues?

At the moment, there is a rider attached to the annual appropriations bill in the United States that bans the FDA from considering any applications to perform germline gene editing.

Whether or not the FDA has the authority to enforce this ban is a question which has not yet been tested in court. The FDA does not have regulatory authority over IVF, so there is some reason to doubt its jurisdiction over this area.

Still, the legal situation in the United States at the moment isn’t exactly the most friendly to commercialization.

Fortunately, the United States is not the only country in the world. There are over a dozen countries which currently have no laws banning germline gene editing, and 5 where there are legal carve outs for editing in certain circumstances, such as editing to prevent a disease. Belgium, Colombia, Italy, Panama, and the UAE all have exceptions that allow heritable gene editing in certain circumstances (mostly related to health issues).

The very first application of gene editing will almost certainly be to prevent a disease that the parents have that they don’t want to pass on to their children. This is a serious enough issue that it is worth taking a small risk to prevent the child from going on to live an unhealthy, unhappy life.

From a technical standpoint, we are ready to do single gene editing in embryos RIGHT NOW. There are labs that have done this kind of editing in human embryos with undetectably low levels of off-target edits. My understanding is they are still working on improving their techniques to ensure no mosaicism of the resulting embryos, but it seems like they are pretty close to having that problem solved.

I am trying to convince one of them to launch a commercial entity outside the United States and get the ball rolling on this. This technology won’t make superbabies, but it COULD prevent monogenic diseases and reduce the risk of things like heart disease by editing PCSK9. If anyone is interested in funding this please reach out.

How we make this happen

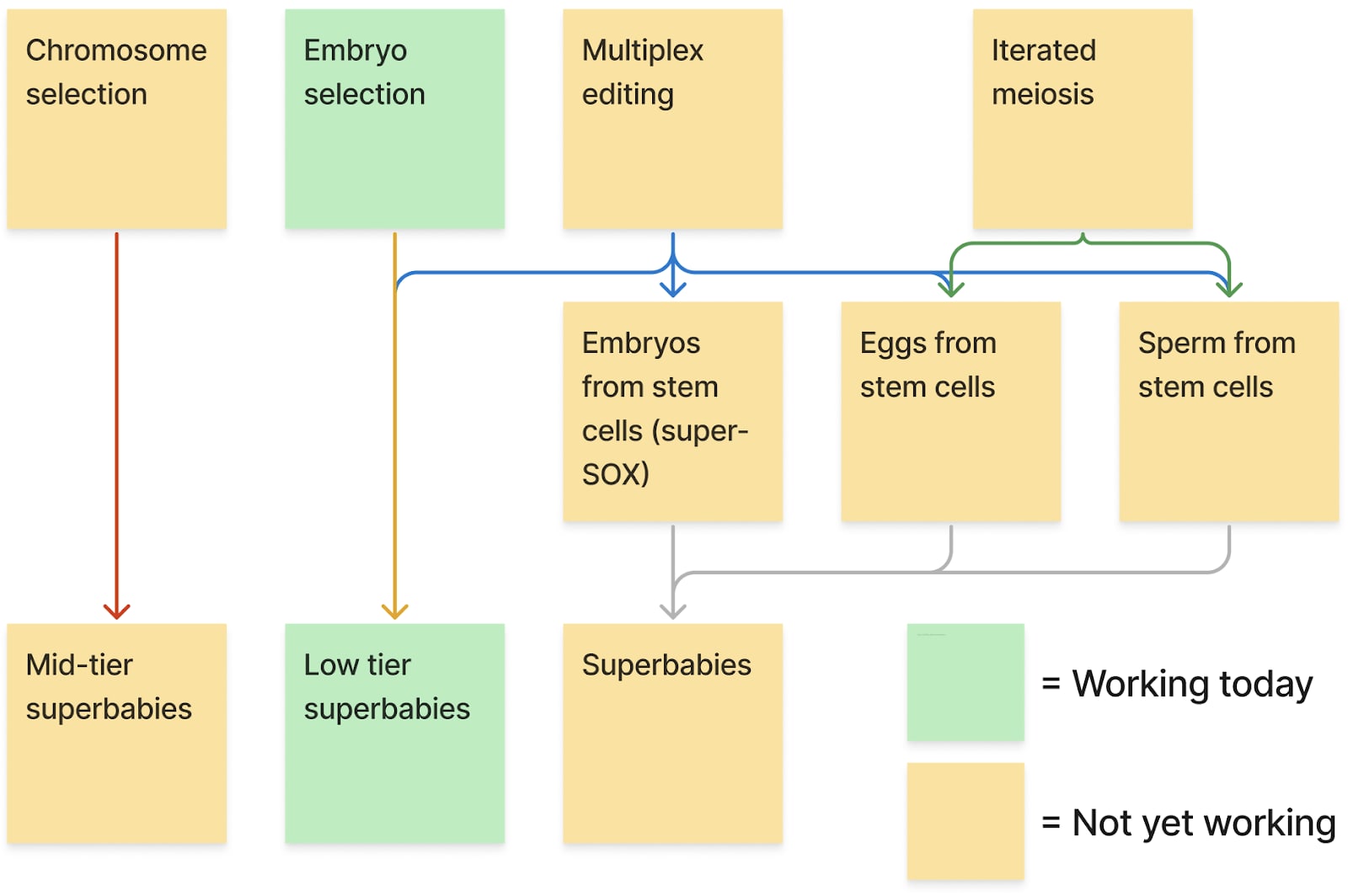

Here’s an oversimplified diagram of various protocols that could be used to make superbabies:

In this blog post I’ve mostly focused on the path that goes multiplex editing → embryos from stem cells → superbabies.

But there are other approaches to make an optimized genome. Chromosome selection is one I’ve only briefly mentioned in other posts, but one which completely bypasses the need for the kind of epigenetic correction necessary for other techniques. And there has been virtually no research on it despite the project appearing at least superficially feasible (use micromanipulators to move chromosomes from different sperm into the same egg).

Iterated meiotic selection is another approach to creating an optimized genome which I haven’t even touched on because it deserves its own post.

In my view the most sensible thing to do here is to place multiple bets; work on chromosome selection AND eggs from stem cells AND embryos from stem cells (a la super-SOX) AND sperm from stem cells (a la hulk sperm) AND multiplex editing all at the same time.

In the grand scheme, none of these projects are that expensive; my company will be trying to raise $8 million for our seed round in a few weeks which we believe will be enough for us to make significant progress on showing the ability to make dozens to hundreds of edits in stem cells (current state of the art is about 10 cumulative edits). Sergiy needs $4 million for super-SOX validation in primates, and probably additional funding beyond that to show the ability to create adult monkeys entirely from stem cells in conjunction with an editing protocol.

I don’t have precise estimates of the amounts needed for chromosome selection, but my guess is we could at the very least reduce uncertainty (and possibly make significant progress) with a year and $1-3 million.

Creating eggs from stem cells is somewhat more expensive. Conception has raised $38 million so far, and other companies have raised a smaller amount.

The approach I like the most here is the one taken by Ovelle, who is planning to use growth and transcription factors to replicate key parts of the environment in which eggs are produced rather than grow actual feeder cells to excrete those factors. If it works, this approach has the advantage of speed; it takes a long time to mature primordial germ cells into eggs replicating the natural process, so if you can recreate the process with transcription factors that saves a lot of time. Based on some conversations I’ve had with one of the founders I think $50 million could probably accelerate progress by about a year (though they are not looking to raise that much at the moment).

Making eggs from stem cells also has a very viable business even if none of the other technologies work; there are hundreds of thousands of couples desperate to have children that simply can’t produce enough eggs to have the kids they want.

This is the case for most of these technologies; multiplex editing will have a market to make super cows, gene therapy, basic research, and to do basic gene editing in embryos even if none of the other stuff works. Creating sperm from stem cells will have a market even without editing or iterated meiotic selection because you’ll be able to solve a certain kind of male infertility where the guy can’t create sperm. Embryo selection ALREADY has a rapidly growing market with an incredibly well-resourced customer base (you wouldn’t believe the number of billionaires and centimillionaires who used our services while I was working on embryo selection at Genomic Prediction). Chromosome selection might be able to just make superbabies in like a couple of years if we’re lucky and the research is straightforward.

So I think even pretty brain dead investors who somehow aren’t interested in fundamentally upgrading the human race will still see value in this.

Ahh yes, but what about AI?

Now we come to perhaps the biggest question of all.

Suppose this works. Suppose we can make genetically engineered superbabies. Will there even be time for them to grow up, or will AI take over first?

Given the current rate of improvement of AI, I would give a greater than 50% chance of AI having taken over the world before the first generation of superbabies grows up.

One might then reasonably ask what the point of all this is. Why work on making superbabies if it probably won’t matter?

There is currently no backup plan if we can’t solve alignment

If it turns out we can’t safely create digital gods and command them to carry out our will, then what? What do we do at that point?

No one has a backup plan. There is no solution like “oh, well we could just wait for X and then we could solve it.”

Superbabies is a backup plan; focus the energy of humanity’s collective genetic endowment into a single generation, and have THAT generation to solve problems like “figure out how to control digital superintelligence”.

It’s actually kind of nuts this isn’t the PRIMARY plan. Humanity has collectively decided to roll the dice on creating digital gods we don’t understand and may not be able to control instead of waiting a few decades for the super geniuses to grow up.

If we face some huge AI disaster, or if there’s a war between the US and China and no one can keep their chip fabs from getting blown up, what does that world look like? Almost no one is thinking about this kind of future.

But we should be. The current trajectory we’re on is utterly insane. Our CURRENT PLAN is to gamble 8 billion lives on the ability of a few profit driven entities to control digital minds we barely understand in the hopes it will give them and a handful of government officials permanent control over the world forever.

I really can’t emphasize just how fucking insane this is. People who think this is a sensible way for this technology to be rolled out are deluding themselves. The default outcome of the trajectory we’re on is death or disempowerment.

Maybe by some miracle that works and turns out well. Maybe Sam Altman will seize control of the US government and implement a global universal basic income and we’ll laugh about the days when we thought AGI might be a bad thing. I will just note that I am skeptical things will work out that way. Altman in particular seems to be currently trying to dismantle the non-profit he previously put into place to ensure the hypothetical benefits of AGI would be broadly distributed to everyone.

If the general public actually understood what these companies were doing and believed they were going to achieve it, we would be seeing the biggest protests in world history. You can’t just threaten the life and livelihood of 8 billion people and not expect pushback.

We are living in a twilight period where clued in people understand what’s coming but the general public hasn’t yet woken up. It is not a sustainable situation. Very few people understand that even if it goes “well”, their very survival will completely depend on the generosity of a few strangers who have no self-interested reason to care about them.

But people are going to figure this out sooner or later. And when they do, it would be pretty embarrassing if the only people with an alternative vision of the future are neo-luddite degrowthers who want people to unplug their refrigerators.

We need to start working on this NOW. Someone with initiative could have started on this project five years ago when prime editors came out and we finally had a means of editing most genetic variants in the human genome.

But no one has made it their job to make this happen. The academic institutions in charge of exploring these ideas are deeply compromised by insane ideologies. And the big commercial entities are too timid to do anything truly novel; once they discovered they had a technology that could potentially make a few tens of billions treating single gene genetic disorders, no one wanted to take any risks; better to take the easy, guaranteed money and spend your life on a lucrative endeavor improving the lives of 0.5% of the population than go for a hail mary project that will result in journalists writing lots of articles calling you a eugenicist.

I think in most worlds, gene editing won’t play a significant role in the larger strategic picture. But in perhaps 10-20%, where AGI just takes a long time or we have some kind of delay of superintelligence due to an AI disaster or war, this will become the most important technology in the world.

Given the expected value here and the relatively tiny amount of money needed to make significant progress (tens to hundreds of millions rather than tens to hundreds of billions), it would be kind of insane if we as a civilization didn’t make a serious effort to develop this tech.

Team Human

There are fundamentally two different kinds of futures that lie before us. In the first, we continue to let technology develop in every area as fast as it can with no long term planning or consideration of what kind of future we actually want to build.

We follow local incentive gradients, heedless of the consequences. No coordination is needed because nothing can possibly go wrong.

This is the world we are building right now. One in which humans are simply biological bootloaders for our digital replacements.

In the second world we take our time before summoning the digital gods. We make ourselves smarter, healthier, and wiser. We take our time and make sure we ACTUALLY UNDERSTAND WHAT WE’RE DOING before opening Pandora’s box.

This latter world is much more human than the first. It involves people making decisions and learning things. You and your children will actually have power and autonomy over your own lives in this world.

There will still be death and suffering and human fallibility in the second world (though less of all of these). We don’t get to magically paper over all problems in this world by saying “AI will fix it” and just crossing our fingers that it will be true. Instead we ourselves, or at least our children, will have to deal with the problems of the world.

But this world will be YOURS. It will belong to you and your children and your friends and your family. All of them will still matter, and if at some point we DO decide to continue along the road to build digital superintelligence, it will be because humanity (or at least its selected representatives) thought long and hard and decided that was a step worth taking.

If we want this kind of world, we need to start building it now. We need to start funding companies to work on the technical roadblocks to bringing superbabies to life. We need to break this stupid taboo around talking about creating genetically engineered people and make sure policymakers are actually informed of just how much this technology could improve human life. It is ludicrous beyond belief that we have gene therapies designed to edit genes for millions of dollars, yet editing those exact same genes in an embryo for a fraction of the money in a more controlled, more effective, more verifiable way is considered unethical.

If you’re interested in making this happen, be it as a biologist working in a wet lab, a funder, or a policymaker, please reach out to me. You can reach me at genesmithlesswrong@gmail.com or simply through a LessWrong private message. My company will be raising our seed round to work on multiplex editing in the next couple of weeks, so if you’re in venture capital and you want to make this happen, please get a hold of me.

Appendix

Amendments to this article as of 2025-03-05

This article has been amended in a few places. The disease graphs have been updated based on additional work we did using a newer, better version of our fine-mapping software to better account for causal uncertainty. The "benefit" of editing went down from these changes (meaning you can't get the same benefit from the same number of edits).

These graphs are constructed in basically the simplest possible way, by using a single GWAS. It's very likely that by the time we are ready to do actual human editing we will be able to do better than the graphs suggest.

The life expectancy increase from editing also went down (by a factor of 3!). This was, to put it bluntly, a pretty dumb mistake on our part. The life expectancy GWAS study we used had a non-standard way of reporting effect sizes, and when we discovered and corrected this the effect size went down.

iPSCs were named after the iPod

You might wonder why the i in iPSCs is lowercase. It’s literally because Yamanaka was a fan of the iPod and was inspired to use the same lowercase naming convention.

On autoimmune risk variants and plagues

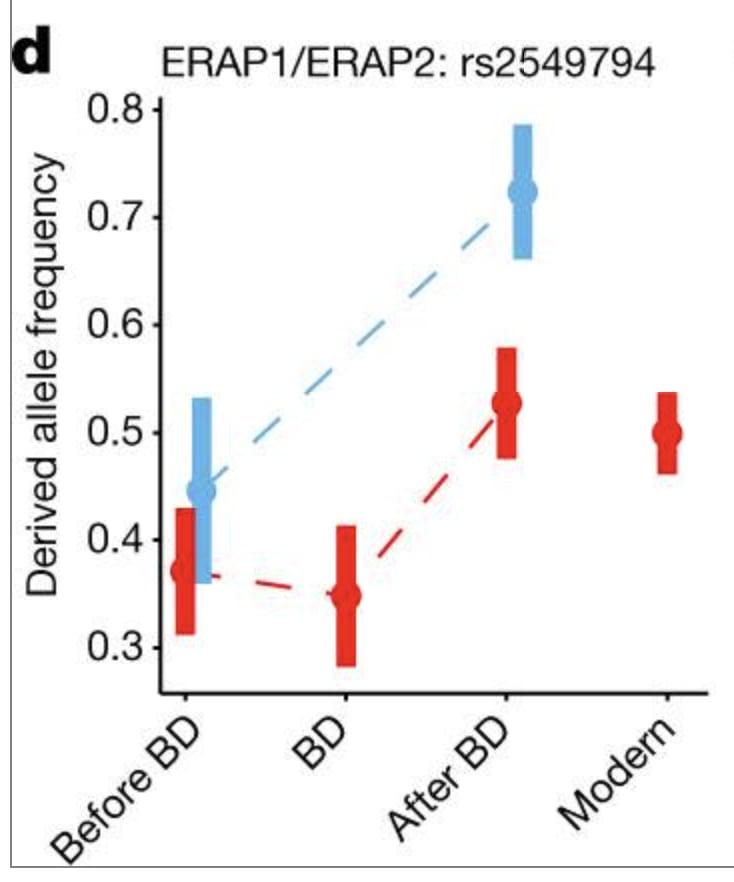

The studies on genes that have been protective against past plagues are kind of insane. There’s a genetic variant in the ERAP2 gene that jumped from 40% prevalence to 70% prevalence in ~100 years in Denmark thanks to its ability to reduce mortality from the bubonic plague.

For anyone not familiar with evolution, this is an INCREDIBLY fast spread of a genetic variant. It’s so fast that you can’t even really explain it by people with this gene out-reproducing those without it. You can only explain it if a large portion of the people without the genetic variant are dying in a short time period.

Today this same genetic variant is known to increase the risk of Crohn's disease and a variety of other autoimmune conditions.

ERAP variants aren’t the ONLY ones that protect against plague risk. There are half a dozen others mentioned in the study. So we aren’t going to make the entire population fragile to plague just by editing this variant.

Two simples strategies for minimizing autoimmune risk and pandemic vulnerability

There are two fairly straightforward ways to decrease the risk of autoimmune disease while minimizing population level vulnerability to future pandemics.

First of all, we can dig up plague victims from mass graves and look at their DNA. Variants that are overrepresented among people in mass burial sites compared with the modern population probably weren’t very helpful for surviving past pandemics. So we should be more cautious than usual about swapping people’s genetic variants to those of plague victims, even if it decreases the risk of autoimmune diseases.

Second, we should have an explicit policy of preserving genetic diversity in the human immune system. There’s a temptation to just edit the variants in a genome that have the largest positive impact on a measured trait. But in the case of the immune system, it’s much better from a population level perspective to decrease different people’s autoimmune risk in different ways.

“I don’t want someone else’s genes in my child”

One thing we didn’t mention in the post is what could be done with gene editing if we JUST restrict ourselves to editing in variants that are present in one of the two parents.

We ran some simulations and came to a somewhat surprising conclusion: there probably won’t be that big of a reduction if you do this!

So even if you’re an inheritance maximalist, you can still get major benefits from gene editing.

Could I use this technology to make a genetically enhanced clone of myself?

For some versions of this technology (in particular super-SOX or SCNT-derived solutions), you could indeed make a genetically enhanced clone of yourself.

Genetically enhanced clones are too weird to be appealing to most people, so I don’t think we’re ever going to see this kind of thing being done at scale. But maybe someday someone will start the “Me 2” movement.

Why does super-SOX work?

Super-SOX is a modification of the sox2 protein designed to increase the strength of the bond between it and Oct4. You might wonder why increasing the strength of this bond increases reprogramming efficiency and makes more developmentally potent stem cells.

There are two pieces to the answer. But to explain them I need to give you a bit of background first.

Sox2 (and all the other Yamanaka factors, for that matter), are transcription factors. This means they stick to DNA. They also stick to other proteins. You can think of them like a person that’s holding hands with the DNA and holding hands with another protein.

It’s beyond the scope of even this appendix to talk about all the other proteins than can bind to, but among the most important are a bunch of proteins that form something called the RNA transcriptase complex. RNA transcriptase is the giant enzyme that turns DNA into messenger RNA (which are then converted into proteins). RNA transcriptase can’t directly bind to DNA, so in order for it to do its thing, it needs a bunch of helper proteins which get it into position.

Sox2 and Oct4 are two such “helper proteins”. They’re crucial because they bind directly to DNA, which means the RNA transcriptase complex can’t even START forming unless Sox2 or Oct4 or both start the process off by sticking to DNA in the right spots.

This DNA binding ability is apparent in their very structure.

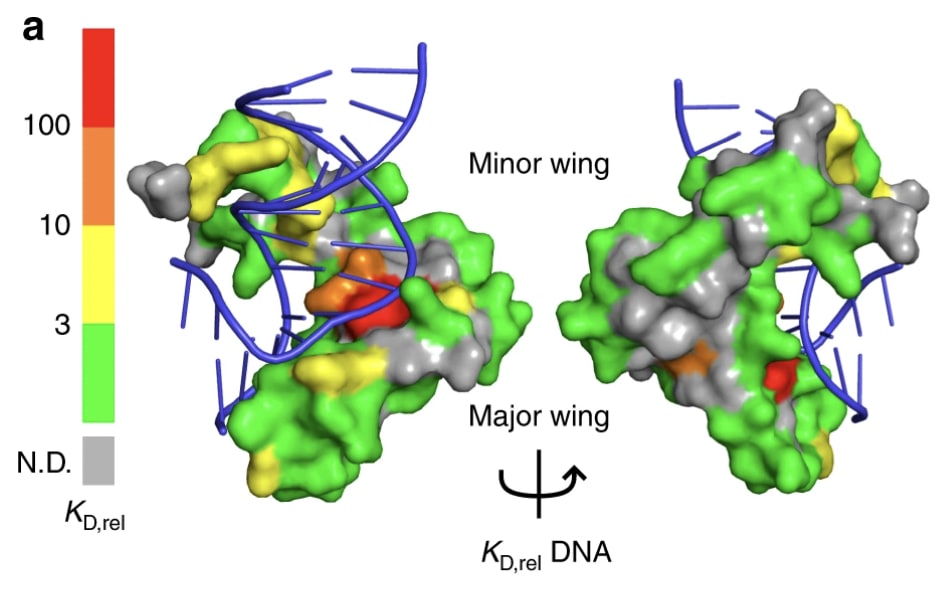

See figure 6a of this nature paper

See how the protein itself is folded in a way such that it kind of “wraps around” the DNA? That’s by design; it allows the protein to bind to the DNA if and only if the sequence matches with Oct2’s binding domain. So sox2 doesn’t just bind to any sequence of DNA. It needs a very specific one: 5′-(A/T)(A/T)CAAAG-3′ if you want to get technical about it.

This means that it doesn’t just bind anywhere. It only binds to DNA sequences that match with its binding domain.

And not every gene has such a sequence. Only some genes do, which means Sox2 won’t activate transcription of every gene.

So sox2 can bind to DNA and initiate transcription of certain genes, but the bonds Sox2 forms with DNA are… kind of weak. It often “comes off” the DNA when something else hits it or when the DNA just wiggles a little too much.

So sox2, along with all the other Yamanaka factors, are constantly coming and going from these binding sites on the DNA.

The fraction of time that sox2 and the other Yamanaka factors spend stuck to the DNA is a huge determining factor in whether or not the RNA transcriptase complex actually forms; the higher the percentage of the time they are bound to the DNA, the more often that complex forms and the more of that protein gets produced.

If we could somehow increase the strength of that bond, it would significantly increase the amount of proteins produced.

One way to do this would be to directly modify the protein so that it can stick to the DNA better. But another way would be to modify the strength of the bond it has with something ELSE that sticks to DNA in the same region.

And that’s exactly what super-SOX does. It increases the strength of the bond with oct4, which helps sox2 “hold on” to the DNA. Even if the DNA wiggles too much and sox2 gets knocked off, it will still be held down by oct4, which is bound right next to it.

It should be noted that not all genes have a binding motif for sox2 and oct4 right next to each other. But (incredibly), it seems that most of the key pluripotency genes, the ones that are really important for making naive embryonic stem cells, DO have such a binding motif.

That’s why super-SOX works. It increases transcription of genes with a sox-oct motif next to the gene. And it just so happens that the key genes to make naive cells have a sox-oct motif next to them.

This is almost certainly not an accident. Sox and Oct are naturally occuring proteins that play key roles in embryonic development. So the fact that sox/oct motifs play a key role in creating and maintaining naive embryonic stem cells is not all that surprising.

How was the IQ grain graph generated?

The graph for IQ was generated by ranking genetic variants by their effect sizes and showing how they add up if you put them all in the same genome. We take into account uncertainty about which of a cluster of nearby genetic variants is actually CAUSING the observed effect. We also adjust for the fact that the IQ test issued by UK Biobank isn’t particularly good. I believe it has a test/retest correlation of 0.61, which is significantly below the 0.9 of a gold standard IQ test.

We also account for assortative mating on IQ (our assumption is a correlation of 0.4, which we think is reasonable based on the research literature).

A huge amount of work went into dealing with missing SNPs in the datasets used to train these predictors. There’s too much math-y detail to get into in this post, but some of the genetic variants we would hope to measure a missing from the datasets and kman had to write custom fine-mapping software to deal with this.

We couldn’t find anyone else who had done this before, so we’ll probably publish a paper at some point explaining our technique and making the software kman wrote available to other genetics researchers.

If you’ve made it this far, please send me a DM! Most people don’t read 30 page blog posts and I always enjoy hearing from people that do. Let me know why you read all the way through this, what you enjoyed most, and what you think about the superbabies project.

Yeah, in Star Trek, genetic engineering for increased intelligence reliably produces arrogant bastards, but that's just so they don't have to show the consequences of genetic engineering on humans...