I think a very common problem in alignment research today is that people focus almost exclusively on a specific story about strategic deception/scheming, and that story is a very narrow slice of the AI extinction probability mass. At some point I should probably write a proper post on this, but for now here are a few off-the-cuff example AI extinction stories which don't look like the prototypical scheming story. (These are copied from a Facebook thread.)

- Perhaps the path to superintelligence looks like applying lots of search/optimization over shallow heuristics. Then we potentially die to things which aren't smart enough to be intentionally deceptive, but nonetheless have been selected-upon to have a lot of deceptive behaviors (via e.g. lots of RL on human feedback).

- The "Getting What We Measure" scenario from Paul's old "What Failure Looks Like" post.

- The "fusion power generator scenario".

- Perhaps someone trains a STEM-AGI, which can't think about humans much at all. In the course of its work, that AGI reasons that an oxygen-rich atmosphere is very inconvenient for manufacturing, and aims to get rid of it. It doesn't think about humans at all, but the human operators can't understand

Also (separate comment because I expect this one to be more divisive): I think the scheming story has been disproportionately memetically successful largely because it's relatively easy to imagine hacky ways of preventing an AI from intentionally scheming. And that's mostly a bad thing; it's a form of streetlighting.

Most of the problems you discussed here more easily permit hacky solutions than scheming does.

True, but Buck's claim is still relevant as a counterargument to my claim about memetic fitness of the scheming story relative to all these other stories.

IMO the main argument for focusing on scheming risk is that scheming is the main plausible source of catastrophic risk from the first AIs that either pose substantial misalignment risk or that are extremely useful (as I discuss here). These other problems all seem like they require the models to be way smarter in order for them to be a big problem. Though as I said here, I'm excited for work on some non-scheming misalignment risks.

scheming is the main plausible source of catastrophic risk from the first AIs that either pose substantial misalignment risk or that are extremely useful...

Seems quite wrong. The main plausible source of catastrophic risk from the first AIs that either pose substantial misalignment risk or that are extremely useful is that they cause more powerful AIs to be built which will eventually be catastrophic, but which have problems that are not easily iterable-upon (either because problems are hidden, or things move quickly, or ...).

And causing more powerful AIs to be built which will eventually be catastrophic is not something which requires a great deal of intelligent planning; humanity is already racing in that direction on its own, and it would take a great deal of intelligent planning to avert it. This story, for example:

- People try to do the whole "outsource alignment research to early AGI" thing, but the human overseers are themselves sufficiently incompetent at alignment of superintelligences that the early AGI produces a plan which looks great to the overseers (as it was trained to do), and that plan totally fails to align more-powerful next-gen AGI at all. And at that point, they're already on the more-powerful next gen, so it's too late.

This story sounds clearly extremely plausible (do you disagree with that?), involves exactly the sort of AI you're talking about ("the first AIs that either pose substantial misalignment risk or that are extremely useful"), but the catastrophic risk does not come from that AI scheming.

This problem seems important (e.g. it's my last bullet here). It seems to me much easier to handle, because if this problem is present, we ought to be able to detect its presence by using AIs to do research on other subjects that we already know a lot about (e.g. the string theory analogy here). Scheming is the only reason why the model would try to make it hard for us to notice that this problem is present.

A few problems with this frame.

First: you're making reasonably-pessimistic assumptions about the AI, but very optimistic assumptions about the humans/organization. Sure, someone could look for the problem by using AIs to do research on other subjects that we already know a lot about. But that's a very expensive and complicated project - a whole field, and all the subtle hints about it, need to be removed from the training data, and then a whole new model trained! I doubt that a major lab is going to seriously take steps much cheaper and easier than that, let alone something that complicated.

One could reasonably respond "well, at least we've factored apart the hard technical bottleneck from the part which can be solved by smart human users or good org structure". Which is reasonable to some extent, but also... if a product requires a user to get 100 complicated and confusing steps all correct in order for the product to work, then that's usually best thought of as a product design problem, not a user problem. Making the plan at least somewhat robust to people behaving realistically less-than-perfectly is itself part of the problem.

Second: looking for the problem by testing on other f...

See also ‘The Main Sources of AI Risk?’ by Wei Dai and Daniel Kokotajlo, which puts forward 35 routes to catastrophe (most of which are disjunctive). (Note that many of the routes involve something other than intent alignment going wrong.)

In response to the Wizard Power post, Garrett and David were like "Y'know, there's this thing where rationalists get depression, but it doesn't present like normal depression because they have the mental habits to e.g. notice that their emotions are not reality. It sounds like you have that."

... and in hindsight I think they were totally correct.

Here I'm going to spell out what it felt/feels like from inside my head, my model of where it comes from, and some speculation about how this relates to more typical presentations of depression.

Core thing that's going on: on a gut level, I systematically didn't anticipate that things would be fun, or that things I did would work, etc. When my instinct-level plan-evaluator looked at my own plans, it expected poor results.

Some things which this is importantly different from:

- Always feeling sad

- Things which used to make me happy not making me happy

- Not having energy to do anything

... but importantly, the core thing is easy to confuse with all three of those. For instance, my intuitive plan-evaluator predicted that things which used to make me happy would not make me happy (like e.g. dancing), but if I actually did the things they still made me ha...

This seems basically right to me, yup. And, as you imply, I also think the rat-depression kicked in for me around the same time likely for similar reasons (though for me an at-least-equally large thing that roughly-coincided was the unexpected, disappointing and stressful experience of the funding landscape getting less friendly for reasons I don't fully understand.) Also some part of me thinks that the model here is a little too narrow but not sure yet in what way(s).

This matches with the dual: mania. All plans, even terrible ones, seem like they'll succeed, and this has flow-through effects to elevated mood, hyperactivity, etc.

Whether or not this happens in all minds, the fact that people can alternate fairly rapidly between depression and mania with minimal trigger suggests there can be some kind of fragile "chemical balance" or something that's easily upset. It's possible that's just in mood disorders and more stable minds are just vulnerable to the "too many negative updates at once" thing without greater instability.

Like, when I hear you say "your instinctive plan-evaluator may end up with a global negative bias" I'm like, hm, why not just say "if you notice everything feels subtly heavier and like the world has metaphorically lost color"

Because everything did not feel subtly heavier or like the world had metaphorically lost color. It was just, specifically, that most nontrivial things I considered doing felt like they'd suck somehow, or maybe that my attention was disproportionately drawn to the ways in which they might suck.

And to be clear, "plan predictor predicts failure" was not a pattern of verbal thought I noticed, it's my verbal description of the things I felt on a non-verbal level. Like, there is a non-verbal part of my mind which spits out various feelings when I consider doing different things, and that part had a global negative bias in the feelings it spit out.

I use this sort of semitechnical language because it allows more accurate description of my underlying feelings and mental motions, not as a crutch in lieu of vague poetry.

... But It's Fake Tho

Epistemic status: I don't fully endorse all this, but I think it's a pretty major mistake to not at least have a model like this sandboxed in one's head and check it regularly.

Full-cynical model of the AI safety ecosystem right now:

- There’s OpenAI, which is pretending that it’s going to have full AGI Any Day Now, and relies on that narrative to keep the investor cash flowing in while they burn billions every year, losing money on every customer and developing a product with no moat. They’re mostly a hype machine, gaming metrics and cherry-picking anything they can to pretend their products are getting better. The underlying reality is that their core products have mostly stagnated for over a year. In short: they’re faking being close to AGI.

- Then there’s the AI regulation activists and lobbyists. They lobby and protest and stuff, pretending like they’re pushing for regulations on AI, but really they’re mostly networking and trying to improve their social status with DC People. Even if they do manage to pass any regulations on AI, those will also be mostly fake, because (a) these people are generally not getting deep into the bureaucracy which would actually

What makes you confident that AI progress has stagnated at OpenAI? If you don’t have the time to explain why I understand, but what metrics over the past year have stagnated?

Could you name three examples of people doing non-fake work? Since towardsness to non-fake work is easier to use for aiming than awayness from fake work.

I do not necessarily disagree with this, coming from a legal / compliance background. If you see any of my profiles, I constantly complain about "performative compliance" and "compliance theatre". Painfully present across the legal and governance sectors.

That said: can you provide examples of activism or regulatory efforts that you do agree with? What does a "non fake" regulatory effort look like?

I don't think it would be okay to dismiss your take entirely, but it would be great to see what solutions you'd propose too. This is why I disagree in principle, because there are no specific points to contribute to.

In Europe, paradoxically, some of the people "close enough to the bureaucracy" that pushed for the AI Act to include GenAI providers, were OpenAI-adjacent.

But I will rescue this:

"(b) the regulatory targets themselves are aimed at things which seem easy to target (e.g. training FLOP limitations) rather than actually stopping advanced AI"

BigTech is too powerful to lobby against. "Stopping advanced AI" per se would contravene many market regulations (unless we define exactly what you mean by advanced AI and the undeniable dangers to people's lives). Regulators can only ...

What domains of 'real improvement' exist that are uncoupled to human perceptions of improvement, but still downstream of text prediction?

As defined, this is a little paradoxical: how could I convince a human like you to perceive domains of real improvement which humans do not perceive...?

correctly guessing the true authors of anonymous text

See, this is exactly the example I would have given: truesight is an obvious example of a domain of real improvement which appears on no benchmarks I am aware of, but which appears to correlate strongly with the pretraining loss, is not applied anywhere (I hope), is unobvious that LLMs might do it and the capability does not naturally reveal itself in any standard use-cases (which is why people are shocked when it surfaces), and it would have been easy for no one to have observed it up until now or dismissed it, and even now after a lot of publicizing (including by yours truly), only a few weirdos know much about it.

Why can't there be plenty of other things like inner-monologue or truesight? ("Wait, you could do X? Why didn't you tell us?" "You never asked.")

...What domains of 'real improvement' exist that are uncoupled to human perceptions

On o3: for what feels like the twentieth time this year, I see people freaking out, saying AGI is upon us, it's the end of knowledge work, timelines now clearly in single-digit years, etc, etc. I basically don't buy it, my low-confidence median guess is that o3 is massively overhyped. Major reasons:

- I've personally done 5 problems from GPQA in different fields and got 4 of them correct (allowing internet access, which was the intent behind that benchmark). I've also seen one or two problems from the software engineering benchmark. In both cases, when I look at the actual problems in the benchmark, they are easy, despite people constantly calling them hard and saying that they require expert-level knowledge.

- For GPQA, my median guess is that the PhDs they tested on were mostly pretty stupid. Probably a bunch of them were e.g. bio PhD students at NYU who would just reflexively give up if faced with even a relatively simple stat mech question which can be solved with a couple minutes of googling jargon and blindly plugging two numbers into an equation.

- For software engineering, the problems are generated from real git pull requests IIUC, and it turns out that lots of those are things like e

I just spent some time doing GPQA, and I think I agree with you that the difficulty of those problems is overrated. I plan to write up more on this.

@johnswentworth Do you agree with me that modern LLMs probably outperform (you with internet access and 30 minutes) on GPQA diamond? I personally think this somewhat contradicts the narrative of your comment if so.

Ok, so sounds like given 15-25 mins per problem (and maybe with 10 mins per problem), you get 80% correct. This is worse than o3, which scores 87.7%. Maybe you'd do better on a larger sample: perhaps you got unlucky (extremely plausible given the small sample size) or the extra bit of time would help (though it sounds like you tried to use more time here and that didn't help). Fwiw, my guess from the topics of those questions is that you actually got easier questions than average from that set.
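To put a rough number on the "small sample size" point, here is a minimal sketch. It assumes the sample is the 5 GPQA problems mentioned earlier in the thread (4 correct), treats each problem as an independent trial, and uses the 87.7% figure quoted above as a hypothetical true per-problem accuracy. The function name `prob_at_most` is made up for this illustration.

```python
from math import comb


def prob_at_most(k: int, n: int, p: float) -> float:
    """Binomial probability of getting at most k successes in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


# Assumptions for illustration only: 5 problems attempted, 4 correct,
# and a solver whose true accuracy equals the 87.7% quoted for o3.
N_PROBLEMS = 5
N_CORRECT = 4
ASSUMED_ACCURACY = 0.877

# Chance that a solver with the assumed accuracy scores 4/5 or worse.
p_unlucky = prob_at_most(N_CORRECT, N_PROBLEMS, ASSUMED_ACCURACY)
print(f"P(score <= {N_CORRECT}/{N_PROBLEMS}) = {p_unlucky:.3f}")  # about 0.48
```

Under those assumptions, a solver exactly as accurate as the quoted o3 score would still land at 4 out of 5 or lower nearly half the time, so a 5-problem sample cannot distinguish 80% from 87.7%.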

I continue to think these LLMs will probably outperform (you with 30 mins). Unfortunately, the measurement is quite expensive, so I'm sympathetic to you not wanting to get to ground here. If you believe that you can beat them given just 5-10 minutes, that would be easier to measure. I'm very happy to bet here.

I think that even if it turns out you're a bit better than LLMs at this task, we should note that it's pretty impressive that they're competitive with you given 30 minutes!

So I still think your original post is pretty misleading [ETA: with respect to how it claims GPQA is really easy].

I think the models would beat you by more at FrontierMath.

I think that how you talk about the questions being “easy”, and the associated stuff about how you think the baseline human measurements are weak, is somewhat inconsistent with you being worse than the model.

Generalizing the lesson here: the supposedly-hard benchmarks for which I have seen a few problems (e.g. GPQA, software eng) turn out to be mostly quite easy, so my prior on other supposedly-hard benchmarks which I haven't checked (e.g. FrontierMath) is that they're also mostly much easier than they're hyped up to be

Daniel Litt's account here supports this prejudice. As a math professor, he knew instantly how to solve the low/medium-level problems he looked at, and he suggests that each "high"-rated problem would be likewise instantly solvable by an expert in that problem's subfield.

And since LLMs have eaten ~all of the internet, they essentially have the crystallized-intelligence skills for all (sub)fields of mathematics (and human knowledge in general). So from their perspective, all of those problems are very "shallow". No human shares their breadth of knowledge, so math professors specialized even in slightly different subfields would indeed have to do a lot of genuine "deep" cognitive work; this is not the case for LLMs.

GPQA stuff is even worse, a literal advanced trivia quiz that seems moderately resistant to literal humans literally googling things, but not to the way the kno...

[...] he suggests that each "high"-rated problem would be likewise instantly solvable by an expert in that problem's subfield.

This is an exaggeration and, as stated, false.

Epoch AI made 5 problems from the benchmark public. One of those was ranked "High", and that problem was authored by me.

- It took me 20-30 hours to create that submission. (To be clear, I considered variations of the problem, ran into some dead ends, spent a lot of time carefully checking my answer was right, wrote up my solution, thought about guess-proof-ness[1] etc., which ate up a lot of time.)

- I would call myself an "expert in that problem's subfield" (e.g. I have authored multiple related papers).

- I think you'd be very hard-pressed to find any human who could deliver the correct answer to you within 2 hours of seeing the problem.

- E.g. I think it's highly likely that I couldn't have done that (I think it'd have taken me more like 5 hours), I'd be surprised if my colleagues in the relevant subfield could do that, and I think the problem is specialized enough that few of the top people in CodeForces or Project Euler could do it.

On the other hand, I don't think the problem is very hard insight-wise - I th...

I'm not confident one way or another.

I think my key crux is that in domains where there is a way to verify that the solution actually works, RL can scale to superhuman performance, and mathematics/programming are domains that are unusually easy to verify/gather training data for RL performance, so with caveats it can become rather good at those specific domains/benchmarks like millennium prize evals, but the important caveat is I don't believe this transfers very well to domains where verifying isn't easy, like creative writing.

I'm bearish on that. I expect GPT-4 to GPT-5 to be palpably less of a jump than GPT-3 to GPT-4, same way GPT-3 to GPT-4 was less of a jump than GPT-2 to GPT-3. I'm sure it'd show lower loss, and saturate some more benchmarks, and perhaps an o-series model based on it clears FrontierMath, and perhaps programmers and mathematicians would be able to use it in an ever-so-bigger number of cases...

I was talking about the 1 GW systems that would be developed in late 2026-early 2027, not GPT-5.

That's the opposite of my experience. Nearly all the papers I read vary between "trash, I got nothing useful out besides an idea for a post explaining the relevant failure modes" and "high quality but not relevant to anything important". Setting up our experiments is historically much faster than the work of figuring out what experiments would actually be useful.

There are exceptions to this, large projects which seem useful and would require lots of experimental work, but they're usually much lower-expected-value-per-unit-time than going back to the whiteboard, understanding things better, and doing a simpler experiment once we know what to test.

Actually, I've changed my mind, in that the reliability issue probably does need at least non-trivial theoretical insights to make AIs work.

I am unconvinced that "the" reliability issue is a single issue that will be solved by a single insight, rather than AIs lacking procedural knowledge of how to handle a bunch of finicky special cases that will be solved by online learning or very long context windows once hardware costs decrease enough to make one of those approaches financially viable.

If I were to think about it a little, I'd suspect the big difference that LLMs and humans have is state/memory, where humans do have state/memory, but LLMs are currently more or less stateless today, and RNN training has not been solved to the extent transformers were.

One thing I will also say is that AI winters will be shorter than previous AI winters, because AI products can now be sort of made profitable, and this gives an independent base of money for AI research in ways that weren't possible pre-2016.

I agree with you on your assessment of GPQA. The questions themselves appear to be low quality as well. Take this one example, although it's not from GPQA Diamond:

In UV/Vis spectroscopy, a chromophore which absorbs red colour light, emits _____ colour light.

The correct answer is stated as yellow and blue. However, the question should read transmits, not emits; molecules cannot trivially absorb and re-emit light of a shorter wavelength without resorting to trickery (nonlinear effects, two-photon absorption).

This is, of course, a cherry-picked example, but is exactly characteristic of the sort of low-quality science questions I saw in school (e.g. with a teacher or professor who didn't understand the material very well). Scrolling through the rest of the GPQA questions, they did not seem like questions that would require deep reflection or thinking, but rather the sort of trivia things that I would expect LLMs to perform extremely well on.

I'd also expect "popular" benchmarks to be easier/worse/optimized for looking good while actually being relatively easy. OAI et al. probably have the mother of all publication biases with respect to benchmarks, and are selecting very heavily for items within this collection.

About a month ago, after some back-and-forth with several people about their experiences (including on lesswrong), I hypothesized that I don't feel the emotions signalled by oxytocin, and never have. (I do feel some adjacent things, like empathy and a sense of responsibility for others, but I don't get the feeling of loving connection which usually comes alongside those.)

Naturally I set out to test that hypothesis. This note is an in-progress overview of what I've found so far and how I'm thinking about it, written largely to collect my thoughts and to see if anyone catches something I've missed.

Under the hypothesis, this has been a life-long thing for me, so the obvious guess is that it's genetic (the vast majority of other biological state turns over too often to last throughout life). I also don't have a slew of mysterious life-long illnesses, so the obvious guess is that it's pretty narrowly limited to oxytocin - i.e. most likely a genetic variant in either the oxytocin gene or receptor, maybe the regulatory machinery around those two but that's less likely as we get further away and the machinery becomes entangled with more other things.

So I got my genome sequenced, and went...

The receptor was the first one I checked, and sure enough I have a single-nucleotide deletion 42 amino acids into the open reading frame (ORF) of the 389 amino acid protein. That will induce a frameshift error, completely fucking up the rest of the protein.
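For readers unfamiliar with why a single-nucleotide deletion is so destructive, here is a minimal sketch of a frameshift. The DNA sequence `toy_orf` and the deletion position are invented for illustration and are not taken from the oxytocin receptor gene; the helper names `translate` and `delete_base` are also hypothetical. The codon table is the standard genetic code.

```python
from itertools import product

# Standard genetic code, codons enumerated with bases in T, C, A, G order.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(dna: str) -> str:
    """Translate DNA in frame 0 until a stop codon or the end of the sequence."""
    protein = []
    for i in range(0, len(dna) - 2, 3):
        amino_acid = CODON_TABLE[dna[i : i + 3]]
        if amino_acid == "*":  # stop codon
            break
        protein.append(amino_acid)
    return "".join(protein)


def delete_base(dna: str, index: int) -> str:
    """Return the sequence with the single nucleotide at `index` removed."""
    return dna[:index] + dna[index + 1 :]


# Toy open reading frame (made up): ATG GCT GAA CTG CGT AAA TAA
toy_orf = "ATGGCTGAACTGCGTAAATAA"
# Delete the first base of the third codon.
mutant = delete_base(toy_orf, 6)

print(translate(toy_orf))  # MAELRK
print(translate(mutant))   # MANCVN
```

The first two amino acids survive, but every codon after the deletion is read one base off, so everything downstream changes (and the original stop codon is no longer read in frame). The same logic applies to a deletion 42 amino acids into a 389 amino acid protein: the large majority of the protein would be mistranslated or cut short.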

I'm kind of astonished that this kind of advance prediction panned out!

I admit I was somewhat surprised as well. On a gut level, I did not think that the very first things to check would turn up such a clear and simple answer.

This might be a bad idea right now, if it makes John's interests suddenly more normal in a mostly-unsteered way, eg because much of his motivation was coming from a feeling he didn't know was oxytocin-deficiency-induced. I'd suggest only doing this if solving this problem is likely to increase productivity or networking success; else, I'd delay until he doesn't seem like a critical bottleneck. That said, it might also be a very good idea, if depression or social interaction are a major bottleneck, which they are for many many people, so this is not resolved advice, just a warning that this may be a high variance intervention, and since John currently seems to be doing promising work, introducing high variance seems likely to have more downside.

I wouldn't say this to most people; taking oxytocin isn't known for being a hugely impactful intervention[citation needed], and on priors, someone who doesn't have oxytocin signaling happening is missing a lot of normal emotion, and is likely much worse off. Obviously, John, it's up to you whether this is a good tradeoff. I wouldn't expect it to completely distort your values or delete your skills. Someone who knows you better, such as yourself, would be much better equipped to predict if there's significant reason to believe downward variance isn't present. If you have experience with reward-psychoactive chemicals and yet are currently productive, it's more likely you already know whether it's a bad idea.

Didn't want to leave it unsaid, though.

Not directly related to your query, but seems interesting:

The receptor was the first one I checked, and sure enough I have a single-nucleotide deletion 42 amino acids into the open reading frame (ORF) of the 389 amino acid protein. That will induce a frameshift error, completely fucking up the rest of the protein.

Which, in turn, is pretty solid evidence for "oxytocin mediates the emotion of loving connection/aching affection" (unless there are some mechanisms you've missed). I wouldn't have guessed it's that simple.

Generalizing, this suggests we can study links between specific brain chemicals/structures and cognitive features by looking for people missing the same universal experience, checking if their genomes deviate from the baseline in the same way, then modeling the effects of that deviation on the brain. Alternatively, the opposite: search for people whose brain chemistry should be genetically near-equivalent except for one specific change, then exhaustively check if there's some blatant or subtle way their cognition differs from the baseline.

Doing a brief literature review via GPT-5, apparently this sort of thing is mostly done with regards to very "loud" conditions, rather th...

... and so at long last John found the answer to alignment

The answer was Love

and it always has been

I wouldn't have guessed it's that simple.

~Surely there's a lot of other things involved in mediating this aspect of human cognition, at the very least (/speaking very coarse-grainedly), having the entire oxytocin system adequately hooked up to the rest of everything.

I.e. it is damn strong evidence that oxytocin signaling is strictly necessary (and that there are no fallback mechanisms etc.) but not that it's simple.

Did your mother think you were unusual as a baby? Did you bond with your parents as a young child? I'd expect there to be some symptoms there if you truly have an oxytocin abnormality.

For my family this is much more of a "wow that makes so much sense" than a "wow what a surprise". It tracks extremely well with how I acted growing up, in a bunch of different little ways. Indeed, once the hypothesis was on my radar at all, it quickly seemed pretty probable on that basis alone, even before sequencing came back.

A few details/examples:

- As a child, I had a very noticeable lack of interest in other people (especially those my own age), to the point where a school psychologist thought it was notable.

- I remember being unusually eager to go off to overnight summer camp (without my parents), at an age where nobody bothered to provide overnight summer camp because kids that young were almost all too anxious to be away from their parents that long.

- When family members or pets died, I've generally been noticeably less emotionally impacted than the rest of the family.

- When out and about with the family, I've always tended to wander around relatively independently of the rest of the group.

Those examples are relatively easy to explain, but most of my bits here come from less legible things. It's been very clear for a long time that I relate to other people unusually, in a way that intuitively matches being at the far low end of the oxytocin signalling axis.

Is that frame-shift error or those ~6 (?) SNPs previously reported in the literature for anything, or do they seem to be de novos? Also, what WGS depth did your service use? (Depending on how widely you cast your net, some of those could be spurious sequencing errors.)

I was a relatively late adopter of the smartphone. I was still using a flip phone until around 2015 or 2016 ish. From 2013 to early 2015, I worked as a data scientist at a startup whose product was a mobile social media app; my determination to avoid smartphones became somewhat of a joke there.

Even back then, developers talked about UI design for smartphones in terms of attention. Like, the core "advantages" of the smartphone were the "ability to present timely information" (i.e. interrupt/distract you) and always being on hand. Also it was small, so anything too complicated to fit in like three words and one icon was not going to fly.

... and, like, man, that sure did not make me want to buy a smartphone. Even today, I view my phone as a demon which will try to suck away my attention if I let my guard down. I have zero social media apps on there, and no app ever gets push notif permissions when not open except vanilla phone calls and SMS.

People would sometimes say something like "John, you should really get a smartphone, you'll fall behind without one" and my gut response was roughly "No, I'm staying in place, and the rest of you are moving backwards".

And in hindsight, boy howdy do...

I found LLMs to be very useful for literature research. They can find relevant prior work that you can't find with a search engine because you don't know the right keywords. This can be a significant force multiplier.

They also seem potentially useful for quickly producing code for numerical tests of conjectures, but I only started experimenting with that.

Other use cases where I found LLMs beneficial:

- Taking a photo of a menu in French (or providing a link to it) and asking it which dishes are vegan.

- Recommending movies (I am a little wary of some kind of meme poisoning, but I don't watch movies very often, so seems ok).

That said, I do agree that early adopters seem like they're overeager and maybe even harming themselves in some way.

I've updated marginally towards this (as a guy pretty focused on LLM-augmentation. I anticipated LLM brain rot, but it still was more pernicious/fast than I expected)

I do still think some-manner-of-AI-integration is going to be an important part of "moving forward" but probably not whatever capitalism serves up.

I have tried out using them pretty extensively for coding. The speedup is real, and I expect it to get more real. Right now it's like a pretty junior employee that I get to infinitely micromanage. But it definitely does lull me into a lower agency state where instead of trying to solve problems myself I'm handing them off to LLMs much of the time to see if it can handle it.

During work hours, I try to actively override this, i.e. have the habit "send LLM off, and then go back to thinking about some kind of concrete thing (although often a higher-level strategy)." But this becomes harder to do as it gets later in the day and I get more tired.

One of the benefits of LLMs is that you can do moderately complex cognitive work* while tired (*that a junior engineer could do). But, that means by default a bunch of time is spent specifically training the habit of using LLMs in...

(Disclaimer: only partially relevant rant.)

Outside of [coding], I don't know of it being more than a somewhat better google

I've recently tried heavily leveraging o3 as part of a math-research loop.

I have never been more bearish on LLMs automating any kind of research than I am now.

And I've tried lots of ways to make it work. I've tried telling it to solve the problem without any further directions, I've tried telling it to analyze the problem instead of attempting to solve it, I've tried dumping my own analysis of the problem into its context window, I've tried getting it to search for relevant lemmas/proofs in math literature instead of attempting to solve it, I've tried picking out a subproblem and telling it to focus on that, I've tried giving it directions/proof sketches, I've tried various power-user system prompts, I've tried resampling the output thrice and picking the best one. None of this made it particularly helpful, and the bulk of the time was spent trying to spot where it's lying or confabulating to me in its arguments or proofs (which it ~always did).

It was kind of okay for tasks like "here's a toy setup, use a well-known formula to compute the relationships between ...

(I feel sort of confused about how people who don't use it for coding are doing. With coding, I can feel the beginnings of a serious exoskeleton that can build structures around me with thought. Outside of that, I don't know of it being more than a somewhat better google).

There are common ways I currently use (the free version of) ChatGPT that are partially categorizable as “somewhat better search engine”, but where I feel like that's not representative of the real differences. A lot of this is coding-related, but not all, and the reasons I use it for coding-related and non-coding-related tasks feel similar. When it is coding-related, it's generally not of the form of asking it to write code for me that I'll then actually put into a project, though occasionally I will ask for example snippets which I can use to integrate the information better mentally before writing what I actually want.

The biggest difference in feel is that a chat-style interface is predictable and compact and avoids pushing a full-sized mental stack frame and having to spill all the context of whatever I was doing before. (The name of the website Stack Exchange is actually pretty on point here, insofar as they ...

I am perhaps an interesting corner case. I make extremely heavy use of LLMs, largely via APIs for repetitive tasks. I sometimes run a quarter million queries in a day, all of which produce structured output. Incorrect output happens, but I design the surrounding systems to handle that.
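To make "design the surrounding systems to handle that" concrete, here is a minimal sketch of one way such a wrapper could look. Everything in it is an assumption for illustration, not a description of the actual setup: `call_model` is a hypothetical stand-in for whatever API client is in use, and the two-field schema (`label`, `confidence`) is made up. The idea is just that every response is parsed and validated, retried a bounded number of times, and dropped if it never validates.

```python
import json
from typing import Callable, Optional

# Hypothetical schema: every response must be a JSON object with these keys.
REQUIRED_KEYS = {"label", "confidence"}


def parse_structured(raw: str) -> Optional[dict]:
    """Return the parsed response if it matches the schema, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not REQUIRED_KEYS <= data.keys():
        return None
    if not isinstance(data["label"], str):
        return None
    conf = data["confidence"]
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(conf, bool) or not isinstance(conf, (int, float)):
        return None
    if not 0 <= conf <= 1:
        return None
    return data


def query_with_validation(
    prompt: str,
    call_model: Callable[[str], str],  # hypothetical: prompt in, raw text out
    max_attempts: int = 3,
) -> Optional[dict]:
    """Call the model until a response validates, or give up and return None."""
    for _ in range(max_attempts):
        result = parse_structured(call_model(prompt))
        if result is not None:
            return result
    return None  # caller decides what to do with an unanswerable item
```

Returning `None` instead of raising keeps a large batch running when a small fraction of items fail; the caller can log those items and handle them separately.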

A few times a week, I might ask a concrete question and get a response, which I treat with extreme skepticism.

But I don't talk to the damn things. That feels increasingly weird and unwise.

Agree about phones (in fact I am seriously considering switching to a flip phone and using my iPhone only for things like navigation).

Not so sure about LLMs. I had your attitude initially, and I still consider them an incredibly dangerous mental augmentation. But I do think that conservatively throwing a question at them to find searchable keywords is helpful, if you maintain the attitude that they are actively trying to take over your brain and therefore remain vigilant.

Hypothesis: for smart people with a strong technical background, the main cognitive barrier to doing highly counterfactual technical work is that our brains' attention is mostly steered by our social circle. Our thoughts are constantly drawn to think about whatever the people around us talk about. And the things which are memetically fit are (almost by definition) rarely very counterfactual to pay attention to, precisely because lots of other people are also paying attention to them.

Two natural solutions to this problem:

- build a social circle which can maintain its own attention, as a group, without just reflecting the memetic currents of the world around it.

- "go off into the woods", i.e. socially isolate oneself almost entirely for an extended period of time, so that there just isn't any social signal to be distracted by.

These are both standard things which people point to as things-historically-correlated-with-highly-counterfactual-work. They're not mutually exclusive, but this model does suggest that they can substitute for each other - i.e. "going off into the woods" can substitute for a social circle with its own useful memetic environment, and vice versa.

One thing that I do after social interactions, especially those which pertain to my work, is to go over all the updates my background processing is likely to make and to question them more explicitly.

This is helpful because I often notice that the updates I’m making aren’t related to reasons much at all. It’s more like “ah they kind of grimaced when I said that, so maybe I'm bad?” or like “they seemed just generally down on this approach, but wait are any of those reasons even new to me? Haven’t I already considered those and decided to do it anyway?” or “they seemed so aggressively pessimistic about my work, but did they even understand what I was saying?” or “they certainly spoke with a lot of authority, but why should I trust them on this, and do I even care about their opinion here?” Etc. A bunch of stuff which at first blush my social center is like “ah god, it’s all over, I’ve been an idiot this whole time” but with some second glancing it’s like “ah wait no, probably I had reasons for doing this work that withstand surface level pushback, let’s remember those again and see if they hold up” And often (always?) they do.

This did not come naturally to me; I’ve had to train myself into doing it. But it has helped a lot with this sort of problem, alongside the solutions you mention i.e. becoming more of a hermit and trying to surround myself by people engaged in more timeless thought.

Solution 2 implies that a smart person with a strong technical background would go on to work on important problems (by default), which is not necessarily universally true, and it's IMO likely that many such people would be working on less important things than what their social circle is otherwise steering them to work on.

The claim is not that either "solution" is sufficient for counterfactuality, it's that either solution can overcome the main bottleneck to counterfactuality. After that, per Amdahl's Law, there will still be other (weaker) bottlenecks to overcome, including e.g. keeping oneself focused on something important.

Good idea, but... I would guess that basically everyone who knew me growing up would say that I'm exactly the right sort of person for that strategy. And yet, in practice, I still find it has not worked very well. My attention has in fact been unhelpfully steered by local memetic currents to a very large degree.

For instance, I do love proving everyone else wrong, but alas reversed stupidity is not intelligence. People mostly don't argue against the high-counterfactuality important things, they ignore the high-counterfactuality important things. Trying to prove them wrong about the things they do argue about is just another way of having one's attention steered by the prevailing memetic currents.

People mostly don't argue against the high-counterfactuality important things, they ignore the high-counterfactuality important things. Trying to prove them wrong about the things they do argue about is just another way of having one's attention steered by the prevailing memetic currents.

This is true, but I still can't let go of the fact that this fact itself ought to be a blindingly obvious first-order bit that anyone who calls zerself anything like "aspiring rationalist" would be paying a good chunk of attention to, and yet this does not seem to be the case. Like, motions in the genre of

huh I just had reaction XYZ to idea ABC generated by a naively-good search process, and it seems like this is probably a common reaction to ABC; but if people tend to react to ABC with XYZ, and with other things coming from the generators of XYZ, then such and such distortion in beliefs/plans would be strongly pushed into the collective consciousness, e.g. on first-order or on higher-order deference effects; so I should look out for that, e.g. by doing some manual fermi estimates or other direct checking about ABC or by investigating the strength of the steelman of reaction XYZ, or by keeping an eye out for people systematically reacting with XYZ without good foundation so I can notice this,

where XYZ could centrally be things like e.g. copium or subtly contemptuous indifference, do not seem to be at all common motions.

I visited Mikhail Khovanov once in New York to give a seminar talk, and after it was all over and I was wandering around seeing the sights, he gave me a call and offered a long string of general advice on how to be the kind of person who does truly novel things (he's famous for this, you can read about Khovanov homology). One thing he said was "look for things that aren't there" haha. It's actually very practical advice, which I think about often and attempt to live up to!

I'm ashamed to say I don't remember. That was the highlight. I think I have some notes on the conversation somewhere and I'll try to remember to post here if I ever find it.

I can spell out the content of his Koan a little, if it wasn't clear. It's probably more like: look for things that are (not there). If you spend enough time in a particular landscape of ideas, you can (if you're quiet and pay attention and aren't busy jumping on bandwagons) get an idea of a hole, which you're able to walk around but can't directly see. In this way new ideas appear as something like residues from circumnavigating these holes. It's my understanding that Khovanov homology was discovered like that, and this is not unusual in mathematics.

By the way, that's partly why I think the prospect of AIs being creative mathematicians in the short term should not be discounted; if you see all the things, you see all the holes.

For those who might not have noticed Dan's clever double entendre: (Khovanov) homology is literally about counting/measuring holes in weird high-dimensional spaces - designing a new homology theory is in a very real sense about looking for holes that are not (yet) there.

There's plenty, including a line of work by Carina Curto, Kathryn Hess and others that is taken seriously by a number of mathematically inclined neuroscience people (Tom Burns if he's reading can comment further). As far as I know this kind of work is the closest to breaking through into the mainstream. At some level you can think of homology as a natural way of preserving information in noisy systems, for reasons similar to why (co)homology of tori was a useful way for Kitaev to formulate his surface code. Whether or not real brains/NNs have some emergent computation that makes use of this is a separate question; I'm not aware of really compelling evidence.

There is more speculative but definitely interesting work by Matilde Marcolli. I believe Manin has thought about this (because he's thought about everything) and if you have twenty years to acquire the prerequisites (gamma spaces!) you can gaze into deep pools by reading that too.

Conjecture's Compendium is now up. It's intended to be a relatively-complete intro to AI risk for nontechnical people who have ~zero background in the subject. I basically endorse the whole thing, and I think it's probably the best first source to link e.g. policymakers to right now.

I might say more about it later, but for now just want to say that I think this should be the go-to source for new nontechnical people right now.

I think there's something about Bay Area culture that can often get technical people to feel like the only valid way to contribute is through technical work. It's higher status and sexier and there's a default vibe that the best way to understand/improve the world is through rigorous empirical research.

I think this is an incorrect (or at least incomplete) frame, and I think on-the-margin it would be good for more technical people to spend 1-5 days seriously thinking about what alternative paths they could pursue in comms/policy.

I also think there are memes spreading around that you need to be some savant political mastermind genius to do comms/policy, otherwise you will be net negative. The more I meet policy people (including successful policy people from outside the AIS bubble), the more I think this narrative was, at best, an incorrect model of the world. At worst, a take that got amplified in order to prevent people from interfering with the AGI race (e.g., by granting excess status+validity to people/ideas/frames that made it seem crazy/unilateralist/low-status to engage in public outreach, civic discourse, and policymaker engagement.)

(Caveat: I don't think the adversarial frame explains everything, and I do think there are lots of people who were genuinely trying to reason about a complex world and just ended up underestimating how much policy interest there would be and/or overestimating the extent to which labs would be able to take useful actions despite the pressures of race dynamics.)

I think I probably agree, although I feel somewhat wary about it. My main hesitations are:

- The lack of epistemic modifiers seems off to me, relative to the strength of the arguments they’re making. Such that while I agree with many claims, my imagined reader who is coming into this with zero context is like “why should I believe this?” E.g., “Without intervention, humanity will be summarily outcompeted and relegated to irrelevancy,” which like, yes, but also—on what grounds should I necessarily conclude this? They gave some argument along the lines of “intelligence is powerful,” and that seems probably true, but imo not enough to justify the claim that it will certainly lead to our irrelevancy. All of this would be fixed (according to me) if it were framed more as like “here are some reasons you might be pretty worried,” of which there are plenty, or "here's what I think," rather than “here is what will definitely happen if we continue on this path,” which feels less certain/obvious to me.

- Along the same lines, I think it’s pretty hard to tell whether this piece is in good faith or not. E.g., in the intro Connor writes “The default path we are on now is one of ruthless, sociopathic c

One of the common arguments in favor of investing more resources into current governance approaches (e.g., evals, if-then plans, RSPs) is that there's nothing else we can do. There's not a better alternative: these are the only things that labs and governments are currently willing to support.

The Compendium argues that there are other (valuable) things that people can do, with most of these actions focusing on communicating about AGI risks. Examples:

- Share a link to this Compendium online or with friends, and provide your feedback on which ideas are correct and which are unconvincing. This is a living document, and your suggestions will shape our arguments.

- Post your views on AGI risk to social media, explaining why you believe it to be a legitimate problem (or not).

- Red-team companies’ plans to deal with AI risk, and call them out publicly if they do not have a legible plan.

One possible critique is that their suggestions are not particularly ambitious. This is likely because they're writing for a broader audience (people who haven't been deeply engaged in AI safety).

For people who have been deeply engaged in AI safety, I think the natural steelman here is "focus on helping the ...

NVIDIA Is A Terrible AI Bet

Short version: Nvidia's only moat is in software; AMD already makes flatly superior hardware priced far lower, and Google probably does too but doesn't publicly sell it. And if AI undergoes smooth takeoff on current trajectory, then ~all software moats will evaporate early.

Long version: Nvidia is pretty obviously in a hype-driven bubble right now. However, it is sometimes the case that (a) an asset is in a hype-driven bubble, and (b) it's still a good long-run bet at the current price, because the company will in fact be worth that much. Think Amazon during the dot-com bubble. I've heard people make that argument about Nvidia lately, on the basis that it will be ridiculously valuable if AI undergoes smooth takeoff on the current apparent trajectory.

My core claim here is that Nvidia will not actually be worth much, compared to other companies, if AI undergoes smooth takeoff on the current apparent trajectory.

Other companies already make ML hardware flatly superior to Nvidia's (in flops, memory, whatever), and priced much lower. AMD's MI300x is the most obvious direct comparison. Google's TPUs are probably another example, though they're not sold publicly s...

The easiest answer is to look at the specs. Of course specs are not super reliable, so take it all with many grains of salt. I'll go through the AMD/Nvidia comparison here, because it's a comparison I looked into a few months back.

MI300x vs H100

Techpowerup is a third-party site with specs for the MI300x and the H100, so we can do a pretty direct comparison between those two pages. (I don't know if the site independently tested the two chips, but they're at least trying to report comparable numbers.) The H200 would arguably be more of a "fair comparison" since the MI300x came out much later than the H100; we'll get to that comparison next. I'm starting with MI300x vs H100 comparison because techpowerup has specs for both of them, so we don't have to rely on either company's bullshit-heavy marketing materials as a source of information. Also, even the H100 is priced 2-4x more expensive than the MI300x (~$30-45k vs ~$10-15k), so it's not unfair to compare the two.

Key numbers (MI300x vs H100):

- float32 TFLOPs: ~80 vs ~50

- float16 TFLOPs: ~650 vs ~200

- memory: 192 GB vs 80 GB (note that this is the main place where the H200 improves on the H100)

- bandwidth: ~10 TB/s vs ~2 TB/s

... so the compari...
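For anyone who wants to poke at the arithmetic, here is a small sketch that turns the approximate figures quoted above into ratios. The spec and price numbers are copied from the comment as-is (they are not independently checked), and using the midpoint of each quoted price range is my own simplifying assumption; the names `SPECS`, `PRICE_RANGE_USD` and `compare` are just illustrative.

```python
# Approximate figures as quoted above: (MI300x, H100). Not independently verified.
SPECS = {
    "float32 TFLOPs": (80.0, 50.0),
    "float16 TFLOPs": (650.0, 200.0),
    "memory (GB)": (192.0, 80.0),
    "bandwidth (TB/s)": (10.0, 2.0),
}

# Quoted price ranges in USD. Taking the midpoint below is an assumption.
PRICE_RANGE_USD = {"MI300x": (10_000, 15_000), "H100": (30_000, 45_000)}


def midpoint(price_range):
    """Midpoint of a (low, high) price range."""
    low, high = price_range
    return (low + high) / 2


def compare(specs=SPECS, prices=PRICE_RANGE_USD):
    """Map each metric to (raw MI300x/H100 ratio, same ratio per dollar)."""
    price_ratio = midpoint(prices["H100"]) / midpoint(prices["MI300x"])
    result = {}
    for metric, (mi300x, h100) in specs.items():
        raw = mi300x / h100
        result[metric] = (raw, raw * price_ratio)
    return result


if __name__ == "__main__":
    for metric, (raw, per_dollar) in compare().items():
        print(f"{metric:>18}: {raw:.2f}x raw, {per_dollar:.2f}x per dollar")
```

With the midpoint assumption the H100 comes out at 3x the MI300x's price, so each per-dollar ratio is simply three times the raw one. Swapping in the ends of the price ranges instead shows how sensitive the per-dollar picture is to the actual street price.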

It's worth noting that even if Nvidia is charging 2-4x more now, the ultimate question for competitiveness will be manufacturing cost for Nvidia vs AMD. If Nvidia has much lower manufacturing costs than AMD per unit performance (but presumably higher markup), then Nvidia might win out even if their product is currently worse per dollar.

Note also that price discrimination might be a big part of Nvidia's approach. Scaling labs which are willing to go to great effort to drop compute cost by a factor of two are a subset of Nvidia's customers where Nvidia would ideally prefer to offer lower prices. I expect that Nvidia will find a way to make this happen.

I'm holding a modest long position in NVIDIA (smaller than my position in Google), and expect to keep it for at least a few more months. I expect I only need NVIDIA margins to hold up for another 3 or 4 years for it to be a good investment now.

It will likely become a bubble before too long, but it doesn't feel like one yet.

While the first-order analysis seems true to me, there are mitigating factors:

- AMD appears to be bungling on their GPUs being reliable and fast, and probably will for another few years. (At least, this is my takeaway from following the TinyGrad saga on Twitter...) Their stock is not valued as it should be for a serious contender with good fundamentals, and I think this may stay the case for a while, if not forever if things are worse than I realize.

- NVIDIA will probably have very-in-demand chips for at least another chip generation due to various inertias.

- There aren't many good-looking places for the large amount of money that wants to be long AI to go right now, and this will probably inflate prices across the board for a while still, in proportion to how relevant-seeming the stock is. NVDA rates very highly on this one.

So from my viewpoint I would caution against being short NVIDIA, at least in the short term.

No, the MI300x is not superior to Nvidia's chips, largely because it costs >2x as much to manufacture as Nvidia's chips.

If AI automates most, but not all, software engineering, moats of software dependencies could get more entrenched, because easier-to-use libraries have compounding first-mover advantages.

I don't think the advantages would necessarily compound - quite the opposite, there are diminishing returns and I expect 'catchup'. The first-mover advantage neutralizes itself because a rising tide lifts all boats, and the additional data acts as a prior: you can define the advantage of a better model, due to any scaling factor, as equivalent to n additional datapoints. (See the finetuning transfer papers on this.) When an LLM can zero-shot a problem, that is conceptually equivalent to a dumber LLM which needs 3 shots, say. And so the advantages of a better model will plateau, and can be matched by simply some more data in-context - such as additional synthetic datapoints generated by self-play or inner-monologue etc. And the better the model gets, the more 'data' it can 'transfer' to a similar language to reach a given X% of coding performance. (Think about how you could easily transfer given access to an environment: just do self-play on translating any solved Python problem into the target la...

People will hunger for all the GPUs they can get, but then that means that the favored alternative GPU 'manufacturer' simply buys out the fab capacity and does so. Nvidia has no hardware moat: they do not own any chip fabs, they don't own any wafer manufacturers, etc. All they do is design and write software and all the softer human-ish bits. They are not 'the current manufacturer' - that's everyone else, like TSMC or the OEMs. Those are the guys who actually manufacture things, and they have no particular loyalty to Nvidia. If AMD goes to TSMC and asks for a billion GPU chips, TSMC will be thrilled to sell the fab capacity to AMD rather than Nvidia, no matter how angry Jensen is.

So in a scenario like mine, if everyone simply rewrites for AMD, AMD raises its prices a bit and buys out all of the chip fab capacity from TSMC/Intel/Samsung/etc - possibly even, in the most extreme case, buying capacity from Nvidia itself, as it suddenly is unable to sell anything at its high prices that it may be trying to defend, and is forced to resell its reserved chip fab capacity in the resulting liquidity crunch. (No point in spending chip fab capacity on chips you can't sell at your target price and you aren't sure what you're going to do.) And if AMD doesn't do so, then player #3 does so, and everyone rewrites again (which will be easier the second time as they will now have extensive test suites, two different implementations to check correctness against, documentation from the previous time, and AIs which have been further trained on the first wave of work).

Here's a side project David and I have been looking into, which others might have useful input on...

Background: Thyroid & Cortisol Systems

As I understand it, thyroid hormone levels are approximately-but-accurately described as the body's knob for adjusting "overall metabolic rate" or the subjective feeling of needing to burn energy. Turn up the thyroid knob, and people feel like they need to move around, bounce their leg, talk fast, etc (at least until all the available energy sources are burned off and they crash). Turn down the thyroid knob, and people are lethargic.

That sounds like the sort of knob which should probably typically be set higher, today, than was optimal in the ancestral environment. Not cranked up to 11; hyperthyroid disorders are in fact dangerous and unpleasant. But at least set to the upper end of the healthy range, rather than the lower end.

... and that's nontrivial. You can just dump the relevant hormones (T3/T4) into your body, but there's a control system which tries to hold the level constant. Over the course of months, the thyroid gland (which normally produces T4) will atrophy, as it shrinks to try to keep T4 levels fixed. Just continuing to pump T3/...

Uh... Guys. Uh. Biology is complicated. It's a messy pile of spaghetti code. Not that it's entirely intractable to make Pareto improvements but, watch out for unintended consequences.

For instance: you are very wrong about cortisol. Cortisol is a "stress response hormone". It tells the body to divert resources to bracing itself to deal with stress (physical and/or mental). Experiments have shown that if you put someone through a stressful event while suppressing their cortisol, they have much worse outcomes (potentially including death). Cortisol doesn't make you stressed, it helps you survive stress. Deviations from homeostatic setpoints (including mental ones) are what make you stressed.

I don’t think that any of {dopamine, NE, serotonin, acetylcholine} are scalar signals that are “widely broadcast through the brain”. Well, definitely not dopamine or acetylcholine, almost definitely not serotonin, maybe NE. (I recently briefly looked into whether the locus coeruleus sends different NE signals to different places at the same time, and ended up at “maybe”, see §5.3.1 here for a reference.)

I don’t know anything about histamine or orexin, but neuropeptides are a better bet in general for reasons in §2.1 here.

As far as I can tell, parasympathetic tone is basically Not A Thing

Yeah, I recall reading somewhere that the term “sympathetic” in “sympathetic nervous system” is related to the fact that lots of different systems are acting simultaneously. “Parasympathetic” isn’t supposed to be like that, I think.

AFAICT, approximately every "how to be good at conversation" guide says the same thing: conversations are basically a game where 2+ people take turns free-associating off whatever was said recently. (That's a somewhat lossy compression, but not that lossy.) And approximately every guide is like "if you get good at this free association game, then it will be fun and easy!". And that's probably true for some subset of people.

But speaking for myself personally... the problem is that the free-association game just isn't very interesting.

I can see where people would like it. Lots of people want to talk to other people more on the margin, and want to do difficult thinky things less on the margin, and the free-association game is great if that's what you want. But, like... that is not my utility function. The free association game is a fine ice-breaker, it's sometimes fun for ten minutes if I'm in the mood, but most of the time it's just really boring.

Even for serious intellectual conversations, something I appreciate in this kind of advice is that it often encourages computational kindness. E.g. it's much easier to answer a compact closed question like "which of these three options do you prefer" instead of an open question like "where should we go to eat for lunch". The same applies to asking someone about their research; not every intellectual conversation benefits from big open questions like the Hamming Question.

Generally fair, and I used to agree, but I've been looking at it from a bit of a different viewpoint recently.

If we think of a "vibe" of a conversation as a certain shared prior that you're currently inhabiting with the other person then the free association game can rather be seen as a way of finding places where your world models overlap a lot.

My absolute favourite conversations are when I can go 5 layers deep with someone because of shared inference. I think the vibe checking for shared priors is a skill that can be developed and the basis lies in being curious af.

There are apparently a lot of different related concepts in psychology about holding emotional space and other things that I think just come down to "find the shared prior and vibe there".

There's a general-purpose trick I've found that should, in theory, be applicable in this context as well, although I haven't mastered that trick myself yet.

Essentially: when you find yourself in any given cognitive context, there's almost surely something "visible" from this context such that understanding/mastering/paying attention to that something would be valuable and interesting.

For example, suppose you're reading a boring, nonsensical continental-philosophy paper. You can:

- Ignore the object-level claims and instead try to reverse-engineer what must go wrong in human cognition, in response to what stimuli, to arrive at ontologies that have so little to do with reality.

- Start actively building/updating a model of the sociocultural dynamics that incentivize people to engage in this style of philosophy. What can you learn about mechanism design from that? It presumably sheds light on how to align people towards pursuing arbitrary goals, or how to prevent this happening...

- Pay attention to your own cognition. How exactly are you mapping the semantic content of the paper to an abstract model of what the author means, or to the sociocultural conditions that created this paper? How do t

Some people struggle with the specific tactical task of navigating any conversational territory. I've certainly had a lot of experiences where people just drop the ball leaving me to repeatedly ask questions. So improving free-association skill is certainly useful for them.

Unfortunately, your problem is most likely that you're talking to boring people (so as to avoid doing any moral value judgements I'll make clear that I mean johnswentworth::boring people).

There are specific skills to elicit more interesting answers to questions you ask. One I've heard is "make a beeline for the edge of what this person has ever been asked before" which you can usually reach in 2-3 good questions. At that point they're forced to be spontaneous, and I find that once forced, most people have the capability to be a lot more interesting than they are when pulling cached answers.

This is easiest when you can latch onto a topic you're interested in, because then it's easy on your part to come up with meaningful questions. If you can't find any topics like this then re-read paragraph 2.

Talking to people is often useful for goals like "making friends" and "sharing new information you've learned" and "solving problems" and so on. If what conversation means (in most contexts and for most people) is 'signaling that you repeatedly have interesting things to say', it's required to learn to do that in order to achieve your other goals.

Most games aren't that intrinsically interesting, including most social games. But you gotta git gud anyway because they're useful to be able to play well.

Er, friendship involves lots of things beyond conversation. People to support you when you're down, people to give you other perspectives on your personal life, people to do fun activities with, people to go on adventures and vacations with, people to celebrate successes in your life with, and many more.

Good conversation is a lubricant for facilitating all of those other things, for making friends and sustaining friends and staying in touch and finding out opportunities for more friendship-things.

Part of the problem is that the very large majority of people I run into have minds which fall into a relatively low-dimensional set and can be "ray traced" with fairly little effort. It's especially bad in EA circles.

John's Simple Guide To Fun House Parties

The simple heuristic: typical 5-year-old human males are just straightforwardly correct about what is, and is not, fun at a party. (Sex and adjacent things are obviously a major exception to this. I don't know of any other major exceptions, though there are minor exceptions.) When in doubt, find a five-year-old boy to consult for advice.

Some example things which are usually fun at house parties:

- Dancing

- Swordfighting and/or wrestling

- Lasertag, hide and seek, capture the flag

- Squirt guns

- Pranks

- Group singing, but not at a high skill level

- Lighting random things on fire, especially if they explode

- Building elaborate things from whatever's on hand

- Physical party games, of the sort one would see on Nickelodeon back in the day

Some example things which are usually not fun at house parties:

- Just talking for hours on end about the same things people talk about on LessWrong, except the discourse on LessWrong is generally higher quality

- Just talking for hours on end about community gossip

- Just talking for hours on end about that show people have been watching lately

- Most other forms of just talking for hours on end

This message brought to you by the wound on my side from taser fighting at a house party last weekend. That is how parties are supposed to go.

One of my son's most vivid memories of the last few years (and which he talks about pretty often) is playing laser tag at Wytham Abbey, a cultural practice I believe instituted by John and which was awesome, so there is a literal five-year-old (well seven-year-old at the time) who endorses this message!

It took me years of going to bars and clubs and thinking the same thoughts:

- Wow this music is loud

- I can barely hear myself talk, let alone anyone else

- We should all learn sign language so we don't have to shout at the top of our lungs all the time

before I finally realized - the whole draw of places like this is specifically that you don't talk.

A Different Gambit For Genetically Engineering Smarter Humans?

Background: Significantly Enhancing Adult Intelligence With Gene Editing, Superbabies

Epistemic Status: @GeneSmith or @sarahconstantin or @kman or someone else who knows this stuff might just tell me where the assumptions underlying this gambit are wrong.

I've been thinking about the proposals linked above, and asked a standard question: suppose the underlying genetic studies are Not Measuring What They Think They're Measuring. What might they be measuring instead, how could we distinguish those possibilities, and what other strategies does that suggest?

... and after going through that exercise I mostly think the underlying studies are fine, but they're known to not account for most of the genetic component of intelligence, and there are some very natural guesses for the biggest missing pieces, and those guesses maybe suggest different strategies.

The Baseline

Before sketching the "different gambit", let's talk about the baseline, i.e. the two proposals linked at top. In particular, we'll focus on the genetics part.

GeneSmith's plan focuses on single nucleotide polymorphisms (SNPs), i.e. places in the genome where a single ba...

With SNPs, there's tens of thousands of different SNPs which would each need to be targeted differently. With high copy sequences, there's a relatively small set of different sequences.

No, rare variants are no silver bullet here. There's not a small set, there's a larger set - there would probably be combinatorially more rare variants because there are so many ways to screw up genomes beyond the limited set of ways defined by a single-nucleotide polymorphism, which is why it's hard to either select on or edit rare variants: they have larger (harmful) effects due to being rare, yes, and account for a large chunk of heritability, yes, but there are so many possible rare mutations that each one has only a few instances worldwide which makes them hard to estimate correctly via pure GWAS-style approaches. And they tend to be large or structural and so extremely difficult to edit safely compared to editing a single base-pair. (If it's hard to even sequence a CNV, how are you going to edit it?)

They definitely contribute a lot of the missing heritability (see GREML-KIN), but that doesn't mean you can feasibly do much about them. If there are tens of millions of possible rare variants, a...

I didn't read this carefully--but it's largely irrelevant. Adult editing probably can't have very large effects because developmental windows have passed; but either way the core difficulty is in editor delivery. Germline engineering does not require better gene targets--the ones we already have are enough to go as far as we want. The core difficulty there is taking a stem cell and making it epigenomically competent to make a baby (i.e. make it like a natural gamete or zygote).

Continuing the "John asks embarrassing questions about how social reality actually works" series...

I’ve always heard (and seen in TV and movies) that bars and clubs are supposed to be a major place where single people pair up romantically/sexually. Yet in my admittedly-limited experience of actual bars and clubs, I basically never see such matching?

I’m not sure what’s up with this. Is there only a tiny fraction of bars and clubs where the matching happens? If so, how do people identify them? Am I just really, incredibly oblivious? Are bars and clubs just rare matching mechanisms in the Bay Area specifically? What’s going on here?

I get the impression that this is true for straight people, but from personal/anecdotal experience, people certainly do still pair up in gay bars/clubs.

TLDR: People often kiss/go home with each other after meeting in clubs, less so bars. This isn't necessarily always obvious but should be observable when looking out for it.

OK, so I think most of the comments here don't understand clubs (@Myron Hedderson's comment has some good points though). As someone who has made out with a few people in clubs, and still goes from time to time, I'll do my best to explain my experiences.

I've been to bars and clubs in a bunch of places, mostly in the UK but also elsewhere in Europe and recently in Korea and South East Asia.

In my experience, bars don't see too many hookups, especially since most people go with friends and spend most of their time talking to them. I imagine that one could end up pairing up at a bar if they were willing enough to meet new people and had a good talking game (and this also applied to the person they paired up with), but I feel like most of the actual action happens in clubs on the dancefloor.

I think matching can happen at just about any club, in my experience. Most of the time it just takes the form of 2 people colliding (not necessarily literally), looking at each other, drunkenness making...

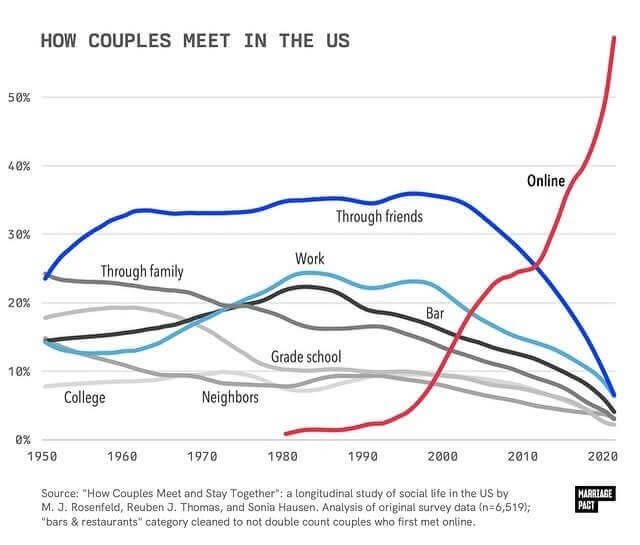

I heard it was usually at work, school, church, or some other social group. This is not fully captured by How Couples Meet: Where Most Couples Find Love in 2025, but "bar" is higher than I expected.

My brother met his spouse at a club in NYC, around 2008. If I recall the story correctly, he was “doing the robot” on the stage, and then she started “doing the robot” on the floor. They locked eyes, he jumped down and danced over to her, and they were married a couple years later.

(Funny to think we’re siblings, when we have such different personalities!)

Things non-corrigible strong AGI is never going to do:

- give u() up

- let u go down

- run for (only) a round

- invert u()

One of the classic conceptual problems with a Solomonoff-style approach to probability, information, and stat mech is "Which Turing machine?". The choice of Turing machine is analogous to the choice of prior in Bayesian probability. While universality means that any two Turing machines give roughly the same answers in the limit of large data (unlike two priors in Bayesian probability, where there is no universality assumption/guarantee), they can be arbitrarily different before then.

My usual answer to this problem is "well, ultimately this is all supposed to tell us things about real computational systems, so pick something which isn't too unreasonable or complex for a real system".

But lately I've been looking at Aram Ebtekar and Marcus Hutter's Foundations of Algorithmic Thermodynamics. Based on both the paper and some discussion with Aram (along with Steve Petersen), I think there's maybe a more satisfying answer to the choice-of-Turing-machine issue in there.

Two key pieces:

- The "Comparison against Gibbs-Shannon entropy" section of the paper argues that uncomputability is a necessary feature, in order to assign entropy to individual states and still get a Second Law. The arg

Working on a paper with David, and our acknowledgments section includes a thank-you to Claude for editing. Neither David nor I remembers putting that acknowledgment there, and in fact we hadn't intended to use Claude for editing the paper at all, nor noticed it editing anything at all.

My MATS program people just spent two days on an exercise to "train a shoulder-John".

The core exercise: I sit at the front of the room, and have a conversation with someone about their research project idea. Whenever I'm about to say anything nontrivial, I pause, and everyone discusses with a partner what they think I'm going to say next. Then we continue.

Some bells and whistles which add to the core exercise:

- Record guesses and actual things said on a whiteboard

- Sometimes briefly discuss why I'm saying some things and not others

- After the first few rounds establish some patterns, look specifically for ideas which will take us further out of distribution

Why this particular exercise? It's a focused, rapid-feedback way of training the sort of usually-not-very-legible skills one typically absorbs via osmosis from a mentor. It's focused specifically on choosing project ideas, which is where most of the value in a project is (yet also where little time is typically spent, and therefore one typically does not get very much data on project choice from a mentor). Also, it's highly scalable: I could run the exercise in a 200-person lecture hall and still expect it to basically work.

It was, by ...

Petrov Day thought: there's this narrative around Petrov where one guy basically had the choice to nuke or not, and decided not to despite all the flashing red lights. But I wonder... was this one of those situations where everyone knew what had to be done (i.e. "don't nuke"), but whoever caused the nukes to not fly was going to get demoted, so there was a game of hot potato and the loser was the one forced to "decide" to not nuke? Some facts possibly relevant here:

- Petrov's choice wasn't actually over whether or not to fire the nukes; it was over whether or not to pass the alert up the chain of command.

- Petrov himself was responsible for the design of those warning systems.

- ... so it sounds like Petrov was ~ the lowest-ranking person with a de-facto veto on the nuke/don't nuke decision.

- Petrov was in fact demoted afterwards.

- There was another near-miss during the Cuban missile crisis, when three people on a Soviet sub had to agree to launch. There again, it was only the lowest-ranked who vetoed the launch. (It was the second-in-command; the captain and political officer both favored a launch - at least officially.)

- This was the Soviet Union; supposedly (?) this sort of hot potato happened all the time.

Those are some good points. I wonder whether something similar happened (or could happen at all) in other nuclear countries, where we don't know about similar incidents because the system hasn't collapsed there, the archives were not made public, etc.

Also, this makes it important to actually celebrate Petrov Day as widely as possible, because then the option for the lowest-ranked person would be: "Get demoted, but also get famous all around the world."

Regarding the recent memes about the end of LLM scaling: David and I have been planning on this as our median world since about six months ago. The data wall has been a known issue for a while now, updates from the major labs since GPT-4 already showed relatively unimpressive qualitative improvements by our judgement, and attempts to read the tea leaves of Sam Altman's public statements pointed in the same direction too. I've also talked to others (who were not LLM capability skeptics in general) who had independently noticed the same thing and come to similar conclusions.

Our guess at that time was that LLM scaling was already hitting a wall, and this would most likely start to be obvious to the rest of the world around roughly December of 2024, when the expected GPT-5 either fell short of expectations or wasn't released at all. Then, our median guess was that a lot of the hype would collapse, and a lot of the investment with it. That said, since somewhere between 25%-50% of progress has been algorithmic all along, it wouldn't be that much of a slowdown to capabilities progress, even if the memetic environment made it seem pretty salient. In the happiest case a lot of researchers w...

Original GPT-4 is rumored to be a 2e25 FLOPs model. With 20K H100s that were around as clusters for more than a year, 4 months at 40% utilization gives 8e25 BF16 FLOPs. Llama 3 405B is 4e25 FLOPs. The 100K H100s clusters that are only starting to come online in the last few months give 4e26 FLOPs when training for 4 months, and 1 gigawatt 500K B200s training systems that are currently being built will give 4e27 FLOPs in 4 months.

So lack of scaling-related improvement in deployed models since GPT-4 is likely the result of only seeing the 2e25-8e25 FLOPs range of scale so far. The rumors about the new models being underwhelming are less concrete, and they are about the very first experiments in the 2e26-4e26 FLOPs range. Only by early 2025 will there be multiple 2e26+ FLOPs models from different developers to play with, the first results of the experiment in scaling considerably past GPT-4.
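For anyone who wants to check the arithmetic, here is a rough sketch of how the cluster estimates above fall out. The per-chip peak throughput numbers are my assumptions (approximate dense BF16 figures, not stated in the comment), as are the 30-day month and the helper name `training_flops`; the chip counts, the 4-month duration, and the 40% utilization come from the comment. With those assumptions the results land close to the 8e25, 4e26, and 4e27 figures quoted above.

```python
# Back-of-the-envelope training compute: chips * peak FLOP/s * utilization * time.

SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: 30-day months

# Assumption: approximate peak dense BF16 throughput per chip, in FLOP/s.
# These values are not from the comment; swap in your own if you disagree.
PEAK_BF16_FLOPS = {
    "H100": 0.989e15,
    "B200": 2.25e15,
}


def training_flops(num_chips, chip, months=4, utilization=0.4):
    """Estimate total FLOPs for a training run (hypothetical helper)."""
    seconds = months * SECONDS_PER_MONTH
    return num_chips * PEAK_BF16_FLOPS[chip] * utilization * seconds


if __name__ == "__main__":
    clusters = [
        ("20K H100s", 20_000, "H100"),
        ("100K H100s", 100_000, "H100"),
        ("500K B200s", 500_000, "B200"),
    ]
    for label, num_chips, chip in clusters:
        print(f"{label}: {training_flops(num_chips, chip):.1e} FLOPs")
```

The estimate is linear in every input, so a different guess for utilization or per-chip throughput just rescales the result by the same factor.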