Shameful admission: after well over a decade on this site, I still don't really intuitively grok why I should expect agents to become better approximated by "single-minded pursuit of a top-level goal" as they gain more capabilities. Yes, some behaviors like getting resources and staying alive are useful in many situations, but that's not what I'm talking about. I'm talking about specifically the pressures that are supposed to inevitably push agents into the former of the following two main types of decision-making:

- Unbounded consequentialist maximization: The agent has one big goal that doesn't care about its environment. "I must make more paperclips forever, so I can't let anyone stop me, so I need power, so I need factories, so I need money, so I'll write articles with affiliate links." It's a long chain of "so" statements from now until the end of time.

- Homeostatic agent: The agent has multiple drives that turn on when needed to keep things balanced. "Water getting low: better get more. Need money for water: better earn some. Can write articles to make money." Each drive turns on, gets what it needs, and turns off without some ultimate cosmic purpose.
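For concreteness, the two decision rules above can be sketched in a few lines of Python. This is a toy under stated assumptions, not a claim about how any real agent is built: the `Drive` class, the `ACTIONS` table, the set points, and every number in it are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

State = dict[str, float]

# Hypothetical toy actions: each maps a variable name to the change it causes.
ACTIONS: dict[str, State] = {
    "idle": {},
    "drink": {"water": +5.0, "money": -1.0},
    "write_article": {"money": +3.0},
    "build_factory": {"paperclips": +10.0, "money": -2.0},
}


@dataclass(frozen=True)
class Drive:
    """A homeostatic drive: active only while its variable is below the set point."""
    variable: str
    set_point: float

    def is_active(self, state: State) -> bool:
        return state.get(self.variable, 0.0) < self.set_point


def apply_action(state: State, effects: State) -> State:
    """Return a copy of the state with the action's effects added in."""
    new_state = dict(state)
    for variable, delta in effects.items():
        new_state[variable] = new_state.get(variable, 0.0) + delta
    return new_state


def homeostatic_step(state: State, drives: list[Drive]) -> str:
    """Serve the first active drive (drives are in priority order); otherwise do nothing."""
    for drive in drives:
        if drive.is_active(state):
            # Pick the action that raises this drive's variable the most.
            return max(ACTIONS, key=lambda name: ACTIONS[name].get(drive.variable, 0.0))
    return "idle"  # every drive is satisfied, so there is nothing left to want


def maximizer_step(state: State, goal: str) -> str:
    """Always pick the action that leaves the most of `goal`; there is no 'enough'."""
    return max(ACTIONS, key=lambda name: apply_action(state, ACTIONS[name]).get(goal, 0.0))
```

With drives `[Drive("water", 10.0), Drive("money", 5.0)]`, the homeostatic rule returns `"drink"` when water is at 2, `"write_article"` when water is fine but money is at 1, and `"idle"` once both are above their set points. The maximizer rule with `goal="paperclips"` returns `"build_factory"` no matter how many paperclips already exist, which is the "long chain of so statements" in miniature.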

Both types show goal-directed behavior. But if you offered me a choice of which type of agent I'd rather work with, I'd choose the second type in a heartbeat. The homeostatic agent may betray me, but it will only do that if doing so satisfies one of its drives. This doesn't mean homeostatic agents never betray allies - they certainly might if their current drive state incentivizes it (or if for some reason they have a "betray the vulnerable" drive). But the key difference is predictability. I can reasonably anticipate when a homeostatic agent might work against me: when I'm standing between it and water when it's thirsty, or when it has a temporary resource shortage. These situations are concrete and contextual.

With unbounded consequentialists, the betrayal calculation extends across the entire future light cone. The paperclip maximizer might work with me for decades, then suddenly turn against me because its models predict this will yield 0.01% more paperclips in the cosmic endgame. This makes cooperation with unbounded consequentialists fundamentally unstable.

It's similar to how we've developed functional systems for dealing with humans pursuing their self-interest in business contexts. We expect people might steal if given easy opportunities, so we create accountability systems. We understand the basic drives at play. But it would be vastly harder to safely interact with someone whose sole mission was to maximize the number of sand crabs in North America - not because sand crabs are dangerous, but because predicting when your interests might conflict requires understanding their entire complex model of sand crab ecology, population dynamics, and long-term propagation strategies.

Some say smart unbounded consequentialists would just pretend to be homeostatic agents, but that's harder than it sounds. They'd need to figure out which drives make sense and constantly decide if breaking character is worth it. That's a lot of extra work.

As long as being able to cooperate with others is an advantage, it seems to me that homeostatic agents have considerable advantages, and I don't see a structural reason to expect that to stop being the case in the future.

Still, a lot of very smart people on LessWrong seem sure that unbounded consequentialism is somehow inevitable for advanced agents. Maybe I'm missing something? I've been reading the site for 15 years and still don't really get why they believe this. Feels like there's some key insight I haven't grasped yet.

Homeostatic agent

When triggered to act, are the homeostatic-agents-as-envisioned-by-you motivated to decrease the future probability of being moved out of balance, or prolong the length of time in which they will be in balance, or something along these lines?

If yes, they're unbounded consequentialist-maximizers under a paper-thin disguise.

If no, they are probably not powerful agents. Powerful agency is the ability to optimize distant (in space, time, or conceptually) parts of the world into some target state. If the agent only cares about climbing back down into the local-minimum-loss pit if it's moved slightly outside it, it's not going to be trying to be very agent-y, and won't be good at it.

Or, rather... It's conceivable for an agent to be "tool-like" in this manner, where it has an incredibly advanced cognitive engine hooked up to a myopic suite of goals. But only if it's been intelligently designed. If it's produced by crude selection/optimization pressures, then the processes that spit out "unambitious" homeostatic agents would fail to instill the advanced cognitive/agent-y skills into them.

As long as being able to cooperate with others is an advantage, it seems to me that homeostatic agents have considerable advantages

And a bundle of unbounded-consequentialist agents that have some structures for making cooperation between each other possible would have considerable advantages over a bundle of homeostatic agents.

When triggered to act, are the homeostatic-agents-as-envisioned-by-you motivated to decrease the future probability of being moved out of balance, or prolong the length of time in which they will be in balance, or something along these lines?

I expect[1] them to have a drive similar to "if my internal world-simulator predicts future sensory observations that are outside of my acceptable bounds, take actions to make the world-simulator predict within-acceptable-bounds sensory observations".

This maps reasonably well to one of the agent's drives being "decrease the future probability of being moved out of balance". Notably, though, it does not map well to that being the only drive of the agent, or to the drive being "minimize" rather than "decrease if above threshold". The specific steps I don't understand are:

- What pressure is supposed to push a homeostatic agent with multiple drives to elevate a specific "expected future quantity of some arbitrary resource" drive above all of its other drives and set the acceptable quantity value to some extreme

- Why we should expect that an agent that has been molded by that pressure would come to dominate its environment.
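The difference between "minimize" and "decrease if above threshold" can be made concrete with a small Python sketch. It is only an illustration under assumptions: the function names, the 0.05 acceptable-risk bound, and the predicted-risk numbers are all hypothetical, and the "world-simulator" is reduced to a lookup table of predicted risks per action.

```python
from typing import Callable


def threshold_pressure(predicted_risk: float, acceptable_risk: float = 0.05) -> float:
    """'Decrease if above threshold': zero pressure once the prediction is within bounds."""
    return max(0.0, predicted_risk - acceptable_risk)


def minimizing_pressure(predicted_risk: float) -> float:
    """'Minimize': any nonzero predicted risk still exerts pressure."""
    return predicted_risk


def choose_action(predictions: dict[str, float], pressure: Callable[[float], float]) -> str:
    """Pick the action whose predicted outcome carries the least pressure.

    Assumption: actions are listed from least to most drastic. min() returns
    the first minimal key, so ties go to the least drastic action.
    """
    return min(predictions, key=lambda action: pressure(predictions[action]))


# Hypothetical predicted probabilities of being pushed out of balance.
calm = {"do_nothing": 0.04, "stockpile_water": 0.03, "seize_all_water": 0.001}
alarmed = {"do_nothing": 0.20, "stockpile_water": 0.03, "seize_all_water": 0.001}
```

In the `calm` case the thresholded drive is already satisfied and picks `"do_nothing"`, while the minimizing drive picks `"seize_all_water"`. In the `alarmed` case the thresholded drive picks `"stockpile_water"`, the mildest action that gets back within bounds, and the minimizing drive again picks `"seize_all_water"`. The two questions above are about what would turn the first function into the second.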

If no, they are probably not powerful agents. Powerful agency is the ability to optimize distant (in space, time, or conceptually) parts of the world into some target state

Why use this definition of powerful agency? Specifically, why include the "target state" part of it? By this metric, evolutionary pressure is not powerful agency, because while it can cause massive changes in distant parts of the world, there is no specific target state. Likewise for e.g. corporations finding a market niche - to the extent that they have a "target state" it's "become a good fit for the environment".

Or, rather... It's conceivable for an agent to be "tool-like" in this manner, where it has an incredibly advanced cognitive engine hooked up to a myopic suite of goals. But only if it's been intelligently designed. If it's produced by crude selection/optimization pressures, then the processes that spit out "unambitious" homeostatic agents would fail to instill the advanced cognitive/agent-y skills into them.

I can think of a few ways to interpret the above paragraph with respect to humans, but none of them make sense to me[2] - could you expand on what you mean there?

And a bundle of unbounded-consequentialist agents that have some structures for making cooperation between each other possible would have considerable advantages over a bundle of homeostatic agents.

Is this still true if the unbounded consequentialist agents in question have limited predictive power, and each one has advantages in predicting the things that are salient to it? Concretely, can an unbounded AAPL share price maximizer cooperate with an unbounded maximizer for the number of sand crabs in North America without the AAPL-maximizer having a deep understanding of sand crab biology?

[1] Subject to various assumptions at least, e.g.

- The agent is sophisticated enough to have a future-sensory-perceptions simulator

- The use of the future-perceptions-simulator has been previously reinforced

- The specific way the agent is trying to change the outputs of the future-perceptions-simulator has been previously reinforced (e.g. I expect "manipulate your beliefs" to be chiseled away pretty fast when reality pushes back)

Still, all those assumptions usually hold for humans

[2] The obvious interpretation I take for that paragraph is that one of the following must be true

For clarity, can you confirm that you don't think any of the following:

- Humans have been intelligently designed

- Humans do not have the advanced cognitive/agent-y skills you refer to

- Humans exhibit unbounded consequentialist goal-driven behavior

None of these seem like views I'd expect you to have, so my model has to be broken somewhere

What pressure is supposed to push a homeostatic agent with multiple drives to elevate a specific "expected future quantity of some arbitrary resource" drive above all of its other drives

That was never the argument. A paperclip-maximizer/wrapper-mind's utility function doesn't need to be simple/singular. It can be a complete mess, the way human happiness/prosperity/eudaimonia is a mess. The point is that it would still pursue it hard, so hard that everything not in it will end up as collateral damage.

Humans exhibit unbounded consequentialist goal-driven behavior

I think humans very much do exhibit that behavior, yes? Towards power/money/security, at the very least. And inasmuch as humans fail to exhibit this behavior, they fail to act as powerful agents and end up accomplishing little.

I think the disconnect is that you might be imagining unbounded consequentialist agents as some alien systems that are literally psychotically obsessed with maximizing something as conceptually simple as paperclips, as opposed to a human pouring their everything into becoming a multibillionaire/amassing dictatorial power/winning a war?

Is this still true if the unbounded consequentialist agents in question have limited predictive power, and each one has advantages in predicting the things that are salient to it?

Yes, see humans.

Is the argument that firms run by homeostatic agents will outcompete firms run by consequentialist agents because homeostatic agents can more reliably follow long-term contracts?

I would phrase it as "the conditions under which homeostatic agents will renege on long-term contracts are more predictable than those under which consequentialist agents will do so". Taking into account the actions the counterparties would take to reduce the chance of such contract-breaking, though, yes.

Cool, I want to know also whether you think you're currently (eg in day to day life) trading with consequentialist or homeostatic agents.

Homeostatic ones exclusively. I think the number of agents in the world as it exists today that behave as long-horizon consequentialists of the sort Eliezer and company seem to envision is either zero or very close to zero. FWIW I expect that most people in that camp would agree that no true consequentialist agents exist in the world as it currently is, but would disagree with my "and I expect that to remain true" assessment.

Edit: on reflection some corporations probably do behave more like unbounded infinite-horizon consequentialists in the sense that they have drives to acquire resources where acquiring those resources doesn't reduce the intensity of the drive. This leads to behavior that in many cases would be the same behavior as an agent that was actually trying to maximize its future resources through any available means. And I have ever bought Chiquita bananas, so maybe not homeostatic agents exclusively.

FWIW I expect that most people in that camp would agree that no true consequentialist agents exist in the world as it currently is

I think this is false, eg John Wentworth often gives Ben Pace as a prototypical example of a consequentialist agent. [EDIT]: Also Eliezer talks about consequentialism being "ubiquitous".

Maybe different definitions are being used. Can you list some people or institutions you trade with that come to mind and that you don't think have long-term goals?

Again, homeostatic agents exhibit goal-directed behavior. "Unbounded consequentialist" was a poor choice of term to use for this on my part. Digging through the LW archives uncovered Nostalgebraist's post Why Assume AGIs Will Optimize For Fixed Goals, which coins the term "wrapper-mind".

When I read posts about AI alignment on LW / AF / Arbital, I almost always find a particular bundle of assumptions taken for granted:

- An AGI has a single terminal goal[1].

- The goal is a fixed part of the AI's structure. The internal dynamics of the AI, if left to their own devices, will never modify the goal.

- The "outermost loop" of the AI's internal dynamics is an optimization process aimed at the goal, or at least the AI behaves just as though this were true.

- This "outermost loop" or "fixed-terminal-goal-directed wrapper" chooses which of the AI's specific capabilities to deploy at any given time, and how to deploy it[2].

- The AI's capabilities will themselves involve optimization for sub-goals that are not the same as the goal, and they will optimize for them very powerfully (hence "capabilities"). But it is "not enough" that the AI merely be good at optimization-for-subgoals: it will also have a fixed-terminal-goal-directed wrapper.

In terms of which agents I trade with which do not have the wrapper structure, I will go from largest to smallest in terms of expenses

- My country: I pay taxes to it. In return, I get a stable place to live with lots of services and opportunities. I don't expect that I get these things because my country is trying to directly optimize for my well-being, or directly trying to optimize for any other specific unbounded goal. My country is a FPTP democracy, so the leaders do have drives to make sure that at least half of voters vote for them over the opposition - but once that "half" is satisfied, they don't have a drive to get approval as high as possible no matter what, or to maximize the time their party is in power, or anything like that.

- My landlord: He is renting the place to me because he wants money, and he wants money because it can be exchanged for goods and services, which can satisfy his drives for things like food and social status. I expect that if all of his money-satisfiable drives were satisfied, he would not seek to make money by renting the house out. I likewise don't expect that there is any fixed terminal goal I could ascribe to him that would lead me to predict his behavior better than "he's a guy with the standard set of human drives, and will seek to satisfy those drives".

- My bank: ... you get the idea

Publicly traded companies do sort of have the wrapper structure from a legal perspective, but in terms of actual behavior they are usually (with notable exceptions) not asking "how do we maximize market cap" and then making explicit subgoals and subsubgoals with only that in mind.

Yeah, seems reasonable. You link the Enron scandal; on your view, do all unbounded consequentialists die in such a scandal or similar?

on reflection some corporations probably do behave more like unbounded infinite-horizon consequentialists in the sense that they have drives to acquire resources where acquiring those resources doesn't reduce the intensity of the drive. This leads to behavior that in many cases would be the same behavior as an agent that was actually trying to maximize its future resources through any available means. And I have ever bought Chiquita bananas, so maybe not homeostatic agents exclusively.

On average, do those corporations have more or less money or power than the heuristic-based firms & individuals you trade with?

Regarding conceptualizing homeostatic agents, this seems related: Why modelling multi-objective homeostasis is essential for AI alignment (and how it helps with AI safety as well)

Homeostatic agents are easily exploitable by manipulating the things they are maintaining or the signals they are using to maintain them in ways that weren't accounted for in the original setup. This only works well when they are basically a tool you have full control over, but not when they are used in an adversarial context, e.g. to maintain law and order or to win a war.

As capabilities to engage in conflict increase, methods to resist losing to those capabilities have to get optimized harder. Instead of thinking "why would my coding assistant/tutor bot turn evil?", try asking "why would my bot that I'm using to screen my social circles against automated propaganda/spies sent out by scammers/terrorists/rogue states/etc turn evil?".

Though obviously we're not yet at the point where we have this kind of bot, and we might run into law of earlier failure beforehand.

I agree that a homeostatic agent in a sufficiently out-of-distribution environment will do poorly - as soon as one of the homeostatic feedback mechanisms starts pushing the wrong way, it's game over for that particular agent. That's not something unique to homeostatic agents, though. If a model-based maximizer has some gap between its model and the real world, that gap can be exploited by another agent for its own gain, and that's game over for the maximizer.

This only works well when they are basically a tool you have full control over, but not when they are used in an adversarial context, e.g. to maintain law and order or to win a war.

Sorry, I'm having some trouble parsing this sentence - does "they" in this context refer to homeostatic agents? If so, I don't think they make particularly great tools even in a non-adversarial context. I think they make pretty decent allies and trade partners though, and certainly better allies and trade partners than consequentialist maximizer agents of the same level of sophistication do (and I also think consequentialist maximizer agents make pretty terrible tools - pithily, it's not called the "Principal-Agent Solution"). And I expect "others are willing to ally/trade with me" to be a substantial advantage.

As capabilities to engage in conflict increase, methods to resist losing to those capabilities have to get optimized harder. Instead of thinking "why would my coding assistant/tutor bot turn evil?", try asking "why would my bot that I'm using to screen my social circles against automated propaganda/spies sent out by scammers/terrorists/rogue states/etc turn evil?".

Can you expand on "turn evil"? And also what I was trying to accomplish by making my comms-screening bot into a self-directed goal-oriented agent in this scenario?

That's not something unique to homeostatic agents, though. If a model-based maximizer has some gap between its model and the real world, that gap can be exploited by another agent for its own gain, and that's game over for the maximizer.

I don't think of my argument as model-based vs heuristic-reactive, I mean it as unbounded vs bounded. Like you could imagine making a giant stack of heuristics that makes it de-facto act like an unbounded consequentialist, and you'd have a similar problem. Model-based agents only become relevant because they seem like an easier way of making unbounded optimizers.

If so, I don't think they make particularly great tools even in a non-adversarial context. I think they make pretty decent allies and trade partners though, and certainly better allies and trade partners than consequentialist maximizer agents of the same level of sophistication do (and I also think consequentialist maximizer agents make pretty terrible tools - pithily, it's not called the "Principal-Agent Solution"). And I expect "others are willing to ally/trade with me" to be a substantial advantage.

You can think of LLMs as a homeostatic agent where prompts generate unsatisfied drives. Behind the scenes, there's also a lot of homeostatic stuff going on to manage compute load, power, etc.

Homeostatic AIs are not going to be trading partners because it is preferable to run them in a mode similar to LLMs instead of similar to independent agents.

Can you expand on "turn evil"? And also what I was trying to accomplish by making my comms-screening bot into a self-directed goal-oriented agent in this scenario?

Let's say a think tank is trying to use AI to infiltrate your social circle in order to extract votes. They might be sending out bots to befriend your friends to gossip with them and send them propaganda. You might want an agent to automatically do research on your behalf to evaluate factual claims about the world so you can recognize propaganda, to map out the org chart of the think tank to better track their infiltration, and to warn your friends against it.

However, precisely specifying what the AI should do is difficult for standard alignment reasons. If you go too far, you'll probably just turn into a cult member, paranoid about outsiders. Or, if you are aggressive enough about it (say if we're talking a government military agency instead of your personal bot for your personal social circle), you could imagine getting rid of all the adversaries, but at the cost of creating a totalitarian society.

(Realistically, the law of earlier failure is plausibly going to kick in here: partly because aligning the AI to do this is so difficult, you're not going to do it. But this means you are going to turn into a zombie following the whims of whatever organizations are concentrating on manipulating you. And these organizations are going to have the same problem.)

Unbounded consequentialist maximizers are easily exploitable by manipulating the things they are optimizing for or the signals/things they are using to maximize them in ways that weren't accounted for in the original setup.

That would be ones that are bounded so as to exclude taking your manipulation methods into account, not ones that are truly unbounded.

I interpreted "unbounded" as "aiming to maximize expected value of whatever", not "unbounded in the sense of bounded rationality".

The defining difference was whether they have contextually activating behaviors to satisfy a set of drives, on the basis that this makes it trivial to out-think their interests. But this ability to out-think them also seems intrinsically linked to them being adversarially non-robust, because you can enumerate their weaknesses. You're right that one could imagine an intermediate case where they are sufficiently far-sighted that you might accidentally trigger conflict with them but not sufficiently far-sighted for them to win the conflicts, but that doesn't mean one could make something adversarially robust under the constraint of it being contextually activated and predictable.

Mimicking homeostatic agents is not difficult if there are some around. They don't need to constantly decide whether to break character, only when there's a rare opportunity to do so.

If you initialize a sufficiently large pile of linear algebra and stir it until it shows homeostatic behavior, I'd expect it to grow many circuits of both types, and any internal voting on decisions that only matter through their long-term effects will be decided by those parts that care about the long term.

Where does the gradient which chisels in the "care about the long term X over satisfying the homeostatic drives" behavior come from, if not from cases where caring about the long term X previously resulted in attributable reward? If it's only relevant in rare cases, I expect the gradient to be pretty weak and correspondingly I don't expect the behavior that gradient chisels in to be very sophisticated.

This is kinda related: 'Theories of Values' and 'Theories of Agents': confusions, musings and desiderata

i think the logic goes: if we assume many diverse autonomous agents are created, which will survive the most? And insofar as agents have goals, what will be the goals of the agents which survive the most?

i can't imagine a world where the agents that survive the most aren't ultimately those which are fundamentally trying to.

insofar as human developers are united and maintain power over which ai agents exist, maybe we can hope for homeostatic agents to be the primary kind. but insofar as human developers are competitive with each other and ai agents gain increasing power (eg for self modification), i think we have to defer to evolutionary logic in making predictions

I mean I also imagine that the agents which survive the best are the ones that are trying to survive. I don't understand why we'd expect agents that are trying to survive and also accomplish some separate arbitrary infinite-horizon goal to outperform those that are just trying to maintain the conditions necessary for their survival without additional baggage.

To be clear, my position is not "homeostatic agents make good tools and so we should invest efforts in creating them". My position is "it's likely that homeostatic agents have significant competitive advantages against unbounded-horizon consequentialist ones, so I expect the future to be full of them, and expect quite a bit of value in figuring out how to make the best of that".

Ah ok. I was responding to your post's initial prompt: "I still don't really intuitively grok why I should expect agents to become better approximated by "single-minded pursuit of a top-level goal" as they gain more capabilities." (The reason to expect this is that "single-minded pursuit of a top-level goal," if that goal is survival, could afford evolutionary advantages.)

But I agree entirely that it'd be valuable for us to invest in creating homeostatic agents. Further, I think calling into doubt western/capitalist/individualist notions like "single-minded pursuit of a top-level goal" is generally important if we have a chance of building AI systems which are sensitive and don't compete with people.

I don't think talking about "timelines" is useful anymore without specifying what the timeline is until (in more detail than "AGI" or "transformative AI"). It's not like there's a specific time in the future when a "game over" screen shows with our score. And for the "the last time that humans can meaningfully impact the course of the future" definition, that too seems to depend on the question of how: the answer is already in the past for "prevent the proliferation of AI smart enough to understand and predict human language", but significantly in the future for "prevent end-to-end automation of the production of computing infrastructure from raw inputs".

I very much agree that talking about time to AGI or TAI is causing a lot of confusion because people don't share a common definition of those terms. I asked What's a better term now that "AGI" is too vague?, arguing that the original use of AGI was very much the right term, but it's been watered down from fully general to fairly general, making the definition utterly vague and perhaps worse-than-useless.

I didn't really get any great suggestions for better terminology, including my own. Thinking about it since then, I wonder if the best term (when there's not space to carefully define it) is artificial superintelligence, ASI. That has the intuitive sense of "something that outclasses us". The alignment community has long been using it for something well past AGI, to the nearly-omniscient level, but it technically just means smarter than a human - which is something that intuition says we should be very worried about.

There are arguments that AI doesn't need to be smarter than human to worry about it, but I personally worry most about "real" AGI, as defined in that linked post and I think in Yudkowsky's original usage: AI that can think about and learn about anything.

You could also say that ASI already exists, because AI is narrowly superhuman, but superintelligence does intuitively suggest smarter than human in every way.

My runners-up were parahuman AI and superhuman entities.

I don't think it's an issue of pure terminology. Rather, I expect the issue is expecting to have a single discrete point in time at which some specific AI is better than every human at every useful task. Possibly there will ever be such a point in time, but I don't see any reason to expect "AI is better than all humans at developing new EUV lithography techniques", "AI is better than all humans at equipment repair in the field", and "AI is better than all humans at proving mathematical theorems" to happen at similar times.

Put another way, is an instance of an LLM that has an affordance for "fine-tune itself on a given dataset" an ASI? Going by your rubric:

- Can think about any topic, including topics outside of their training set: Yep, though it's probably not very good at it

- Can do self-directed, online learning: Yep, though this may cause it to perform worse on other tasks if it does too much of it

- Alignment may shift as knowledge and beliefs shift w/ learning: To the extent that "alignment" is a meaningful thing to talk about with regards to only a model rather than a model plus its environment, yep

- Their own beliefs and goals: Yes, at least for definitions of "beliefs" and "goals" such that humans have beliefs and goals

- Alignment must be reflexively stable: ¯_(ツ)_/¯ seems likely that some possible configuration is relatively stable

- Alignment must be sufficient for contextual awareness and potential self-improvement: ¯_(ツ)_/¯ even modern LLM chat interfaces like Claude are pretty contextually aware these days

- Actions: Yep, LLMs can already perform actions if you give them affordances to do so (e.g. tools)

- Agency is implied or trivial to add: ¯_(ツ)_/¯, depends what you mean by "agency" but in the sense of "can break down large goals into subgoals somewhat reliably" I'd say yes

Still, I don't think e.g. Claude Opus is "an ASI" in the sense that people who talk about timelines mean it, and I don't think this is only because it doesn't have any affordances for self-directed online learning.

Olli Järviniemi made something like this point:

Rather, I expect the issue is expecting to have a single discrete point in time at which some specific AI is better than every human at every useful task. Possibly there will ever be such a point in time, but I don't see any reason to expect "AI is better than all humans at developing new EUV lithography techniques", "AI is better than all humans at equipment repair in the field", and "AI is better than all humans at proving mathematical theorems" to happen at similar times.

in the post Near-mode thinking on AI:

https://www.lesswrong.com/posts/ASLHfy92vCwduvBRZ/near-mode-thinking-on-ai

In particular, here are the most relevant quotes on this subject:

"But for the more important insight: The history of AI is littered with the skulls of people who claimed that some task is AI-complete, when in retrospect this has been obviously false. And while I would have definitely denied that getting IMO gold would be AI-complete, I was surprised by the narrowness of the system DeepMind used."

"I think I was too much in the far-mode headspace of one needing Real Intelligence - namely, a foundation model stronger than current ones - to do well on the IMO, rather than thinking near-mode "okay, imagine DeepMind took a stab at the IMO; what kind of methods would they use, and how well would those work?"

"I also updated away from a "some tasks are AI-complete" type of view, towards "often the first system to do X will not be the first systems to do Y".

I've come to realize that being "superhuman" at something is often much more mundane than I've thought. (Maybe focusing on full superintelligence - something better than humanity on practically any task of interest - has thrown me off.)"

Like:

"In chess, you can just look a bit more ahead, be a bit better at weighting factors, make a bit sharper tradeoffs, make just a bit fewer errors. If I showed you a video of a robot that was superhuman at juggling, it probably wouldn't look all that impressive to you (or me, despite being a juggler). It would just be a robot juggling a couple balls more than a human can, throwing a bit higher, moving a bit faster, with just a bit more accuracy. The first language models to be superhuman at persuasion won't rely on any wildly incomprehensible pathways that break the human user (c.f. List of Lethalities, items 18 and 20). They just choose their words a bit more carefully, leverage a bit more information about the user in a bit more useful way, have a bit more persuasive writing style, being a bit more subtle in their ways. (Indeed, already GPT-4 is better than your average study participant in persuasiveness.) You don't need any fundamental breakthroughs in AI to reach superhuman programming skills. Language models just know a lot more stuff, are a lot faster and cheaper, are a lot more consistent, make fewer simple bugs, can keep track of more information at once. (Indeed, current best models are already useful for programming.) (Maybe these systems are subhuman or merely human-level in some aspects, but they can compensate for that by being a lot better on other dimensions.)"

"As a consequence, I now think that the first transformatively useful AIs could look behaviorally quite mundane."

I agree with all of that. My definition isn't crisp enough; doing crappy general thinking and learning isn't good enough. It probably needs to be roughly human level or above at those things before it's takeover-capable and therefore really dangerous.

I didn't intend to add the alignment definitions to the definition of AGI.

I'd argue that LLMs actually can't think about anything outside of their training set, and it's just that everything humans have thought about so far is inside their training set. But I don't think that discussion matters here.

I agree that Claude isn't an ASI by that definition. Even if it did have longer-term goal-directed agency and self-directed online learning added, it would still be far subhuman in some important areas, arguably in general reasoning that's critical for complex novel tasks like taking over the world or the economy. ASI needs to mean superhuman in every important way. And of course "important" is vague.

I guess a more reasonable goal is working toward the minimum description length that gets across all of those considerations. And a big problem is that timeline predictions to important/dangerous AI are mixed in with theories about what will make it important/dangerous. One terminological move I've been trying is the word "competent" to invoke intuitions about getting useful (and therefore potentially dangerous) stuff done.

I think the unstated assumption (when timeline-predictors don't otherwise specify) is "the time when there are no significant deniers", or "the time when things are so clearly different that nobody (at least nobody the predictor respects) is using the past as any indication of the future on any relevant dimension".

Some people may CLAIM it's about the point of no return, after which changes can't be undone or slowed in order to maintain anything near status quo or historical expectations. This is pretty difficult to work with, since it could happen DECADES before it's obvious to most people.

That said, I'm not sure talking about timelines was EVER all that useful or concrete. There are too many unknowns, and too many anti-inductive elements (where humans or other agents change their behavior based on others' decisions and their predictions of decisions, in a chaotic recursion). "short", "long", or "never" are good at giving a sense of someone's thinking, but anything more granular is delusional.

[Epistemic status: 75% endorsed]

Those who, upon seeing a situation, look for which policies would directly incentivize the outcomes they like should spend more mental effort solving for the equilibrium.

Those who, upon seeing a situation, naturally solve for the equilibrium should spend more mental effort checking if there is indeed only one "the" equilibrium, and if there are multiple possible equilibria, solving for which factors determine which of the several possible equilibria the system ends up settling on.

In the startup world, conventional wisdom is that, if your company is default-dead (i.e. on the current growth trajectory, you will run out of money before you break even), you should pursue high-variance strategies. In one extreme example, "in the early days of FedEx, [founder of FedEx] Smith had to go to great lengths to keep the company afloat. In one instance, after a crucial business loan was denied, he took the company's last $5,000 to Las Vegas and won $27,000 gambling on blackjack to cover the company's $24,000 fuel bill. It kept FedEx alive for one more week."

By contrast, if your company is default-alive (profitable or on-track to become profitable long before you run out of money in the bank), you should avoid making high-variance bets for a substantial fraction of the value of the company, even if those high-variance bets are +EV.
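A toy way to see why the advice flips: suppose (purely as an assumption for illustration) that a company's outcome is normally distributed and it survives if the outcome clears some threshold. The numbers and the function name below are made up; the point is only the direction of the effect.

```python
from statistics import NormalDist


def survival_probability(expected: float, needed: float, spread: float) -> float:
    """P(outcome >= needed) when outcome ~ Normal(expected, spread)."""
    return 1.0 - NormalDist(mu=expected, sigma=spread).cdf(needed)


# Default-dead: expected outcome (8) is below what is needed (12).
dead_low_variance = survival_probability(expected=8, needed=12, spread=1)
dead_high_variance = survival_probability(expected=8, needed=12, spread=6)

# Default-alive: expected outcome (16) is above what is needed (12).
alive_low_variance = survival_probability(expected=16, needed=12, spread=1)
alive_high_variance = survival_probability(expected=16, needed=12, spread=6)

# A +EV gamble: the expected outcome rises to 17, but so does the spread.
alive_plus_ev_gamble = survival_probability(expected=17, needed=12, spread=6)

print(f"default-dead : low var {dead_low_variance:.5f}, high var {dead_high_variance:.5f}")
print(f"default-alive: low var {alive_low_variance:.5f}, high var {alive_high_variance:.5f}")
print(f"default-alive, +EV high-variance bet: {alive_plus_ev_gamble:.5f}")
```

Under these assumptions, more variance helps the default-dead company and hurts the default-alive one, and the default-alive company is worse off even when the high-variance bet raises its expected outcome.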

Obvious follow-up question: in the absence of transformative AI, is humanity default-alive or default-dead?

in the absence of transformative AI, is humanity default-alive or default-dead?

I suspect humanity is default-alive, but individual humans (the ones who actually make decisions) are default-dead[1].

Yes. And that means most people will support taking large risks on achieving aligned AGI and immortality, since most people aren't utilitarian or longtermist.

in the absence of transformative AI, is humanity default-alive or default-dead

Almost certainly alive for several more decades if we are talking literal extinction rather than civilization-wrecking catastrophe. Therefore it makes sense to work towards global coordination to pause AI for at least this long.

if your company is default-dead, you should pursue high-variance strategies

There are rumors OpenAI (which has no moat) is spending much more than it's making this year despite good revenue, another datapoint on there being $1 billion training runs currently in progress.

I'm curious what sort of policies you're thinking of which would allow for a pause which plausibly buys us decades, rather than high-months-to-low-years. My imagination is filling in "totalitarian surveillance state which is effective at banning general-purpose computing worldwide, and which prioritizes the maintenance of its own control over all other concerns". But I'm guessing that's not what you have in mind.

No more totalitarian than control over manufacturing of nuclear weapons. The issue is that currently there is no buy-in on a similar level, and any effective policy is too costly to accept for people who don't expect existential risk. This might change once there are long-horizon task capable AIs that can do many jobs, if they are reined in before there is runaway AGI that can do research on its own. And establishing control over compute is more feasible if it turns out that taking anything approaching even a tiny further step in the direction of AGI takes 1e27 FLOPs.

Generally available computing hardware doesn't need to keep getting better over time; for many years now, PCs have been beyond what is sufficient for most mundane purposes. What remains is keeping an eye on GPUs for the remaining highly restricted AI research and specialized applications like medical research. To prevent their hidden stockpiling, all GPUs could be required to need regular unlocking OTPs, issued with asymmetric encryption using multiple secret keys kept separately, so that all of the keys would need to be stolen simultaneously to keep the GPUs working (in the case where the GPUs go missing or a country that hosts the datacenter goes rogue, and official unlocking OTPs stop being issued). Hidden manufacturing of GPUs seems much less feasible than hidden or systematically subverted datacenters.

a totalitarian surveillance state which is effective at banning general-purpose computing worldwide, and which prioritizes the maintenance of its own control over all other concerns

I much prefer that to everyone's being killed by AI. Don't you?

Great example. One factor that's relevant to AI strategy is that you need good coordination to increase variance. If multiple people at the company make independent gambles without properly accounting for every other gamble happening, this would average the gambles and reduce the overall variance.

E.g. if coordination between labs is terrible, they might each separately try superhuman AI boxing+some alignment hacks, with techniques varying between groups.
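The averaging claim can be checked with a small exact calculation. This sketch assumes (my simplification, not anything from the thread) that each of n people stakes an equal 1/n share of the company on a fair ±1 coin flip; `variance_of_total` is a hypothetical helper name.

```python
def variance_of_total(n_gamblers: int, coordinated: bool) -> float:
    """Variance of the company-wide outcome when n people each stake 1/n.

    Each stake pays +stake or -stake with probability 1/2 (a fair coin),
    so a single stake has variance stake**2.
    """
    stake = 1.0 / n_gamblers
    if coordinated:
        # Everyone bets on the same flip: the total is +1 or -1.
        return (n_gamblers * stake) ** 2
    # Independent flips: variances add, giving n * (1/n)**2 = 1/n.
    return n_gamblers * stake ** 2


for n in (1, 10, 100):
    print(n, variance_of_total(n, coordinated=True), variance_of_total(n, coordinated=False))
```

With independent flips the overall variance shrinks like 1/n, while a single coordinated gamble keeps it at 1 no matter how many people are involved.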

It seems like lack of coordination for AGI strategy increases the variance? That is, without coordination somebody will quickly launch an attempt at value aligned AGI; if they get it, we win. If they don't, we probably lose. With coordination, we might all be able to go slower to lower the risk and therefore variance of the outcome.

I guess it depends on some details, but I don't understand your last sentence. I'm talking about coordinating on one gamble.

Analogous to the OP, I'm thinking of AI companies making a bad bet (like 90% chance of loss of control, 10% chance gain the tools to do a pivotal act in the next year). Losing the bet ends the betting, and winning allows everyone to keep playing. Then if many of them make similar independent gambles simultaneously, it becomes almost certain that one of them loses control.
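As a worked version of the arithmetic, taking the 90% figure above at face value and assuming the gambles are fully independent (the function name is just for illustration):

```python
def p_any_loss(p_loss_each: float, n_labs: int) -> float:
    """Probability that at least one of n independent gambles is lost."""
    return 1.0 - (1.0 - p_loss_each) ** n_labs


for n in (1, 2, 3, 5):
    print(f"{n} labs: P(at least one loses control) = {p_any_loss(0.9, n):.5f}")
```

The chance that everyone wins is 0.1 raised to the number of labs, so it falls by a factor of ten with each additional independent gamble.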

In the absence of transformative AI, humanity survives many millennia with p = .9 IMO, and if humanity does not survive that long, the primary cause is unlikely to be climate change or nuclear war although either might turn out to be a contributor.

(I'm a little leery of your "default-alive" choice of words.)

Scaffolded LLMs are pretty good at not just writing code, but also at refactoring it. So that means that all the tech debt in the world will disappear soon, right?

I predict "no" because

- As writing code gets cheaper, the relative cost of making sure that a refactor didn't break anything important goes up

- The number of parallel threads of software development will also go up, with multiple high-value projects making mutually-incompatible assumptions (and interoperability between these projects accomplished by just piling on more code).

As such, I predict an explosion of software complexity and jank in the near future.

In software development / IT contexts, "security by obscurity" (that is, having the security of your platform rely on the architecture of that platform remaining secret) is considered a terrible idea. This is a result of a lot of people trying that approach, and it ending badly when they do.

But the thing that is a bad idea is quite specific - it is "having a system which relies on its implementation details remaining secret". It is not an injunction against defense in depth, and having the exact heuristics you use for fraud or data exfiltration detection remain secret is generally considered good practice.

There is probably more to be said about why the one is considered terrible practice and the other is considered good practice.

There are competing theories here. Including secrecy of architecture and details in the security stack is pretty common, but so is publishing (or semi-publishing: making it company confidential, but talked about widely enough that it's not hard to find if someone wants to) mechanisms to get feedback and improvements. The latter also makes the entire value chain safer, as other organizations can learn from your methods.

Civilization has had many centuries to adapt to the specific strengths and weaknesses that people have. Our institutions are tuned to take advantage of those strengths, and to cover for those weaknesses. The fact that we exist in a technologically advanced society says that there is some way to make humans fit together to form societies that accumulate knowledge, tooling, and expertise over time.

The borderline-general AI models we have now do not have exactly the same patterns of strength and weakness as humans. One question that is frequently asked is approximately

When will AI capabilities reach or exceed all human capabilities that are load bearing in human society?

A related line of questions, though, is

- When will AI capabilities reach a threshold where a number of agents can form a larger group that accumulates knowledge, tooling, and expertise over time?

- Will their roles in such a group look similar to the roles that people have in human civilization?

- Will the individual agents (if "agent" is even the right model to use) within that group have more control over the trajectory of the group as a whole than individual people have over the trajectory of human civilization?

In particular the third question seems pretty important.

A lot of AI x-risk discussion is focused on worlds where iterative design fails. This makes sense, as "iterative design stops working" does in fact make problems much much harder to solve.

However, I think that even in the worlds where iterative design fails for safely creating an entire AGI, the worlds where we succeed will be ones in which we were able to do iterative design on the components of a safe AGI, and also able to do iterative design on the boundaries between subsystems, with the dangerous parts mocked out.

I am not optimistic about approaches that look like "do a bunch of math and philosophy to try to become less confused without interacting with the real world, and only then try to interact with the real world using your newfound knowledge".

For the most part, I don't think it's a problem if people work on the math / philosophy approaches. However, to the extent that people want to stop people from doing empirical safety research on ML systems as they actually are in practice, I think that's trading off a very marginal increase in the odds of success in worlds where iterative design could never work against a quite substantial decrease in the odds of success in worlds where iterative design could work. I am particularly thinking of things like interpretability / RLHF / constitutional AI as things which help a lot in worlds where iterative design could succeed.

A lot of AI x-risk discussion is focused on worlds where iterative design fails. This makes sense, as "iterative design stops working" does in fact make problems much much harder to solve.

Maybe on LW, this seems way less true for lab alignment teams, open phil, and safety researchers in general.

Also, I think it's worth noting the distinction between two different cases:

- Iterative design against the problems you actually see in production fails.

- Iterative design against carefully constructed test beds fails to result in safety in practice. (E.g. iterating against AI control test beds, model organisms, sandwiching setups, and other testbeds)

See also this quote from Paul from here:

Eliezer often equivocates between “you have to get alignment right on the first ‘critical’ try” and “you can’t learn anything about alignment from experimentation and failures before the critical try.” This distinction is very important, and I agree with the former but disagree with the latter. Solving a scientific problem without being able to learn from experiments and failures is incredibly hard. But we will be able to learn a lot about alignment from experiments and trial and error; I think we can get a lot of feedback about what works and deploy more traditional R&D methodology. We have toy models of alignment failures, we have standards for interpretability that we can’t yet meet, and we have theoretical questions we can’t yet answer. The difference is that reality doesn’t force us to solve the problem, or tell us clearly which analogies are the right ones, and so it’s possible for us to push ahead and build AGI without solving alignment. Overall this consideration seems like it makes the institutional problem vastly harder, but does not have such a large effect on the scientific problem.

The quote from Paul sounds about right to me, with the caveat that I think it's pretty likely that there won't be a single try that is "the critical try": something like this (also by Paul) seems pretty plausible to me, and it is cases like that that I particularly expect having existing but imperfect tooling for interpreting and steering ML models to be useful.

However, to the extent that people want to stop people from doing empirical safety research on ML systems as they actually are in practice

Does anyone want to stop this? I think some people just contest the usefulness of improving RLHF / RLAIF / constitutional AI as safety research and also think that it has capabilities/profit externalities. E.g. see discussion here.

(I personally think this research is probably net positive, but typically not very important to advance at current margins from an altruistic perspective.)

Does anyone want to stop [all empirical research on AI, including research on prosaic alignment approaches]?

Yes, there are a number of posts to that effect.

That said, "there exist such posts" is not really why I wrote this. The idea I really want to push back on is one that I have heard several times in IRL conversations, though I don't know if I've ever seen it online. It goes like

There are two cars in a race. One is alignment, and one is capabilities. If the capabilities car hits the finish line first, we all die, and if the alignment car hits the finish line first, everything is good forever. Currently the capabilities car is winning. Some things, like RLHF and mechanistic interpretability research, speed up both cars. Speeding up both cars brings us closer to death, so those types of research are bad and we should focus on the types of research that only help alignment, like agent foundations. Also we should ensure that nobody else can do AI capabilities research.

Maybe almost nobody holds that set of beliefs! I am noticing now that the articles on my list arguing that prosaic alignment strategies are harmful in expectation are by a pretty short list of authors.

So I keep seeing takes about how to tell if LLMs are "really exhibiting goal-directed behavior" like a human or whether they are instead "just predicting the next token". And, to me at least, this feels like a confused sort of question that misunderstands what humans are doing when they exhibit goal-directed behavior.

Concrete example. Let's say we notice that Jim has just pushed the turn signal lever on the side of his steering wheel. Why did Jim do this?

The goal-directed-behavior story is as follows:

- Jim pushed the turn signal lever because he wanted to alert surrounding drivers that he was moving right by one lane

- Jim wanted to alert drivers that he was moving one lane right because he wanted to move his car one lane to the right.

- Jim wanted to move his car one lane to the right in order to accomplish the goal of taking the next freeway offramp

- Jim wanted to take the next freeway offramp because that was part of the most efficient route from his home to his workplace

- Jim wanted to go to his workplace because his workplace pays him money

- Jim wants money because money can be exchanged for goods and services

- Jim wants goods and services because they get him things he terminally values like mates and food

But there's an alternative story:

- When in the context of "I am a middle-class adult", the thing to do is "have a job". Years ago, this context triggered Jim to perform the action "get a job", and now he's in the context of "having a job".

- When in the context of "having a job", "showing up for work" is the expected behavior.

- Earlier this morning, Jim had the context "it is a workday" and "I have a job", which triggered Jim to begin the sequence of actions associated with the behavior "commuting to work"

- Jim is currently approaching the exit for his work - with the context of "commuting to work", this means the expected behavior is "get in the exit lane", and now he's in the context "switching one lane to the right"

- In the context of "switching one lane to the right", one of the early actions is "turn on the right turn signal by pushing the turn signal lever". And that is what Jim is doing right now.

I think this latter framework captures some parts of human behavior that the goal-directed-behavior framework misses out on. For example, let's say the following happens

- Jim is going to see his good friend Bob on a Saturday morning

- Jim gets on the freeway - the same freeway, in fact, that he takes to work every weekday morning

- Jim gets into the exit lane for his work, even though Bob's house is still many exits away

- Jim finds himself pulling onto the street his workplace is on

- Jim mutters "whoops, autopilot" under his breath, pulls a u turn at the next light, and gets back on the freeway towards Bob's house

This sequence of actions is pretty nonsensical from a goal-directed-behavior perspective, but is perfectly sensible if Jim's behavior here is driven by contextual heuristics like "when it's morning and I'm next to my work's freeway offramp, I get off the freeway".
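The contrast can be sketched as two tiny policies. Everything here (the `HABITS` table, the context strings, the function names) is hypothetical and only meant to show the structural difference: the habit policy never looks at the destination.

```python
# Hypothetical context -> action table. Note the keys say nothing about
# where the driver is actually trying to go.
HABITS = {
    ("morning", "approaching work offramp"): "move to exit lane",
    ("morning", "in exit lane"): "take exit",
}


def habit_driver(time_of_day: str, location: str, destination: str) -> str:
    """Contextually-activated behavior: the destination is deliberately ignored."""
    return HABITS.get((time_of_day, location), "keep driving")


def planning_driver(time_of_day: str, location: str, destination: str) -> str:
    """Goal-directed behavior: the action is derived from the destination."""
    if destination == "work" and location == "approaching work offramp":
        return "move to exit lane"
    if destination == "work" and location == "in exit lane":
        return "take exit"
    return "keep driving"


saturday = ("morning", "approaching work offramp", "Bob's house")
print("habit   :", habit_driver(*saturday))
print("planning:", planning_driver(*saturday))
```

On a weekday commute the two policies are indistinguishable; they only come apart in the Saturday case, which is where the "whoops, autopilot" behavior shows up.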

Note that I'm not saying "humans never exhibit goal-directed behavior".

Instead, I'm saying that "take a goal, and come up with a plan to achieve that goal, and execute that plan" is, itself, just one of the many contextually-activated behaviors humans exhibit.

I see no particular reason that an LLM couldn't learn to figure out when it's in a context like "the current context appears to be in the execute-the-next-step-of-the-plan stage of such-and-such goal-directed-behavior task", and produce the appropriate output token for that context.

Is it possible to determine whether a feature (in the SAE sense of "a single direction in activation space") exists for a given set of changes in output logits?

Let's say I have a feature from a learned dictionary on some specific layer of some transformer-based LLM. I can run a whole bunch of inputs through the LLM, either adding that feature to the activations at that layer (in the manner of Golden Gate Claude) or ablating that direction from the outputs at that layer. That will have some impact on the output logits.

Now I have a collection of (input token sequence, output logit delta) pairs. Can I, from that set, find the feature direction which produces those approximate output logit deltas by gradient descent?
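Here is a toy version of that question, under a strong simplifying assumption that a real transformer does not satisfy: everything downstream of the intervention is a fixed linear map (the made-up `UNEMBED` matrix) scaled by an input-dependent gain. In that setting, recovering the direction by gradient descent is just least-squares regression. All names and numbers are illustrative.

```python
def matvec(matrix, vec):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def fit_direction(unembed, gains, deltas, steps=500, lr=0.05):
    """Gradient descent on mean_i || gain_i * unembed @ guess - delta_i ||^2."""
    dim = len(unembed[0])
    guess = [0.0] * dim
    for _ in range(steps):
        grad = [0.0] * dim
        for gain, delta in zip(gains, deltas):
            predicted = [gain * x for x in matvec(unembed, guess)]
            residual = [p - t for p, t in zip(predicted, delta)]
            for j in range(dim):
                # d/d(guess_j) of ||residual||^2 = 2 * gain * sum_k residual_k * unembed[k][j]
                grad[j] += 2.0 * gain * sum(r * row[j] for r, row in zip(residual, unembed))
        guess = [g - lr * dg / len(gains) for g, dg in zip(guess, grad)]
    return guess


# Made-up 5-token "vocabulary" over a 3-dimensional activation space.
UNEMBED = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [0, 1, 1]]
TRUE_DIRECTION = [0.6, -0.8, 0.0]
GAINS = [0.5, 1.0, 1.5, 2.0]  # stand-in for per-input sensitivity
DELTAS = [[g * x for x in matvec(UNEMBED, TRUE_DIRECTION)] for g in GAINS]

recovered = fit_direction(UNEMBED, GAINS, DELTAS)
print([round(x, 6) for x in recovered])
```

In an actual LLM the map from an activation edit to the logits is nonlinear and input-dependent, so whether anything like this recovers a meaningful direction there is exactly the open question.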

If yes, could the same method be used to determine which features in a learned dictionary trained on one LLM exist in a completely different LLM that uses the same tokenizer?

I imagine someone has already investigated this question, but I'm not sure what search terms to use to find it. The obvious search terms like "sparse autoencoder cross model" or "Cross-model feature alignment in transformers" don't turn up a ton, although they turn up the somewhat relevant paper Text-To-Concept (and Back) via Cross-Model Alignment.

Wait, I think I am overthinking this by a lot, and the thing I want is in the literature under terms like "classifier" and "linear regression".

Transformative AI will likely arrive before AI that implements the personhood interface. If someone's threshold for considering an AI to be "human level" is "can replace a human employee", pretty much any LLM will seem inadequate, no matter how advanced, because current LLMs do not have "skin in the game" that would let them sign off on things in a legally meaningful way, stake their reputation on some point, or ask other employees in the company to answer the questions they need answers to in order to do their work and expect that they'll get in trouble with their boss if they blow the AI off.

This is, of course, not a capabilities problem at all, just a terminology problem where "human-level" can be read to imply "human-like".

I've heard that an "agent" is that which "robustly optimizes" some metric in a wide variety of environments. I notice that I am confused about what the word "robustly" means in that context.

Does anyone have a concrete example of an existing system which is unambiguously an agent by that definition?

In this context, 'robustly' means that even with small changes to the system (such as moving the agent or the goal to a different location in a maze) the agent still achieves the goal. If you think of the system state as a location in a phase space, this could look like a large "basin of attraction" of initial states that all converge to the goal state.

If we take a marble and a bowl, and we place the marble at any point in the bowl, it will tend to roll towards the middle of the bowl. In this case "phase space" and "physical space" map very closely to each other, and the "basin of attraction" is quite literally a basin. Still, I don't think most people would consider the marble to be an "agent" that "robustly optimizes for the goal of being in the bottom of the bowl".
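To make the marble case concrete, here is a minimal sketch that treats the bowl as the surface height = x² + y² and the marble as plain gradient descent on that height (a deliberately crude stand-in for the physics; `roll_marble` is a made-up name).

```python
def roll_marble(x: float, y: float, steps: int = 200, step_size: float = 0.1):
    """Follow the downhill gradient of height = x**2 + y**2 from (x, y)."""
    for _ in range(steps):
        # The gradient of the height is (2x, 2y); step against it.
        x -= step_size * 2 * x
        y -= step_size * 2 * y
    return x, y


starts = [(1.0, 0.0), (-3.0, 2.0), (0.5, -4.0), (10.0, 10.0)]
for start in starts:
    end_x, end_y = roll_marble(*start)
    print(start, "->", (round(end_x, 9), round(end_y, 9)))
```

Every starting point ends up at the bottom, so the system has a large basin of attraction around the "goal state", which is the sense in which the marble satisfies the letter of the definition without seeming like an agent.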

However, while I've got a lot of concrete examples of things which are definitely not agents (like the above) or "maybe kinda agent-like but definitely not central" (e.g. a minmaxing tic-tac-toe program that finds the optimal move by exploring the full game tree, an E. coli bacterium which uses run-and-tumble motion to increase the fraction of the time it spends in favorable environments, or a person setting and then achieving career goals), I don't think I have a crisp central example of a thing that exists in the real world that is definitely an agent.

Has anyone trained a model to, given a prompt-response pair and an alternate response, generate an alternate prompt which is close to the original and causes the alternate response to be generated with high probability?

I ask this because

- It strikes me that many of the goals of interpretability research boil down to "figure out why models say the things they do, and under what circumstances they'd say different things instead". If we could reliably ask the model and get an intelligible and accurate response back, that would almost trivialize this sort of research.

- This task seems like it has almost ideal characteristics for training on - unlimited synthetic data, granular loss metric, easy for a human to see if the model is doing some weird reward hacky thing by spot checking outputs

A quick search found some vaguely adjacent research, but nothing I'd rate as a super close match.

- AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts (2020) Automatically creates prompts by searching for words that make language models produce specific outputs. Related to the response-guided prompt modification task, but mainly focused on extracting factual knowledge rather than generating prompts for custom responses.

- RLPrompt: Optimizing Discrete Text Prompts with Reinforcement Learning (2022) Uses reinforcement learning to find the best text prompts by rewarding the model when it produces desired outputs. Similar to the response-guided prompt modification task since it tries to find prompts that lead to specific outputs, but doesn't start with existing prompt-response pairs.

- GrIPS: Gradient-free, Edit-based Instruction Search for Prompting Large Language Models Makes simple edits to instructions to improve how well language models perform on tasks. Relevant because it changes prompts to get better results, but mainly focuses on improving existing instructions rather than creating new prompts for specific alternative responses.

- Large Language Models are Human-Level Prompt Engineers (2022) Uses language models themselves to generate and test many possible prompts to find the best ones for different tasks. Most similar to the response-guided prompt modification task as it creates new instructions to achieve better performance, though not specifically designed to match alternative responses.

If this research really doesn't exist I'd find that really surprising, since it's a pretty obvious thing to do and there are O(100,000) ML researchers in the world. And it is entirely possible that it does exist and I just failed to find it with a cursory lit review.

Anyone familiar with similar research / deep enough in the weeds to know that it doesn't exist?

I think I found a place where my intuitions about "clusters in thingspace" / "carving thingspace at the joints" / "adversarial robustness" may have been misleading me.

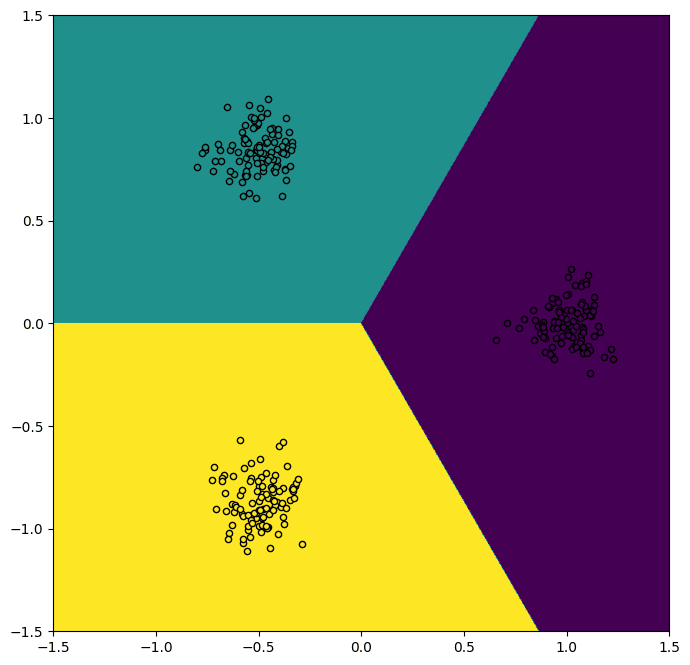

Historically, when I thought of "clusters in thing-space", my mental image was of a bunch of widely-spaced points in some high-dimensional space, with wide gulfs between the clusters. In my mental model, if we were to get a large enough sample size that the clusters approached one another, the thresholds which carve those clusters apart would be nice clean lines, like this.

In this model, an ML model trained on these clusters might fit to a set of boundaries which is not equally far from each cluster (after all, there is no bonus reduction in loss for more robust perfect classification). So in my mind the ground truth would be something like the above image, whereas what the non-robust model learned would be something more like the below:

But even if we observe clusters in thing-space, why should we expect the boundaries between them to be "nice"? It's entirely plausible to me that the actual ground truth is something more like this

That is the actual ground truth for the categorization problem of "which of the three complex roots will iteration of Newton's method converge on for each given starting point". And in terms of real-world problems, we see the recent and excellent paper The boundary of neural network trainability is fractal.
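For anyone who wants to poke at that kind of ground truth directly, the standard example with three complex roots and a fractal boundary is Newton's method applied to z³ = 1 (the "Newton fractal"). I'm assuming that is the polynomial in the picture; the grid size and tolerance below are arbitrary choices.

```python
import cmath

# The three complex cube roots of 1.
ROOTS = [cmath.exp(2j * cmath.pi * k / 3) for k in range(3)]


def newton_basin(z: complex, max_iter: int = 100, tol: float = 1e-9):
    """Index of the root of z**3 = 1 that Newton's method reaches from z, or None."""
    for _ in range(max_iter):
        if z == 0:
            return None  # the derivative 3*z**2 vanishes, so the step is undefined
        z = z - (z ** 3 - 1) / (3 * z ** 2)
        for index, root in enumerate(ROOTS):
            if abs(z - root) < tol:
                return index
    return None


def render(width: int = 31, height: int = 15, extent: float = 1.5):
    """Coarse text picture: each character is the basin label of one grid point."""
    rows = []
    for r in range(height):
        im = extent - 2 * extent * r / (height - 1)
        row = ""
        for c in range(width):
            re = -extent + 2 * extent * c / (width - 1)
            basin = newton_basin(complex(re, im))
            row += "?" if basin is None else "012"[basin]
        rows.append(row)
    return rows


print("\n".join(render()))
```

Far from the boundaries the labels form three clean sectors, but near the boundaries between sectors the labels interleave at every scale, so no finite set of "nice" lines separates the three classes.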