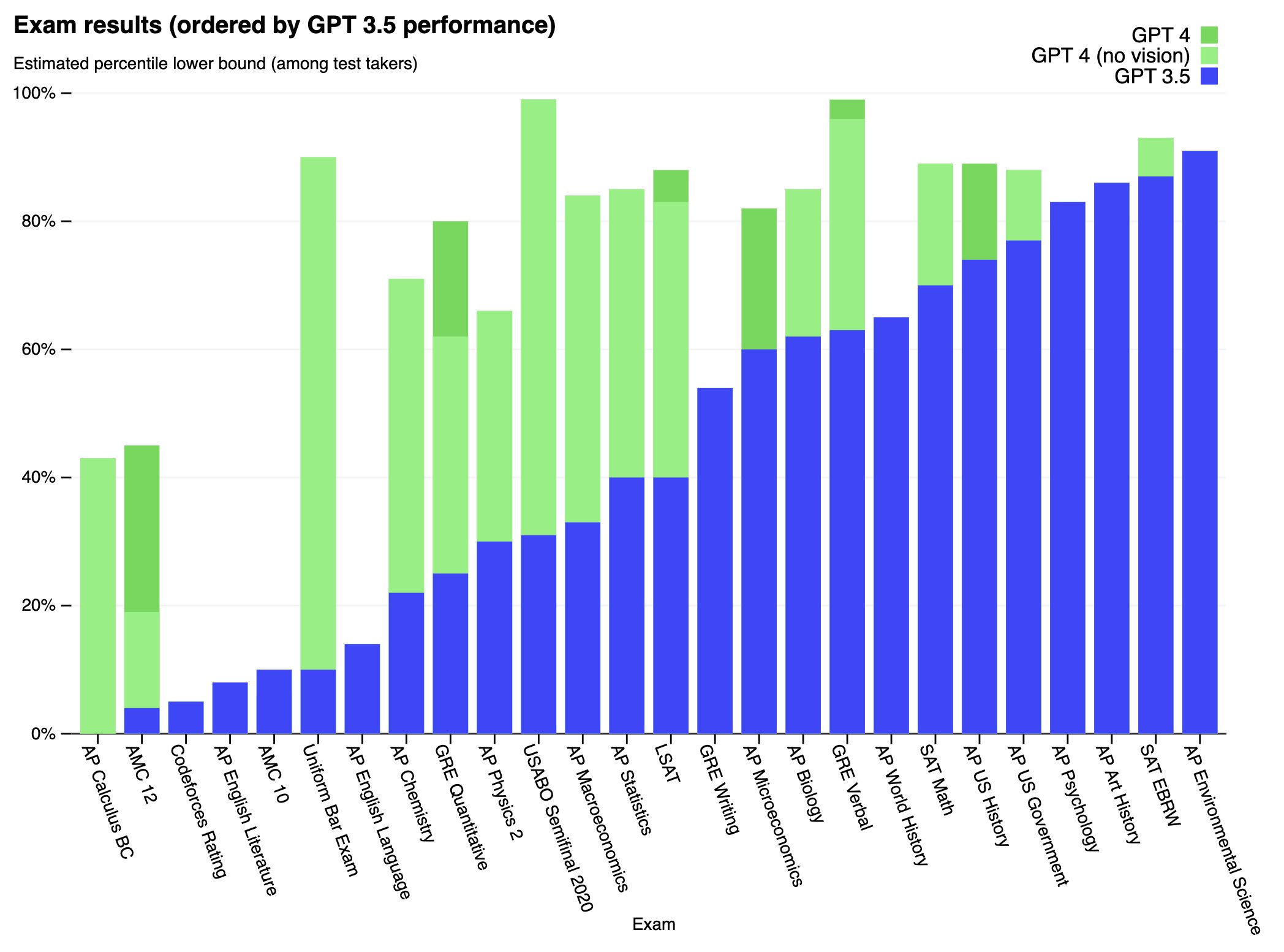

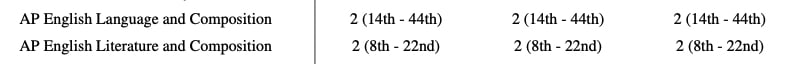

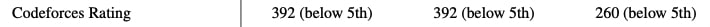

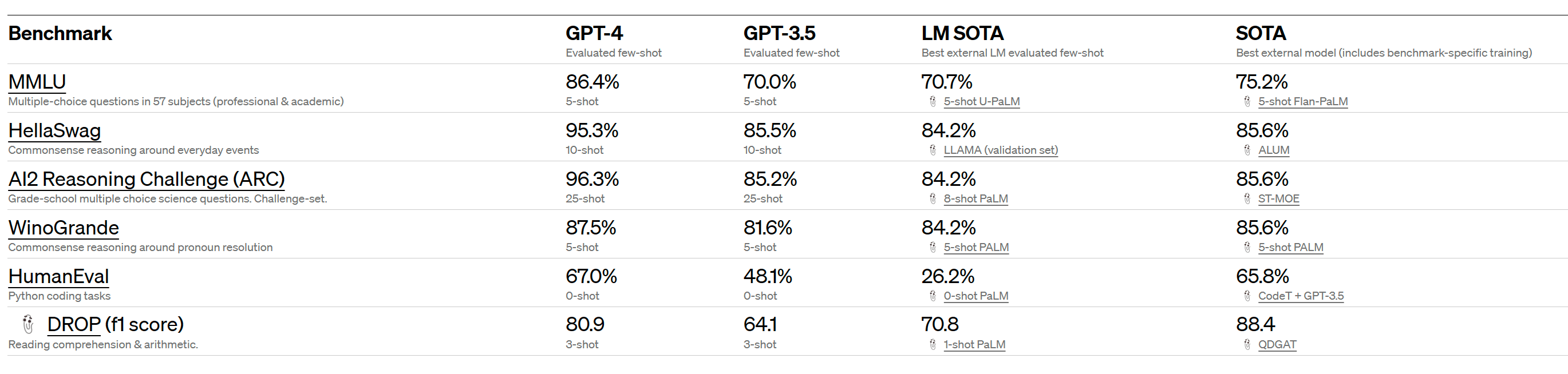

We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while worse than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

Full paper available here: https://cdn.openai.com/papers/gpt-4.pdf

Bumping someone else's comment on the Gwern GPT-4 linkpost that now seems deleted:

This does seem like quite a significant jump (among all the general capability jumps shown in the rest of the paper). The previous SOTA was only 75.2% for Flan-PaLM (5-shot, fine-tuned, CoT + SC).

And that previous SOTA was for a model fine-tuned on MMLU; the few-shot score actually jumped from 70.7% to 86.4%!!