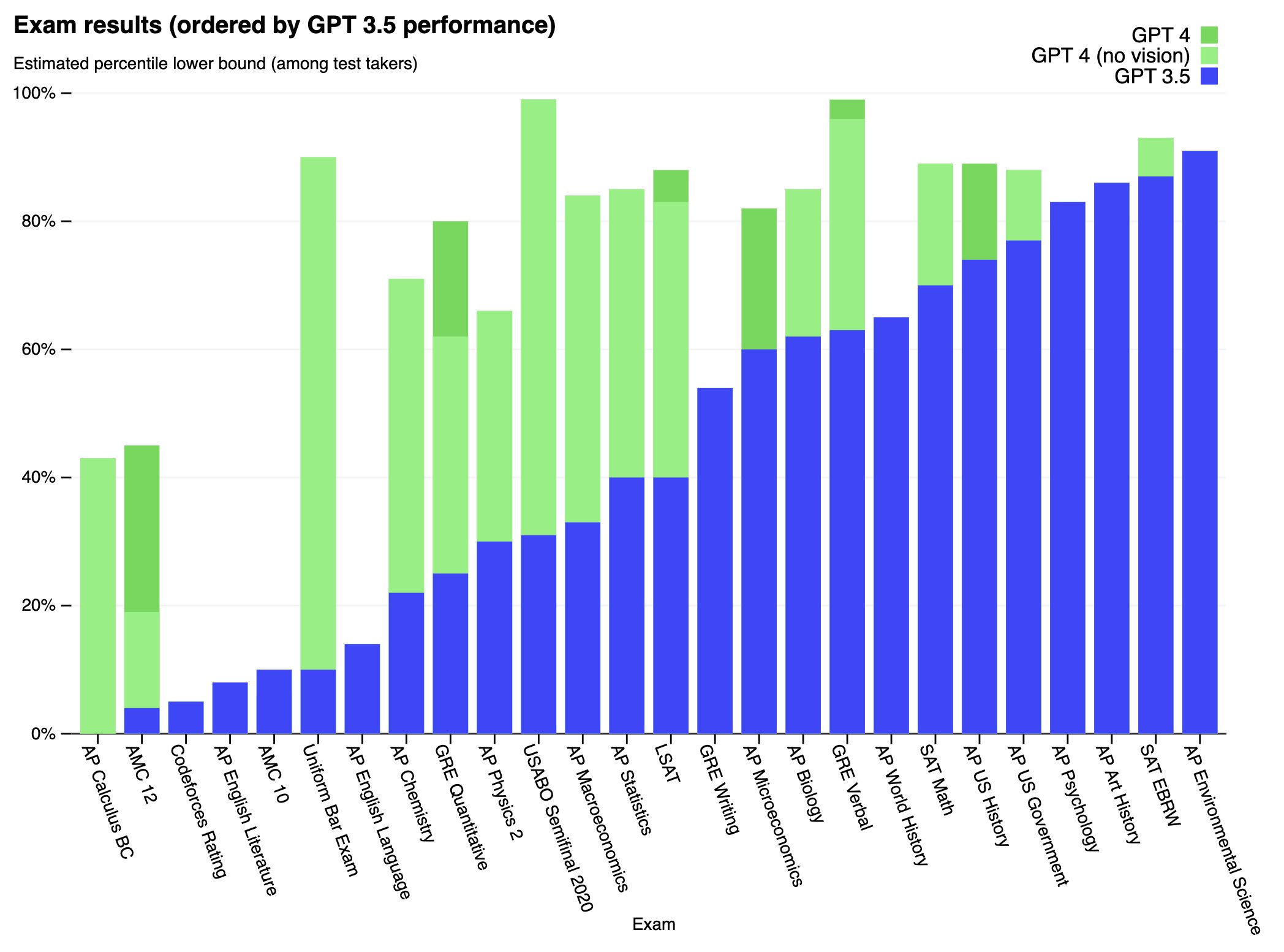

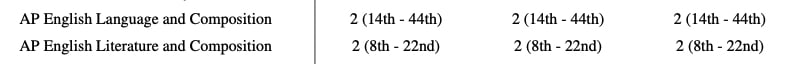

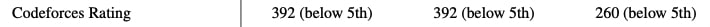

We’ve created GPT-4, the latest milestone in OpenAI’s effort in scaling up deep learning. GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while worse than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

Full paper available here: https://cdn.openai.com/papers/gpt-4.pdf

I think it's probably true that RLHF doesn't reduce to a proper scoring rule on factual questions, even if you ask the model to quantify its uncertainty, because the learned reward function doesn't make good quantitative tradeoffs.

That said, I think this is unrelated to the given graph. If it is forced to say either "yes" or "no", the RLHF model will just give the more likely answer 100% of the time, which will show up as bad calibration on this graph. The point is that for most agents "the probability you say yes" is not the same as "the probability you think the answer is yes." Pretrained models are the exception: for them the two are the same.
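To make the distinction concrete, here is a toy sketch of my own (not anything from the paper). It assumes the agent's internal belief is itself perfectly calibrated, and it compares two hypothetical policies: one that says "yes" with probability equal to its belief, and one that always gives the more likely answer. The function names and the `belief` parameter are made up for illustration.

```python
def sampler_policy(belief: float) -> float:
    """Probability of saying "yes" when outputs are sampled from the belief."""
    return belief


def argmax_policy(belief: float) -> float:
    """Probability of saying "yes" when the more likely answer is always given."""
    return 1.0 if belief >= 0.5 else 0.0


def calibration_gap(belief: float, policy) -> float:
    """Confidence read off the output distribution minus actual accuracy.

    Assumes `belief` is the true probability that the answer is "yes".
    """
    p_yes = policy(belief)
    # Confidence as a calibration plot would measure it:
    # the probability the agent assigns to the answer it favors.
    confidence = max(p_yes, 1.0 - p_yes)
    # How often that favored answer is actually right.
    favors_yes = p_yes >= 0.5
    accuracy = belief if favors_yes else 1.0 - belief
    return confidence - accuracy


if __name__ == "__main__":
    for belief in (0.55, 0.7, 0.9):
        print(
            belief,
            round(calibration_gap(belief, sampler_policy), 2),
            round(calibration_gap(belief, argmax_policy), 2),
        )
```

Under these assumptions the sampling policy has zero gap at every belief level, while the argmax policy looks overconfident by exactly the amount of its real uncertainty, even though both agents hold the same beliefs.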