Reviews 2021

What does this post add to the conversation?

Two pictures of elephant seals.

How did this post affect you, your thinking, and your actions?

I am, if not deeply, then certainly affected by this post. I felt some kind of joy looking at these animals. It calmed my anger and made my thoughts somewhat happier. I started to believe the world can become a better place, and I would like to make it happen. This post made me a better person.

Does it make accurate claims? Does it carve reality at the joints? How do you know?

The title says elephant seals 2 and c...

This post provides a valuable reframing of a common question in futurology: "here's an effect I'm interested in -- what sorts of things could cause it?"

That style of reasoning ends by postulating causes. But causes have a life of their own: they don't just cause the one effect you're interested in, through the one causal pathway you were thinking about. They do all kinds of things.

In the case of AI and compute, it's common to ask

- Here's a hypothetical AI technology. How much compute would it require?

But once we have an answer to this quest...

Zack's series of posts in late 2020/early 2021 were really important to me. They were a sort of return to form for LessWrong, focusing on the valuable parts.

What are the parts of The Sequences which are still valuable? Mainly, the parts that build on top of Korzybski's General Semantics and focus hard core on map-territory distinctions. This part is timeless and a large part of the value that you could get by (re)reading The Sequences today. Yudkowsky's credulity about results from the social sciences and his mind projection fallacying his own mental quirk...

I read this post for the first time in 2022, and I came back to it at least twice.

What I found helpful

- The proposed solution: I actually do come back to the “honor” frame sometimes. I have little Rob Bensinger and Anna Salamon shoulder models that remind me to act with integrity and honor. And these shoulder models are especially helpful when I’m noticing (unhelpful) concerns about social status.

- A crisp and community-endorsed statement of the problem: It was nice to be like “oh yeah, this thing I’m experiencing is that thing that Anna Salamon calls PR

I still think this is great. Some minor updates, and an important note:

Minor updates: I'm a bit less concerned about AI-powered propaganda/persuasion than I was at the time, not sure why. Maybe I'm just in a more optimistic mood. See this critique for discussion. It's too early to tell whether reality is diverging from expectation on this front. I had been feeling mildly bad about my chatbot-centered narrative, as of a month ago, but given how ChatGPT was received I think things are basically on trend.

Diplomacy happened faster than I expected, though in a ...

I continue to believe that the Grabby Aliens model rests on an extremely sketchy foundation, namely the anthropic assumption “humanity is randomly-selected out of all intelligent civilizations in the past present and future”.

For one thing, given that the Grabby Aliens model does not weight civilizations by their populations, it follows that, if the Grabby Aliens model is right, then all the “popular” anthropic priors like SIA and SSA and UDASSA and so on are all wrong, IIUC.

For another (related) thing, in order to believe the Grabby Aliens model, we need t...

A Cached Belief

I find this Wired article an important exploration of an enormous wrong cached belief in the medical establishment: namely that based on its size, Covid would be transmitted exclusively via droplets (which quickly fall to the ground), rather than aerosols (which hang in the air). This justified a bunch of extremely costly Covid policy decisions and recommendations: like the endless exhortations to disinfect everything and to wash hands all the time. Or the misguided attempt to protect people from Covid by closing public parks and playgrounds...

- Oh man, what an interesting time to be writing this review!

- I've now written second drafts of an entire sequence that more or less begins with an abridged (or re-written?) version of "Catching the Spark". The provisional title of the sequence is "Nuts and Bolts Of Naturalism". (I'm still at least a month and probably more from beginning to publish the sequence, though.) This is the post in the sequence that's given me the most trouble; I've spent a lot of the past week trying to figure out where I stand with it.

- I think if I just had to answer "yes" or

I remain pretty happy with most of this, looking back -- I think this remains clear, accessible, and about as truthful as possible without getting too technical.

I do want to grade my conclusions / predictions, though.

(1). I predicted that this work would quickly be exceeded in sample efficiency. This was wrong -- it's been a bit over a year and EfficientZero is still SOTA on Atari. My 3-to-24-month timeframe hasn't run out, but I said that I expected "at least a 25% gain" towards the start of the time, which hasn't happened.

(2). There has been a shift to...

Uncharitable Summary

Most likely there’s something in the intuitions which got lost when transmitted to me via reading this text, but the mathematics itself seems pretty tautological to me (nevertheless I found it interesting since tautologies can have interesting structure! The proof itself was not trivial to me!).

Here is my uncharitable summary:

Assume you have a Markov chain M_0 → M_1 → M_2 → … → M_n → … of variables in the universe. Assume you know M_n and want to predict M_0. The Telephone theorem says two things:

- You don’t need to keep a

I really like this post. It's a crisp, useful insight, made via a memorable concrete example (plus a few others), in a very efficient way. And it has stayed with me.

This is a negative review of an admittedly highly-rated post.

The positives first; I think this post is highly reasonable and well written. I'm glad that it exists and think it contributes to the intellectual conversation in rationality. The examples help the reader reason better, and it contains many pieces of advice that I endorse.

But overall, 1) I ultimately disagree with its main point, and 2) it's way too strong/absolutist about it.

Throughout my life of attempting to have true beliefs and take effective actions, I have quite strongly learned some disti...

ELK was one of my first exposures to AI safety. I participated in the ELK contest shortly after moving to Berkeley to learn more about longtermism and AI safety. My review focuses on ELK’s impact on me, as well as my impressions of how ELK affected the Berkeley AIS community.

Things about ELK that I benefited from

Understanding ARC’s research methodology & the builder-breaker format. For me, most of the value of ELK came from seeing ELK’s builder-breaker research methodology in action. Much of the report focuses on presenting training strategies and pres...

I still think this is basically correct, and have raised my estimation of how important it is in x-risk in particular. The emphasis on doing The Most Important Thing and Making Large Bets pushes people against leaving slack, which I think leads to high value but irregular opportunities for gains being ignored.

This gave a satisfying "click" of how the Simulacra and Staghunt concepts fit together.

Things I would consider changing:

1. Lion Parable. In the comments, John expands on this post with a parable about lion-hunters who believe in "magical protection against lions." That parable is actually what I normally think of when I think of this post, and I was sad to learn it wasn't actually in the post. I'd add it in, maybe as the opening example.

2. Do we actually need the word "simulacrum 3"? Something on my mind since last year's review is "how much work are...

I suppose, with one day left to review 2021 posts, I can add my 2¢ to my own here.

Overall I still like this post. I still think it points at true things and says them pretty well.

I had intended it as a kind of guide or instruction manual for anyone who felt inspired to create a truly potent rationality dojo. I'm a bit saddened that, to the best of my knowledge, no one seems to have taken what I named here and made it their own enough to build a Beisutsu dojo. I would really have liked to see that.

But this post wasn't meant to persuade anyone to do it. It w...

If you judge your social media usage by whether the average post you read is good or bad, you are missing half of the picture. The rapid context switching incurs an invisible cost even if the interaction itself is positive, as does the fact that you expect to be interrupted. "[T]he knowledge that interruptions could come at every time will change your mental state", as Elizabeth puts it.

This is the main object-level message of this post, and I don't have any qualms with it. It's very similar to what Sam Harris talks about a lot (e.g., here), and it seems t...

Epistemic Status

I am an aspiring selection theorist and I have thoughts.

Why Selection Theorems?

Learning about selection theorems was very exciting. It's one of those concepts that felt so obviously right. A missing component in my alignment ontology that just clicked and made everything stronger.

Selection Theorems as a Compelling Agent Foundations Paradigm

There are many reasons to be sympathetic to agent foundations style safety research as it most directly engages the hard problems/core confusions of alignment/safety. However, one concer...

I'm not sure I use this particular price mechanism fairly often, but I think this post was involved in me moving toward often figuring out fair prices for things between friends and allies, which I think helps a lot. The post puts together lots of the relevant intuitions, which is what's so helpful about it. +4

The post is still largely up-to-date. In the intervening year, I mostly worked on the theory of regret bounds for infra-Bayesian bandits, and haven't made much progress on open problems in infra-Bayesian physicalism. On the other hand, I also haven't found any new problems with the framework.

The strongest objection to this formalism is the apparent contradiction between the monotonicity principle and the sort of preferences humans have. While my thinking about this problem evolved a little, I am still at a spot where every solution I know requires biting a...

Alexandros Marinos (LW profile) has a long series where he reviewed Scott's post:

The Potemkin argument is my public peer review of Scott Alexander’s essay on ivermectin. In this series of posts, I go through that essay in detail, working through the various claims made and examining their validity. My essays will follow the structure of Scott’s essay, structured in four primary units, with additional material to follow

This is his summary of the series, and this is the index. Here's the main part of the index:

...Introduction

Part 1: Introduction (TBC)

I remember reading this post and thinking it is very good and important. I have since pretty much forgotten about it and its insights, probably because I didn't think much about GDP anyway. Rereading the post, I maintain that it is very good and important. Any discussion of GDP should proceed with an understanding of what this post says, which I summarized to myself like so (it's mostly a combination of edited excerpts from the post):

...Real GDP is usually calculated by adding up the total dollar value of all goods, using prices from some recent year (every few ye

The first elephant seal barely didn't make it into the book, but this is our last chance. Will the future readers of LessWrong remember the glory of elephant seal?

Most of the writing on simulacrum levels has left me feeling less able to reason about them, as if they are too evil to contemplate. This post engaged with them as one fact in the world among many, which was already an improvement. I've found myself referring to this idea several times over the last two years, and it left me more alert to looking for other explanations in this class.

Thermodynamics is the deep theory behind steam engine design (and many other things) -- it doesn't tell you how to build a steam engine, but to design a good one you probably need to draw on it somewhat.

This post feels like a gesture at a deep theory behind truth-oriented forum / community design (and many other things) -- it certainly doesn't help tell you how to build one, but you have to think at least around what it talks about to design a good one. Also applicable to many other things, of course.

It also has the virtue of being very short. Per-word, one of my favorite posts.

I read this sequence and then went through the whole thing. Without this sequence I'd probably still be procrastinating / putting it off. I think everything else I could write in review is less important than how directly this impacted me.

Still, a review: (of the whole sequence, not just this post)

First off, it signposts well what it is and who it's for. I really appreciate when posts do that, and this clearly gives the top level focus and what's in/out.

This sequence is "How to do a thing" - a pretty big thing, with a lot of steps and bran...

Some others on the Lightcone team and I have continued to use this exercise from time to time. Jacob Lagerros got really into it, and would ask us to taboo 'should' whenever someone made a vague claim about what we should do. In all honesty this was pretty annoying. :P

But, it highlighted another use of tabooing 'should', which is checking what assumptions are shared between people. (i.e. John's post mostly seems to be addressing "single player mode", where you notice your own shoulds and what ignorance that conceals. But sometimes, Alice totally under...

Summary

I summarize this post in a slightly reverse order. In AI alignment, one core question is how to think about utility maximization. What are agents doing that maximize utility? How does embeddedness play into this? What can we prove about such agents? Which types of systems become maximizers of utility in the first place?

This article reformulates expected utility maximization in equivalent terms in the hopes that the new formulation makes answering such questions easier. Concretely, a utility function u is given, and the goal of a u-maximizer is to ch...

The combination of this post, and an earlier John post (Parable of the Dammed) has given me some better language for understanding what's going on in negotiations and norm-setting, two topics that I think are quite valuable. The concept of "you could actually move the Empire State Building, maybe, and that'd affect the Schelling point of meeting places", was a useful intuition pump for both "you can move norm Schelling points around" (as well as how difficult to think of that task as).

Reviewing this quickly because it doesn't have a review.

I've linked this post to several people in the last year. I think it's valuable for people (especially junior researchers or researchers outside of major AIS hubs) to be able to have a "practical sense" of what doing independent alignment research can be like, how the LTFF grant application process works, and some of the tradeoffs of doing this kind of work.

This seems especially important for independent conceptual work, since this is the path that is least well-paved (relative to empirical work...

I like this post in part because of the dual nature of the conclusion, aimed at two different audiences. Focusing on the cost of implementing various coordination schemes seems... relatively unexamined on LW, I think. The list of life-lessons is intelligible, actionable, and short.

On the other hand, I think you could probably push it even further in the "Secret of Our Success" tradition / culture direction. Because there's... a somewhat false claim in it: "Once upon a time, someone had to be the first person to invent each of these concepts."

This seems false ...

Returning to this essay, it continues to be my favorite Paul post (even What Failure Looks Like only comes second), and I think it's a better way to engage with Paul's work than anything else (including the Eliciting Latent Knowledge document, which feels less grounded in the x-risk problem, is less in Paul's native language, and gets detailed on just one idea for 10x the space thus communicating less of the big picture research goal). I feel I can understand all the arguments made in this post. I think this should be mandatory reading before reading Elici...

Epistemic Status: I don't actually know anything about machine learning or reinforcement learning and I'm just following your reasoning/explanation.

From each state, we can just check each possible action against the action-value function $q(s_t, a_t)$, and choose the action that returns the highest value from the action-value function. Greedy search against the action-value function for the optimal policy is thus equivalent to the optimal policy. For this reason, many algorithms try to learn the action-value function for the optimal policy.
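To make the quoted passage concrete, here is a minimal sketch of greedy action selection against a tabular action-value function. The table `q`, the state and action names, and the helpers `greedy_action` and `greedy_policy` are all hypothetical, invented for illustration; the reviewed post may set things up differently. The resulting policy is only optimal if `q` really is the action-value function of the optimal policy.

```python
from typing import Dict, Hashable, Iterable, Tuple

State = Hashable
Action = Hashable
QTable = Dict[Tuple[State, Action], float]


def greedy_action(q: QTable, state: State, actions: Iterable[Action]) -> Action:
    """Return the action with the highest q(state, action)."""
    return max(actions, key=lambda a: q[(state, a)])


def greedy_policy(
    q: QTable, states: Iterable[State], actions: Iterable[Action]
) -> Dict[State, Action]:
    """Build a deterministic policy by acting greedily in every state."""
    actions = list(actions)  # reuse the same action list for each state
    return {s: greedy_action(q, s, actions) for s in states}


# Toy action-value table (made-up numbers, purely illustrative).
q = {
    ("start", "left"): 0.2,
    ("start", "right"): 1.0,
    ("middle", "left"): 0.7,
    ("middle", "right"): 0.1,
}

policy = greedy_policy(q, ["start", "middle"], ["left", "right"])
print(policy)
```

The design point is that no search over future trajectories happens at decision time: all of the lookahead is assumed to be already baked into the values stored in `q`.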

This do...

This post is in my small list of +9s that I think count as a key part of how I think, where the post was responsible for clarifying my thinking on the subject. I've had a lingering confusion/nervousness about having extreme odds (anything beyond 100:1) but the name example shows that seeing odds ratios of 20,000,000:1 is just pretty common. I also appreciated Eliezer's corollary: "most beliefs worth having are extreme", this also influences how I think about my key beliefs.

(Haha, I just realized that I curated it back when it was published.)
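For readers who want to see how far apart odds like 100:1 and 20,000,000:1 are, here is a small sketch that converts odds into probabilities and into bits of evidence, and applies the standard odds form of Bayes' rule. The function names are hypothetical, and the prior used in the last lines is an assumption chosen only to match the 20,000,000:1 figure quoted in the review.

```python
import math


def odds_to_probability(odds_for: float, odds_against: float = 1.0) -> float:
    """Convert odds of odds_for:odds_against into a probability."""
    return odds_for / (odds_for + odds_against)


def odds_to_bits(odds_for: float, odds_against: float = 1.0) -> float:
    """Express an odds ratio as bits (log base 2 of the ratio)."""
    return math.log2(odds_for / odds_against)


def posterior_odds(prior_odds: float, likelihood_ratio: float) -> float:
    """Odds form of Bayes' rule: posterior odds = prior odds * likelihood ratio."""
    return prior_odds * likelihood_ratio


for odds in (100, 20_000_000):
    print(odds, odds_to_probability(odds), odds_to_bits(odds))

# Assumed prior of 1:20,000,000 against a hypothesis, updated on evidence
# with a 20,000,000:1 likelihood ratio, lands at roughly even odds.
print(posterior_odds(1 / 20_000_000, 20_000_000))
```

In bits, a 20,000,000:1 ratio is a little over 24 doublings of the odds, which is a lot but not astronomically more than the roughly 7 bits behind 100:1.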

Two years later, I suppose we know more than we did when the article was written. I would like to read some postscript explaining how well this article has aged.

Both this document and John himself have been useful resources to me as I launch into my own career studying aging in graduate school. One thing I think would have been really helpful here is more thorough citations and sourcing. It's hard to follow John's points ("In sarcopenia, one cross-section of the long muscle cell will fail first - a “ragged red” section - and then failure gradually spreads along the length.") and trace them back to any specific source, and it's also hard to know which of the synthetic insights are original to John and which are in...

I think this post was valuable for starting a conversation, but isn't the canonical reference post on Frame Control I'd eventually like to see in the world. But re-reading the comments here, I am struck by the wealth of great analysis and ideas in the ensuing discussion

John Wentworth's comment about Frame Independence:

...The most robust defense against abuse is to foster independence in the corresponding domain. [...] The most robust defense against financial abuse is to foster financial independence [...] if I am not independent in some domain, then I am

Weakly positive on this one overall. I like Coase's theory of the firm, and like making analogies with it to other things. I don't think this application felt like it quite worked to me, and I'm trying to write up why.

One thing I think feels off is an incomplete understanding of the Coase paper. What I think the article gets correct: Coase looks at the difference between markets (economists' preferred efficient mechanism) and firms / corporations, and observes that transaction costs (for people these would be contracts, but in general all tr...

Summary

- public discourse of politics is too focused on meta and not enough focused on object level

- the downsides are primarily in insufficient exploration of possibility space

Definitions

- "politics" is topics related to government, especially candidates for elected positions, and policy proposals

- opposite of meta is object level - specific policies, or specific impacts of specific actions, etc

- "meta" is focused on intangibles that are an abstraction away from some object-level feature, X, e.g. someone's beliefs about X, or incentives around X, or media coverage v

I was less black-pilled when I wrote this - I also had the idea that though my own attempts to learn AI safety stuff had failed spectacularly perhaps I could encourage more gifted people to try the same. And given my skills or lack thereof, I was hoping this may be some way I could have an impact. As trying is the first filter. Though the world looks scarier now than when I wrote this, to those of high ability I would still say this: we are very close to a point where your genius will not be remarkable, where one can squeeze thoughts more beautiful and clear than you have any hope to achieve from a GPU. If there was ever a time to work on the actually important problems, it is surely now.

Self-Review

I feel pretty happy with this post in hindsight! Nothing major comes to mind that I'd want to change.

I think that agency is a really, really important concept, and one of the biggest drivers of ways my life has improved. But the notion of agency as a legible, articulated concept (rather than just an intuitive notion) is foreign to a lot of people, and jargon-y. I don't think there was previously a good post cleanly explaining the concept, and I'm very satisfied that this one exists and that I can point people to it.

I particularly like my framin...

My first reaction when this post came out was being mad Duncan got the credit for an idea I also had, and wrote a different post than the one I would have written if I'd realized this needed a post. But at the end of the day the post exists and my post is imaginary, and it has saved me time in conversations with other people because now they have the concept neatly labeled.

This post consists of comments on summaries of a debate about the nature and difficulty of the alignment problem. The original debate was between Eliezer Yudkowsky and Richard Ngo but this post does not contain the content from that debate. This post is mostly commentary by Jaan Tallinn on that debate, with comments by Eliezer.

The post provides a kind of fascinating level of insight into true insider conversations about AI alignment. How do Eliezer and Jaan converse about alignment? Sure, this is a public setting, so perhaps they communicate differentl...

I like this because it reminds me:

- before complaining about someone not making the obvious choice, first ask if that option actually exists (e.g. are they capable of doing it?)

- before complaining about a bad decision, to ask if the better alternatives actually exist (people aren't choosing a bad option because they think it's better than a good option; they're choosing it because all other options are worse)

However, since I use it for my own thinking, I think of it more as an imaginary/mirage option instead of a fabricated option. It is indeed an option...

I was always surprised that small changes in public perception, a slight change in consumption or political opinion can have large effects. This post introduced the concept of social behaviour curves to me, and it feels like it explains quite a lot of things. The writer presents some example behaviours and movements (like why revolutions start slowly or why societal changes are sticky), and then provides clear explanations for them using this model. This shows how to use social behaviour curves and verifies some of the model's predictions at the s...

I've written a bunch elsewhere about object-level thoughts on ELK. For this review, I want to focus instead on meta-level points.

I think ELK was very well-made; I think it did a great job of explaining itself with lots of surface area, explaining a way to think about solutions (the builder-breaker cycle), bridging the gap between toy demonstrations and philosophical problems, and focusing lots of attention on the same thing at the same time. In terms of impact on the growth and development of the AI safety community, I think this is one of the most importa...

This post has a lot of particular charms, but also touches on a generally under-represented subject in LessWrong: the simple power of deliberate practice and competence. The community seems saturated with the kind of thinking that goes [let's reason about this endeavor from all angles and meta-angles and find the exact cheat code to game reality] at the expense of the simple [git gud scrub]. Of course, gitting gud at reason is one very important aspect of gitting gud in general, but only one aspect.

The fixation on calibration and correctness in this commun...

Self-Review

If you read this post, and wanted to put any of it into practice, I'd love to hear how it went! Whether you tried things and it failed, tried things and it worked, or never got round to trying anything at all. It's hard to reflect on a self-help post without data on how much it helped!

Personal reflections: I overall think this is pretty solid advice, and am very happy I wrote this post! I wrote this a year and a half ago, about an experiment I ran 4 years ago, and given all that, this holds up pretty well. I've refined my approach a fair bit, b...

This post has continued to be an important part of Ray's sense of How To Be a Good Citizen, and was one of the posts feeding into the Coordination Frontier sequence.

As an experiment, I'm going to start by listing the things that stuck with me, without rereading the post, and then note down things that seem important upon actual re-reading:

Things that stuck with me

- If you're going to break an agreement, let people know as early as possible.

- Try to take on as much of the cost of the renege-ing as you can.

- Insofar as you can't take on the costs of renege-ing in

There's a scarcity of stories about how things could go wrong with AI which are not centered on the "single advanced misaligned research project" scenario. This post (and the mentioned RAAP post by Critch) helps partially fill that gap.

It definitely helped me picture / feel some of what some potential worlds look like, to the degree I currently think something like this -- albeit probably slower, as mentioned in the story -- is more likely than the misaligned research project disaster.

It also is a (1) pretty good / fun story and (2) mentions the elements within the story which the author feels are unlikely, which is virtuous and helps prevent higher detail from being mistaken for plausibility.

- Paul's post on takeoff speed had long been IMO the last major public step in the dialogue on this subject (not forgetting to honorably mention Katja's crazy discontinuous progress examples and Kokotajlo's arguments against using GDP as a metric), and I found it exceedingly valuable to read how it reads to someone else who has put in a great deal of work into figuring out what's true about the topic, thinks about it in very different ways, and has come to different views on it. I found this very valuable for my own understanding of the subject, and I felt I

A great example of taking the initiative and actually trying something that looks useful, even when it would be weird or frowned upon in normal society. I would like to see a post-review, but I'm not even sure if that matters. Going ahead and trying something that seems obviously useful, but is weird and that no one else is doing, is already hard enough. This post was inspiring.

This post very cleverly uses Conway's Game of Life as an intuition pump for reasoning about agency in general. I found it to be both compelling, and a natural extension of the other work on LW relating to agency & optimization. The post also spurred some object-level engineering work in Life, trying to find a pattern that clears away Ash. It also spurred people in the comments to think more deeply about the implications of the reversibility of the laws of physics. It's also reasonably self-contained, making it a good candidate for inclusion in the Review books.

I think the general claim this post makes is

- incredibly important

- well argued

- non obvious to many people

I think there's an objection here that value != consumption of material resources, hence the constraints on growth may be far higher than the author calculates. Still, the article is great.

Every few weeks I have the argument with someone that clearly AI will increase GDP drastically before it kills everyone. The arguments in this post are usually my first response. GDP doesn't mean what you think it means, and we don't actually really know how to measure economic output in the context of something like an AI takeoff, and this is important because that means you can't use GDP as a fire alarm, even in slow takeoff scenarios.

Partly I just want to signal-boost this kind of message.

But I also just really like the way this post covers the topic. I didn't have words for some of these effects before, like how your goals and strategies might change even if your values stay the same.

The whole post feels like a great invitation to the topic IMO.

I didn't reread it in detail just now. I might have more thoughts were I to do so. I just want this to have a shot at inclusion in final voting. Getting unconfused about self-love is, IMO, way more important than most models people discuss on this site.

I consider this post one of the most important ever written on issues of timelines and AI doom scenarios. Not because it's perfect (some of its assumptions are unconvincing), but because it highlights a key aspect of AI Risk and the alignment problem which is so easy to miss coming from a rationalist mindset: it doesn't require an agent to take over the whole world. It is not about agency.

What RAAPs show instead is that even in a purely structural setting, where agency doesn't matter, these problems still crop up!

This insight was already present in Drexle...

I did eventually get covid.

As was the general pattern with this whole RADVAC episode, it's ambiguous how much the vaccine helped. I caught covid in May 2022, about 15 months after the radvac doses, and about 13 months after my traditional vaccine (one shot J&J). In the intervening 15 months, my general mentality about covid was "I no longer need to give a shit"; I ate in restaurants multiple times per week, rode BART to and from the office daily, went to crowded places sometimes, traveled, and generally avoided wearing a mask insofar as that was socially acceptable.

I really liked this post since it took something I did intuitively and haphazardly and gave it a handle by providing the terms to start practicing it intentionally. This had at least two benefits:

First it allowed me to use this technique in a much wider set of circumstances, and to improve the voices that I already have. Identifying the phenomenon allowed it to move from a knack which showed up by luck, to a skill.

Second, it allowed me to communicate the experience more easily to others, and open the possibility for them to use it as well. Unlike many less...

It's rare that I encounter a LessWrong post that opens up a new area of human experience - especially rare for a post that doesn't present an argument or a new interpretation or schema for analysing the world.

But this one does. A simple review, with quotes, of an ethnographical study of late 19th century Russian peasants, opened up a whole new world and potentially changed my vision of the past.

Worth it for its many book extracts and choice of subject matter.

I was impressed by this post. I don't have the mathematical chops to evaluate it as math -- probably it's fairly trivial -- but I think it's rare for math to tell us something so interesting and important about the world, as this seems to do. See this comment where I summarize my takeaways; is it not quite amazing that these conclusions about artificial neural nets are provable (or provable-given-plausible-conditions) rather than just conjectures-which-seem-to-be-borne-out-by-ANN-behavior-so-far? (E.g. conclusions like "Neural nets trained on very complex ...

One of the posts which has been sitting in my drafts pile the longest is titled "Economic Agents Who Have No Idea What's Happening". The draft starts like this:

...Eight hundred years ago, a bloomery produces some iron. The process is not tightly controlled - the metal may contain a wide range of carbon content or slag impurities, and it’s not easy to measure the iron’s quality. There may be some externally-visible signs, but they’re imperfect proxies for the metal’s true composition. The producer has imperfect information about their own outputs.

Tha

The world is a complicated and chaotic place. Anything could interact with everything, and some of these interactions are good. This post describes how general paralysis of the insane can be cured with malaria. At least if the patients do not die during the treatment.

If late-stage syphilis (general paralysis) isn't treated, then patients probably die within 3-5 years, with progressively worse symptoms each year. So even when 5-20% of them died immediately when the treatment started, they still had better survival rates at one and five years. A morbid example of an expected value choice: w...

I ended up referring back to this post multiple times when trying to understand the empirical data on takeoff speeds and, in particular, when trying to estimate the speed of algorithmic progress independent of hardware progress.

I also was quite interested in the details here in order to understand the absolute returns to more intelligence/compute in different domains.

One particular follow-up post I would love to see is to run the same study, but this time with material disadvantage. In particular, I would really like to see, in both chess and go, ...

There are lots of anecdotes about choosing the unused path and being the disruptor, but I feel this post explains the idea more clearly, with better analogies and boundaries.

To achieve a goal you have to build a lot of skills (deliberate practice) and apply them when it is really needed (maximum performance). Less is said about searching for the best strategy and combination of skills. I think "deliberate play" is a good concept for this because it shows that strategy research is a small but important part of playing well.

This is a great theorem that's stuck around in my head this last year! It's presented clearly and engagingly, but more importantly, the ideas in this piece are suggestive of a broader agent foundations research direction. If you wanted to intimate that research direction with a single short post that additionally demonstrates something theoretically interesting in its own right, this might be the post you'd share.

I think this is an excellent response (I'd even say, companion piece) to Joe Carlsmith's also-excellent report on the risk from power-seeking AI. On a brief re-skim I think I agree with everything Nate says, though I'd also have a lot more to add and I'd shift emphasis around a bit. (Some of the same points I did in fact make in my own review of Joe's report.)

Why is it important for there to be a response? Well, the 5% number Joe came to at the end is just way too low. Even if you disagree with me about that, you'll concede that a big fraction of the ratio...

This strikes me as a core application of rationality. Learning to notice implicit "should"s and tabooing them. The example set is great.

Some of the richness is in the comments. Raemon's in particular highlights an element that strikes me as missing: The point is to notice the feeling of judging part of the territory as inherently good or bad, as opposed to recognizing the judgment as about your assessment of how you and/or others relate to the territory.

But it's an awful lot to ask of a rationality technique to cover all cases related to its domain.

If all ...

This post is a solid list of advice on self-studying. It matches the Review criterion of posts that continue to affect my behavior. (I don't explicitly think back to the post, but I do occasionally bring up its advice in my mind, like "ah yes, it's actually good to read multiple textbooks concurrently and not necessarily finish them".)

I actually disagree with the "most important piece of advice", which is to use spaced repetition software. Multiple times in my life, I have attempted to incorporate an SRS habit into my life, reflecting on why it previously ...

In many ways, this post is frustrating to read. It isn't straightforward, it needlessly insults people, and it mixes irrelevant details with the key ideas.

And yet, as with many of Eliezer's posts, its key points are right.

What this post does is uncover the main epistemological mistakes made by almost everyone trying their hands at figuring out timelines. Among others, there is:

- Taking arbitrary guesses within a set of options that you don't have enough evidence to separate

- Piling arbitrary assumption on arbitrary assumption, leading to completely uninforma

This was a very interesting read. Aside from just illuminating history and how people used to think differently, I think this story has a lot of implications for policy questions today.

The go-to suggestion for pretty much any structural ill in the world today is to "raise awareness" and "appoint someone". These two things often make the problem worse. "Raising awareness" mostly acts to give activists moral license to do nothing practical about the problem, and can even backfire by making the problem a political issue. For example, a campaign to raise awar...

I've thought a good amount about Finite Factored Sets in the past year or two, but I do sure keep going back to thinking about the world primarily in the form of Pearlian causal influence diagrams, and I am not really sure why.

I do think this one line by Scott at the top gave me at least one pointer towards what was happening:

but I'm trained as a combinatorialist, so I'm giving a combinatorics talk upfront.

In the space of mathematical affinities, combinatorics is among the branches of math I feel most averse to, and I think that explains a good...

One particularly important thing I got out of this post was crystallizing a complaint I sometimes have about people using anthropic reasoning. If someone says there's trillion-to-1 evidence for (blah) based on anthropics, it's actually not so crazy to say "well I don't believe (blah) anyway, based on the evidence I get from observing the world", it seems to me.

Or less charitably to myself, maybe this post is helping me rationalize my unjustified and unthinking gut distrust of anthropic reasoning :-P

Anyway, great post.

I went back and forth on this post a lot. Ultimately the writing is really fantastic and I appreciate the thought and presence Joe put into it. It doesn't help me understand why someone would care about ant experience but it does help me understand the experience of caring about ant sentience.

This post helped me understand the motivation for the Finite Factored Sets work, which I was confused about for a while. The framing of agency as time travel is a great intuition pump.

I think this post points towards something important, which is a bit more than what the title suggests, but I have a problem describing it succinctly. :)

Computer programming is about creating abstractions, and leaky abstractions are a common enough occurrence to have their own wiki page. Most systems are hard to comprehend as a whole, and a human has to break them into parts which can be understood individually. But these are not perfect cuts, the boundaries are wobbly, and the parts "leak" into each other.

Most commonly these leaks happen because of a tech...

I think this post makes an important point -- or rather, raises a very important question, with some vivid examples to get you started. On the other hand, I feel like it doesn't go further, and probably should have -- I wish it e.g. sketched a concrete scenario in which the future is dystopian not because we failed to make our AGIs "moral" but because we succeeded, or e.g. got a bit more formal and complemented the quotes with a toy model (inspired by the quotes) of how moral deliberation in a society might work, under post-AGI-alignment conditions, and ho...

This post is a good review of a book and an introduction to a space where small regulatory reform could result in great gains, and it also changed my mind about LNT. As an introduction to the topic, more focus on economic details would be great, but you can't be all things to all men.

I really, really liked this idea. In some sense it's just reframing the idea of trade-offs. But it's a really helpful (for me) reframe that makes it feel concrete and real to me.

I'd long been familiar with "the expert blind spot" — the issue where experts will forget what it's like to see like a non-expert and will try to teach from there. Like when aikido teachers would tell me to "just relax, act natural, and let the technique just happen on its own." That makes sense if you've been practicing that technique for a decade! But it's awful advice to give a ...

This post gave me a piece of jargon that I've found useful since reading it. In some sense, the post is just saying "sometimes people don't do Bayesian updating", which is a pretty cold take. But I found it useful to read through a lot of examples and discussion of what the deal might be. In my practice of everyday rationality, this post made it easier for me to stop and ask things like, "Is this a trapped prior? Might my [or others'] reluctance to update be due to whatever mechanisms cause a trapped prior?"

I liked this story enough to still remember it, separately from the original Sort By Controversial story. Trade across moral divide is a useful concept to have handles for.

The best compliment I can give this post is that the core idea seems so obviously true that it seems impossible that I haven't thought of or read it before. And yet, I don't think I have.

Aside from the core idea that it's scientifically useful to determine the short list of variables that fully determine or mediate an effect, the secondary claim is that this is the main type of science that is useful and the "hypothesis rejection" paradigm is a distraction. This is repeated a few times but not really proven, and it's not hard to think of counterexamples: m...

I've referenced this post, or at least this concept, in the past year. I think it's fairly important. I've definitely seen this dynamic. I've felt it as a participant who totally wants a responsible authority figure to look up to and follow, and I've seen it in how people respond to various teacher-figures in the rationalsphere.

I think the rationalsphere lucked out in its founding members being pretty wise, and going out of their way to try to ameliorate a lot of the effects here, and still those people end up getting treated in a weird cult-leader-y way even...

This post is among the most concrete, actionable, valuable posts I read from 2021. Earlier this year, when I was trying to get a handle on the current-state-of-AI, this post transformed my opinion of Interpretability research from "man, this seems important but it looks so daunting and I can't imagine interpretability providing enough value in time" to "okay, I actually see a research framework I could expect to be scalable."

I'm not a technical researcher so I have trouble comparing this post to other Alignment conceptual work. But my impression, from seein...

This post is the most comprehensive answer to the question "what was really going on at Leverage Research" anyone has ever given, and that question has been of interest to many in the LW community. I'm happy to see it's been nominated for the year-end review; thank you to whoever did that!

Since this got nominated, now's a good time to jump in and note that I wish that I had chosen different terminology for this post.

I was intending for "final crunch time" to be a riff on Eliezer saying, here, that we are currently in crunch time.

This is crunch time for the whole human species, and not just for us but for the intergalactic civilization whose existence depends on us. This is the hour before the final exam and we're trying to get as much studying done as possible.

I said explicitly, in this post, "I'm going to refer to this last stretch of a fe...

This might be the lowest karma post that I've given a significant review vote for. (I'm currently giving it a 4). I'd highly encourage folk to give it A Think.

This post seems to be asking an important question of how to integrate truthseeking and conflict theory. I think this is probably one of the most important questions in the world. Conflict is inevitable. Truthseeking is really important. They are in tension. What do we do about that?

I think this is an important civilizational question. Most people don't care nearly enough about truthseeking in the fi...

This post is on a very important topic: how could we scale ideas about value extrapolation or avoiding goal misgeneralisation... all the way up to superintelligence? As such, its ideas are very worth exploring and getting to grips with. It's a very important idea.

However, the post itself is not brilliantly written, and is more of "idea of a potential approach" than a well crafted theory post. I hope to be able to revisit it at some point soon, but haven't been able to find or make the time, yet.

I haven't thought about Oliver Sipple since I posted my original comment. Revisiting it now, I think it is a juicier consequentialist thought experiment than the trolley problem or the surgeon problem. Partly, this is because the ethics of the situation depend so much on which aspect you examine, at which time, and illustrates how deeply entangled ethical discourse is with politics and PR.

It's also perfectly plausible to me that Oliver's decline was caused by the psychological effect of unwanted publicity and the dissolution of his family ties. But I'm not...

The ideas in this post greatly influence how I think about AI timelines, and I believe they comprise the current single best way to forecast timelines.

A +12-OOMs-style forecast, like a bioanchors-style forecast, has two components:

- an estimate of (effective) compute over time (including factors like compute getting cheaper and algorithms/ideas getting better in addition to spending increasing), and

- a probability distribution on the (effective) training compute requirements for TAI (or equivalently the probability that TAI is achievable as a function of tr

I upvoted this highly for the review. I think of this as a canonical reference post now for the sort of writing I want to see on LessWrong. This post identified an important problem I've seen a lot of people struggle with, and writes out clear instructions for it.

I guess a question I have is "how many people read this and had it actually help them write more quickly?". I've personally found the post somewhat helpful, but I think mostly already had the skill.

This is a relatively banal meta-commentary on reasons people sometimes give for doing worst-case analysis, and the differences between those reasons. The post reads like a list of things with no clear through-line. There is a gesture at an important idea from a Yudkowsky post (the logistic success curve idea) but the post does not helpfully expound that idea. There is a kind of trailing-off towards the end of the post as things like "planning fallacy" seem to have been added to the list with little time taken to place them in the context of the other thing...

This post is pretty important to me. "Understanding frames" and "Understanding coordination theory" are like my two favorite things, and this post ties them together.

When I previously explored frames, I was mostly thinking through the lens of "how do people fail to communicate." I like that this post goes into the more positively-focused "what are frames useful for, and how might you design a good frame on purpose?". Much discussion of frames on LW has been a bit vague and woo-y. I like that this post frames Frames as a technical product, and approac...

A good review of work done, which shows that the writer is following their research plan and following up their pledge to keep the community informed.

The contents, however, are less relevant, and I expect that they will change as the project goes on. I.e. I think it is a great positive that this post exists, but it may not be worth reading for most people, unless they are specifically interested in research in this area. They should wait for the final report, be it positive or negative.

I liked this post when I read it. It matched my sense that (e.g.) using "outside view" to refer to Hanson's phase transition model of agriculture->industry->AI was overstating the strength of the reasoning behind it.

But I've found that I've continued to use the terms "inside view" and "outside view" to refer to the broad categories sketched out in the two Big Lists O' Things. Both in my head and when speaking. (Or I'll use variants like "outside viewish" or similar.)

I think there is a meaningful distinction here: the reasoning moves on the "Outside" ...

This post provides a maximally clear and simple explanation of a complex alignment scheme. I read the original "learning the prior" post a few times but found it hard to follow. I only understood how the imitative generalization scheme works after reading this post (the examples and diagrams and clear structure helped a lot).

I like this research agenda because it provides a rigorous framing for thinking about inductive biases for agency and gives detailed and actionable advice for making progress on this problem. I think this is one of the most useful research directions in alignment foundations since it is directly applicable to ML-based AI systems.

I wrote up a bunch of my high-level views on the MIRI dialogues in this review, so let me say some things that are more specific to this post.

Since the dialogues were written, I have kept coming back to the question of the degree to which consequentialism is a natural abstraction that will show up in AI systems we train, and while this dialogue had some frustrating parts where communication didn't go perfectly, I still think it has some of the best intuition pumps for how to think about consequentialism in AI systems.

The other part I liked the most w...

The Georgism series was my first interaction with a piece of economic theory that tried to make sense by building a different model than anything I had seen before. It was clear and engaging. It has been a primary motivator in my learning more about economics.

I'm not sure how the whole series would work in the books, but the review of Progress and Poverty was a great introduction to all the main ideas.

I have a very contrarian take on Gödel's Incompleteness Theorem, which is that it's widely misunderstood, most things people conclude from it are false, and it's actually largely irrelevant. This is why I was excited to read a review of this book I've heard so much fuss about, to see if it would change my mind.

Well, it didn't. Sam himself doesn't think the second half of the book (where we talk about conclusions) is all that strong, and I agree. So as an exploration of what to do with the theorem, this review isn't that useful; it's more of a negative exam...

I'm torn about this one. On the one hand, it's basically a linkpost; Katja adds some useful commentary but it's not nearly as important/valuable as the quotes from Lewis IMO. On the other hand, the things Lewis said really need to be heard by most people at some point in their life, and especially by anyone interested in rationality, and Katja did LessWrong a service by noticing this & sharing it with the community. I tentatively recommend inclusion.

The comments have some good discussion too.

This post has tentatively entered my professional worldview. "Big if true."

I'm looking at this through the lens of "how do we find/create the right people to help solve x-risk and other key urgent problems." The track record of AI/rationalist training programs doesn't seem that great. (i.e. they seem to typically work mostly via selection[1]).

In the past year, I've seen John attempt to make an actual training regimen for solving problems we don't understand. I feel at least somewhat optimistic about his current training attempts, partly because his m...

I like this post because it:

- Focuses on a machine which is usually non-central to accounts of the industrial revolution (at least in others which I've read), which makes it novel and interesting to those interested in the roots of progress

- Has a high ratio of specific empirical detail to speculation

- Separates speculation from historical claims pretty cleanly

So, "Don't Shoot the Dog" is a collection of parenting advice based solely on the principle of reinforcement learning, i.e., the idea that kids do things more if they are rewarded and less if they're punished. It gets a lot out of this, including things that many parents do wrong. And the nicest thing is that, because everything is based on such a simple idea, most of the advice is self-evident. Pretty good, considering that learning tips are often controversial.

For example, say you ask your kid to clean her room, but she procrastinates on the task. When s...

(Self-review.)

I was surprised by how well-received this was!

I was also a bit disappointed at how many commenters focused on the AI angle. Not that it necessarily matters, but to me, this isn't a story about AI. (I threw in the last two paragraphs because I wasn't sure how to end it in a way that "felt like an ending.")

To me, this story is an excuse for an exploration about how concepts work (inspired by an exchange with John Wentworth on "Unnatural Categories Are Optimized for Deception"). The story-device itself is basically a retread of "That Alien Messa...

This is a post that gave me (an ML noob) a great deal of understanding of how language models work — for example the discussion of the difference between "being able to do a task" and "knowing when to perform that task" is one I hadn't conceptualized before reading this post, and makes a large difference in how to think about the improvements from scaling. I also thought the characterization of the split between different schools of thought and what they pay attention to was quite illuminating.

I don't have enough object-level engagement for my recommendation to be much independent evidence, but I still will be voting this either a +4 or +9, because I personally learned a bunch from it.

This post is one of the LW posts a younger version of myself would have been most excited to read. Building on what I got from the Embedded Agency sequence, this post lays out a broad-strokes research plan for getting the alignment problem right. It points to areas of confusion, it lists questions we should be able to answer if we got this right, it explains the reasoning behind some of the specific tactics the author is pursuing, and it answers multiple common questions and objections. It leaves me with a feeling of "Yeah, I could pursue that too if I wanted, and I expect I could make some progress" which is a shockingly high bar for a purported plan to solve the alignment problem. I give this post +9.

I was aware of this post and I think read it in 2021, but kind of bounced off it for the dumb reason that "split and commit" sounds approximately synonymous with "disagree and commit", though Duncan is using it in a very different way.

In fact, the concept means something pretty damn useful, is my guess, and I can begin to see cases where I wish I was practicing this more. I intended to start. I might need to invent a synonym to make it feel less like an overloaded term. Or disagree and commit on matters of naming things :P

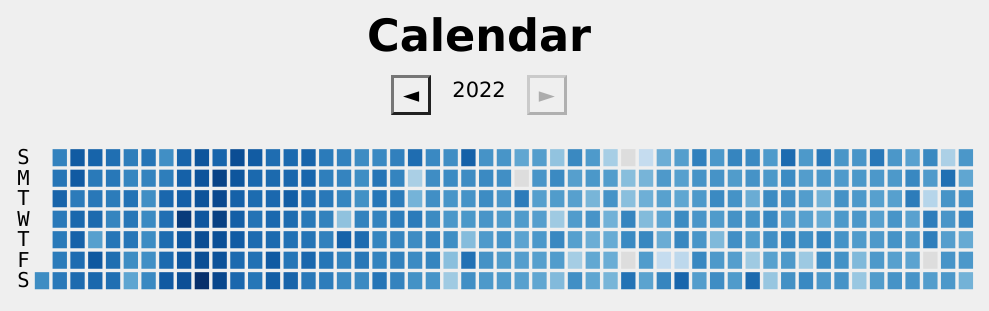

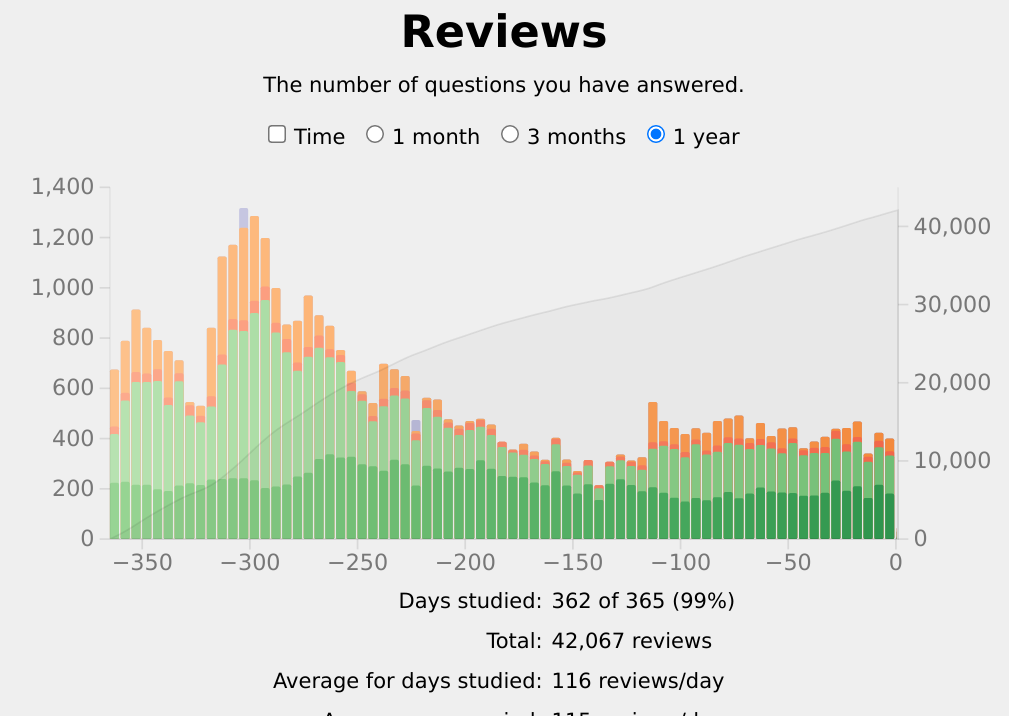

I'm glad I started using Anki. I did Anki 362/365 days this year.

I averaged 19 minutes of review a day (although I really think review tended to take longer), which nominally means I spent 4.75 clock-days studying Anki.
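As a rough check of that arithmetic, here is a minimal sketch. It assumes exactly 19 minutes of review on each of the 362 days, which is a simplification since 19 minutes is only the stated average; the function name `clock_days` is made up for illustration.

```python
# Figures taken from the review; treating the average as exact is an assumption.
MINUTES_PER_REVIEW_DAY = 19
DAYS_REVIEWED = 362


def clock_days(minutes_per_day: float, days: int) -> float:
    """Convert a daily review time in minutes into total 24-hour clock-days."""
    total_minutes = minutes_per_day * days
    return total_minutes / (60 * 24)


total = clock_days(MINUTES_PER_REVIEW_DAY, DAYS_REVIEWED)
print(f"{total:.2f} clock-days")  # prints "4.78 clock-days"
```

Under these assumptions the total comes out close to the 4.75 clock-days quoted above; the small gap is consistent with rounding in the reported average.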

Seems very worth it, in my experience.

This post was a great dive into two topics:

- How an object-level research field has gone, and what are the challenges it faces.

- Forming a model about how technologically optimistic projects go.

I think this post was good in its first edition, but became great after the author displayed an admirable ability to update their mind and a willingness to update their post in light of new information.

Overall I must reluctantly only give this post a +1 vote for inclusion, as I think the books are better served by more general rationality content, but in terms of what I would like to see more of on this site, +9. Maybe I'll compromise and give +4.

For a long time, I could more-or-less follow the logical arguments related to e.g. Newcomb’s problem, but I didn’t really get it, like, it still felt wrong and stupid at some deep level. But when I read Joe’s description of “Perfect deterministic twin prisoner’s dilemma” in this post, and the surrounding discussion, thinking about that really helped me finally break through that cloud of vague doubt, and viscerally understand what everyone’s been talking about this whole time. The whole post is excellent; very strong recommend for the 2021 review.

This post trims down the philosophical premises that sit under many accounts of AI risk. In particular it routes entirely around notions of agency, goal-directedness, and consequentialism. It argues that it is not humans losing power that we should be most worried about, but humans quickly gaining power and misusing such a rapid increase in power.

Re-reading the post now, I have the sense that the arguments are even more relevant than when it was written, due to the broad improvements in machine learning models since it was written. The arguments in this po...

I'm glad for this article because it sparked the conversation about the relevance of behavioral economics. I also agree with Scott's criticism of it (which unfortunately isn't part of the review). But together they made for a great update on the state of behavioral economics.

I checked if there's something new in the literature since these articles were published, and found this paper by three of the authors who wrote the 2020 article Scott wrote about in his article. They conclude that "the evidence of loss aversion that we report in this paper and in Mrkv...

Brilliant article. I’m also curious about the economics side of things.

I found an article which estimates that nuclear power would be two orders of magnitude cheaper if the regulatory process were to be improved, but it doesn’t explain the calculations which led to the ‘two orders of magnitude’ claim. https://www.mackinac.org/blog/2022/nuclear-wasted-why-the-cost-of-nuclear-energy-is-misunderstood

I think this is my second-favorite post in the MIRI dialogues (for my overall review see here).

I think this post was valuable to me in a much more object-level way. I think this post was the first post that actually just went really concrete on the current landscape of efforts in the domain of AI Notkilleveryonism and talked concretely about what seems feasible for different actors to achieve, and what isn't, in a way that parsed for me, and didn't feel either like something obviously political, or delusional.

I didn't find the part about differ...

I just really like the clarity of this example. Noticing concrete lived experience at this level of detail. It highlights the feeling in my own experience and makes me more likely to notice it in real time when it's happening in my own life.

As a 2021 "best of" post, the call for people to share their experiences doesn't make as much sense, particularly should this post end up included in book form. I'm not sure how that fits with the overall process though. I don't wish Anna hadn't asked for more examples!

I appreciate this post, though mostly secondhand. It's special to me because it provided me with a way to participate more-or-less directly in an alignment project: one of my glowfic buddies decided to rope me in to write a glowfic thread in this format for the project [here](https://glowfic.com/posts/5726). I'd like to hear more updates about how it's gone in the last year, though!

EDIT: Oops, in a tired state I got muddled between this AMA post and the original introduction of dath ilan made in an April Fool's post in 2014 (golly, that's a while back)

When this was published, I had little idea of how ongoing a concept dath ilan would become in my world. I think there's value both in the further explorations of this (e.g. Mad Investor Chaos glowfic and other glowfics that illustrate a lot of rationality and better societal function than Earth), but also in just the underlying concept of "what would have produced you as the median pers...

One of the things I like most about this post is that it shows how much careful work is required to communicate. It's not a trick, it's not just being better than other people, it's simple, understandable, and hard work.

I think all the diagrams are very helpful, and really slow down and zoom in on the goal of nailing down an interpretation of your words and succeeding at communicating.

There's a very related concept (almost so obvious that you could say it's contained in the post) of finding common ground, where, in negotiation/conflict/disagreement, you ca...

I'm torn here because this post is incremental progress, and the step size feels small for inclusion in the books. OTOH small-but-real progress is better than large steps in the wrong direction, and this post has the underrepresented virtues of "strong connection to reality" and "modeling the process of making progress". And just yesterday I referred someone to it because it contained concepts they needed.

I think this post was important. I used the phrase 'Law of No Evidence: Any claim that there is “no evidence” of something is evidence of bullshit' several times (mostly in reply to tweets using that phrase or when talking about an article that uses the phrase).

Was it important intellectual progress? I think so. Not as a cognitive tool for use in an ideal situation, where you and others are collaboratively truth-seeking - but for use in adversarial situations, where people, institutions and authorities lie, mislead and gaslight you.

It is not a tool meant t...

This post culminates years of thinking which formed a dramatic shift in my worldview. It is now a big part of my life and business philosophy, and I've shown it to friends many times when explaining my thinking. It's influenced me to attempt my own bike repair, patch my own clothes, and write web-crawlers to avoid paying for expensive API access. (The latter was a bust.)

I think this post highlights using rationality to analyze daily life in a manner much deeper than you can find outside of LessWrong. It's in the spirit of the 2012 post "Rational Toothpast...

As I said in my original comment here, I'm not a parent, so I didn't get a chance to try this. But now I work at a kindergarten, and was reminded of this post by the review process, so I can actually try it! Expect another review after I do :)

One review criticized my post for being inadequate at world modeling - readers who wish to learn more about predictions are better served by other books and posts (but it also praised me for being willing to update the post's content after new information arrived). I don't disagree, but I felt it was necessary to clarify the background to my writing it.

First and foremost, this post was meant specifically as (1) a review of the research progress on Whole Brain Emulation of C. elegans, and (2) a request for more information from the community. I became aware of this resea...

Many people believe that they already understand Dennett's intentional stance idea, and due to that will not read this post in detail. That is, in many cases, a mistake. This post makes an excellent and important point, which is wonderfully summarized in the second-to-last paragraph:

...In general, I think that much of the confusion about whether some system that appears agent-y “really is an agent” derives from an intuitive sense that the beliefs and desires we experience internally are somehow fundamentally different from those that we “merely” infer and a

I like this for the idea of distinguishing between what is real (how we behave) vs what is perceived (other people's judgment of how we are behaving). It helped me see that rather than focusing on making other people happy or seeking their approval, I should instead focus on what I believe I should do (e.g. what kinds of behaviour create value in the world) and measure myself accordingly. My beliefs may be wrong, but feedback from reality is far more objective and consistent than things like social approval, so it's a much saner goal. And more importantly,...

I'm in two minds about this post.

On one hand, I think the core claim is correct: most people are generally too afraid of low negative EV stuff like lawsuits, terrorism, being murdered, etc. I think this is also a subset of the general argument that goes something like "most people are too cowardly. Being less cowardly is in most cases better."

That being said, I have a few key problems with this article that make me downvote it.

- I feel like it's writing to persuade, not to explain. It's all arguments for not caring about lawsuits and no examination of why y

I'd ideally like to see a review from someone who actually got started on Independent Alignment Research via this document, and/or grantmakers or senior researchers who have seen up-and-coming researchers who were influenced by this document.

But, from everything I understand about the field, this seems about right to me, and seems like a valuable resource for people figuring out how to help with Alignment. I like that it both explains the problems the field faces, and it lays out some of the realpolitik of getting grants.

Actually, rereading this, it strikes me as a pretty good "intro to the John Wentworth worldview", weaving a bunch of disparate posts together into a clear frame.

Learning what we can about how ML algorithms generalize seems very important. The classical philosophy of alignment tends to be very pessimistic about anything like this possibly being helpful. (That is, it is claimed that trying to reward "happiness-producing actions" in the training environment is doomed, because the learned goal will definitely generalize to something not-what-you-meant like "tiling the galaxies with smiley faces.") That is, of course, the conservative assumption. (We would prefer not to bet the entire future history of the world on AI ...

I haven't read a ton of Dominic Cummings, but the writing of his that I have read had a pretty large influence on me. It is very rare to get any insider story about how politics works internally from someone who speaks in a mechanistic language about the world, and I pretty majorly updated my models of how to achieve political outcomes, and also majorly updated upwards on my ability to achieve things in politics without going completely crazy (I don't think politics had no effect on Cummings's sanity, but he seems to have weathered it in a healthier way than the vast majority of other people I've seen go into it).

I have been doing various grantmaking work for a few years now, and I genuinely think this is one of the best and most important posts to read for someone who is themselves interested in grantmaking in the EA space. It doesn't remotely cover everything, but almost everything that it does say isn't said anywhere else.

I feel like this post is the best current thing to link to for understanding the point of coherence arguments in AI Alignment, which I think are really crucial, and even in 2023 I still see lots of people make bad arguments either overextending the validity of coherence arguments, or dismissing coherence arguments completely in an unproductive way.

This post in particular feels like it has aged well, and it became surprisingly relevant in the FTX situation. Indeed, post-FTX I saw a lot of people who were confidently claiming that you should not take a 51% bet to double or nothing your wealth, even if you have non-diminishing returns to money, and I sent this post to multiple people to explain why I think that criticism is not valid.
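To make the arithmetic behind the 51% double-or-nothing bet concrete, here is a minimal sketch. It assumes utility is exactly linear in wealth (one reading of "non-diminishing returns to money"); the function names and the stake of 100 are hypothetical, chosen only for illustration, and nothing here comes from the post under review.

```python
def expected_wealth(wealth: float, p_win: float) -> float:
    """Expected wealth after staking everything on a double-or-nothing bet.

    With probability p_win the wealth doubles; otherwise it drops to zero.
    """
    return p_win * (2.0 * wealth) + (1.0 - p_win) * 0.0


def takes_bet_with_linear_utility(wealth: float, p_win: float) -> bool:
    """Assumption: utility is linear in wealth, so the agent compares expected wealth directly."""
    return expected_wealth(wealth, p_win) > wealth


if __name__ == "__main__":
    stake = 100.0  # hypothetical starting wealth
    print(expected_wealth(stake, 0.51))                # roughly 2% above the stake
    print(takes_bet_with_linear_utility(stake, 0.51))
    print(takes_bet_with_linear_utility(stake, 0.49))
```

Under this assumption the bet is worth taking whenever the win probability exceeds 50%, because expected wealth is 2 × p_win × wealth. An agent whose returns to money do diminish would weigh the outcomes differently, which is outside what this sketch covers.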

A decent introduction to the natural abstraction hypothesis, and how testing it might be attempted. A very worthy project, but it isn't that easy to follow for beginners, nor does it provide a good understanding of how the testing might work in detail. What might constitute a success, and what might constitute a failure, of this testing? A decent introduction, but only an introduction, and it should have been part of a sequence or a longer post.

I still appreciate this post for getting me to think about the question "how much language can dogs learn?". I also still find the evidence pretty sus, and mostly tantalizing in the form of "man I wish there were more/better experiments like this."

BUT, what feels (probably?) less sus to me is JenniferRM's comment about the dog Chaser, who learned explicit nouns and verbs. This is more believable to me, and seems to have had more of a scientific setup. (Ideally I'd like to spend this review-time spot-checking that the paper seems reasonable, alas, in the gr...

This post has successfully stuck around in my mind for two years now! In particular, it's made me explicitly aware of the possibility of flinching away from observations because they're normie-tribe-coded.

I think I deny the evidence in most of the cases of dogs generating complex English claims. But it was epistemically healthy for that model anomaly to be rubbed in my face, rather than filter-bubbled away, flinched away from, and ignored.

This is a fantastic piece of economic reasoning applied to a not-flagged-as-economics puzzle! As the post says, a lot of its content is floating out there on the internet somewhere: the draw here is putting all those scattered insights together under their common theory of the firm and transaction costs framework. In doing so, it explicitly hooked up two parts of my world model that had previously remained separate, because they weren't obviously connected.

I think this is a fantastically clear analysis of how power and politics work, that made a lot of things click for me. I agree it should be shorter but honestly every part of this is insightful. I find myself confused even how to review it, because I don't know how to compare this to how confusing the world was before this post. This is some of the best sense-making I have read about how governmental organizations function today.

There's a hope that you can just put the person who's most obviously right in charge. This post walks through the basic things th...

This post was personally meaningful to me, and I'll try to cover that in my review while still analyzing it in the context of LessWrong articles.

I don't have much to add about the 'history of rationality' or the description of interactions of specific people.

Most of my value from this post wasn't directly from the content, but from how the content connected to things outside of rationality and LessWrong. So, basically, I loved the citations.

LessWrong is very dense in self-links and self-citations, and to a lesser degree does still have a good number of li...

I think this post is valuable because it encourages people to try solving very hard problems, specifically by showing them how they might be able to do that! I think its main positive effect is simply in pointing out that it is possible to get good at solving hard problems, and the majority of the concretes in the post are useful for continuing to convince the reader of this basic possibility.

I'm leaving this review primarily because this post somehow doesn't have one yet, and it's way too important to get dropped out of the Review!

ELK had some of the most alignment community engagement of any technical content that I've seen. It is extremely thorough, well-crafted, and aims at a core problem in alignment. It serves as an exemplar of how to present concrete problems to induce more people to work on AI alignment.

That said, I personally bounced after reading the first few pages of the document. It was good as far as I got, but it was pretty effortful to get through, and (as mentioned above) already had tons of attention on it.

I like this post for reinforcing a point that I consider important about intellectual progress, and for pushing against a failure mode of the Sequences-style rationalists.

As far as I can tell, intellectual progress is made bit by bit, with later work building on earlier work. Francis Bacon gets credit for a landmark evolution of the scientific method, but it didn't spring from nowhere; he was building on ideas that had built on ideas, etc.

This says the same is true for our flavor of rationality. It's built on many things, and not just probability theory.

The f...

I think of all the posts that Holden has written in the last two years, this is the one that I tend to refer to by far the most, in particular the "size of economy" graph.

I think there are a number of other arguments that lead you to roughly the same conclusion ("that whatever has been happening for the last few centuries/millennia has to be an abnormal time in history, unless you posit something very cyclical"), that other people have written about (Luke's old post about "there was only one industrial revolution" is the one that I used to link for this the...

This was an important and worthy post.

I'm more pessimistic than Ajeya; I foresee thorny meta-ethical challenges with building AI that does good things and not bad things, challenges not captured by sandwiching on e.g. medical advice. We don't really have much internal disagreement about the standards by which we should judge medical advice, or the ontology in which medical advice should live. But there are lots of important challenges that are captured by sandwiching problems - sandwiching requires advances in how we interpret human feedback, and how we tr...

I'm generally happy to see someone do something concrete and report back, and this was an exceptionally high-value thing to try.

This post felt like a great counterpoint to the drowning child thought experiment, and as such I found it a useful insight. A reminder that it's okay to take care of yourself is important, especially in these times and in a community of people dedicated to things like EA and the Alignment Problem.

This was a useful and concrete example of a social technique I plan on using as soon as possible. Being able to explain why is super useful to me, and this post helped me do that. Explaining explicitly the intuitions behind communication cultures is useful for cooperation. This post feels like a step in the right direction in that regard.

A great explanation of something I've felt, but not been able to articulate. Connecting the ideas of Stag-Hunt, Coordination problems, and simulacrum levels is a great insight that has paid dividends as an explanatory tool.

I really enjoyed this post as a compelling explanation of slack in a domain that I don't see referred to that often. It helped me realize the value of having "unproductive" time that is unscheduled. It's now something I consider when previously I did not.

This is a post about the mystery of agency. It sets up a thought experiment in which we consider a completely deterministic environment that operates according to very simple rules, and ask what it would be for an agentic entity to exist within that.

People in the game of life community actually spent some time investigating the empirical questions that were raised in this post. Dave Greene notes:

...The technology for clearing random ash out of a region of space isn't entirely proven yet, but it's looking a lot more likely than it was a year ago, that a work

This post attempts to separate a certain phenomenon from a certain very common model that we use to understand that phenomenon. The model is the "agent model" in which intelligent systems operate according to an unchanging algorithm. In order to make sense of there being an unchanging algorithm at the heart of each "agent", we suppose that this algorithm exchanges inputs and outputs with the environment via communication channels known as "observations" and "actions".

This post really is my central critique of contemporary artificial intelligence discourse....

This is an essay about methodology. It is about the ethos with which we approach deep philosophical impasses of the kind that really matter. The first part of the essay is about those impasses themselves, and the second part is about what I learned in a monastery about addressing those impasses.

I cried a lot while writing this essay. The subject matter -- the impasses themselves -- is deeply meaningful to me, and I have the sense that these impasses really do matter.

It is certainly true that there are these three philosophical impasses -- each has been discussed in...

The underlying assumption of this post is looking increasingly unlikely to obtain. Nevertheless, I find myself back here every once in a while, wistfully fantasizing about a world that might have been.

I think the predictions hold up fairly well, though it's hard to evaluate, since they are conditioning on something unlikely, and because it's only been 1.5 years out of 20, it's unsurprising that the predictions look about as plausible now as they did then. I've since learned that the bottleneck for drone delivery is indeed very much regulatory, so who know...

Quick self-review: